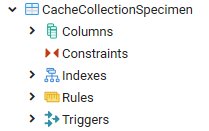

Cache Database

Transfer Via BCP Export

Export using the transfer via bcp

Overview

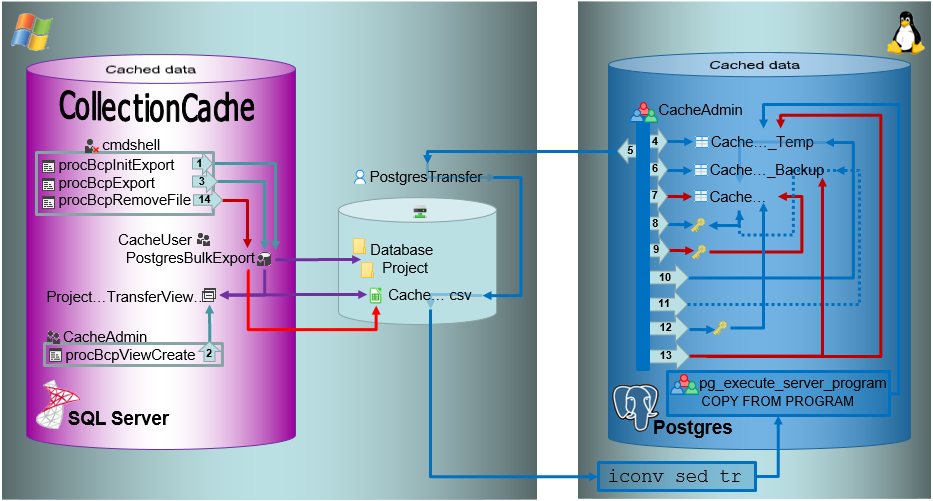

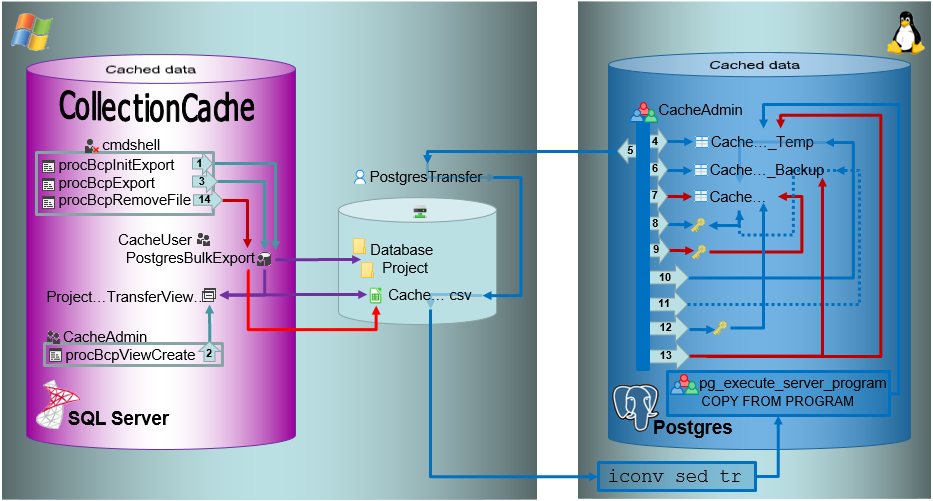

The image shows an overview of the steps described below. The numbers in the arrows refer to the described steps.

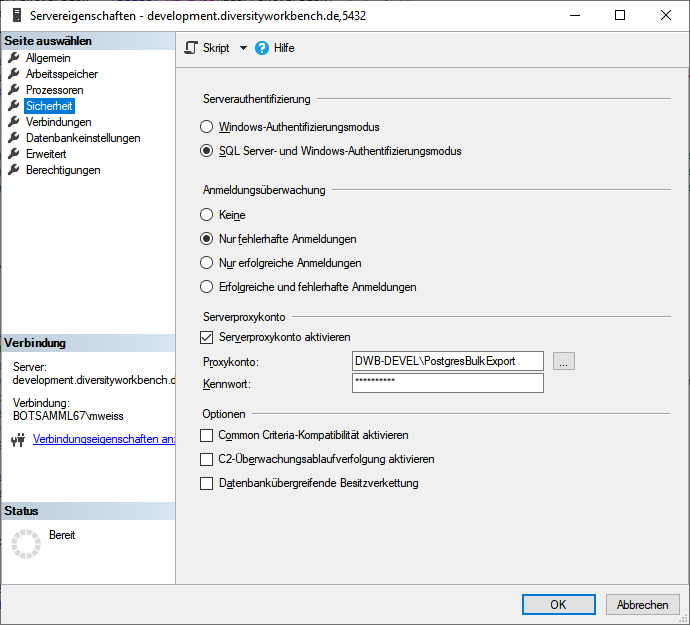

Export

Initialization of the batch export

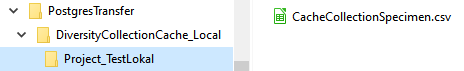

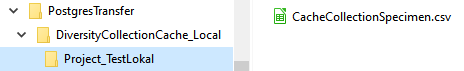

In the shared directory, folders are created following the pattern .../<Database>/<Schema>. The data are exported into a csv file in the created schema (i.e. project) folder in the shared directory.

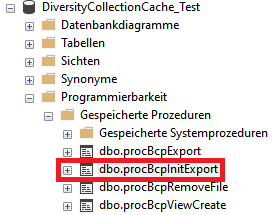

The initialization of the export is performed by the procedure procBpcInitExport (see below and [ 1 ] in the overview image above).

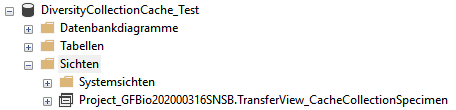

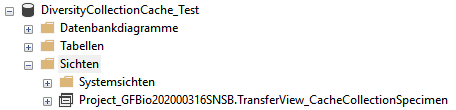

Creation of view as source for the batch export

To export the data into the file, a view (see below) is created that transforms the data in the table of the SQL-Server database according to the requirements of the export (quoting and escaping of existing quotes). The view is created by the procedure procBcpViewCreate (see above and [ 2 ] in the overview image above).

The views provide the data from the SQL-Server tables in the sequence defined in the Postgres tables and reformat the string values (see example below).

CASE WHEN \[AccessionNumber\] IS NULL THEN NULL ELSE \'\"\' + REPLACE(\[AccessionNumber\], \'\"\', \'\"\"\') + \'\"\' END AS \[AccessionNumber\]

Export of the data into a csv file in the transfer directory

The data are exported using the procedure procBcpExport (see above and [ 3 ] in the overview image above) into a csv file in the directory created in the shared folder (see below).

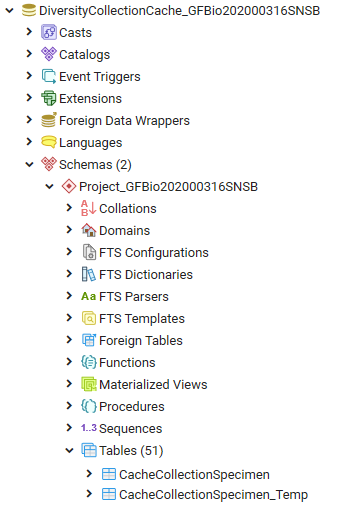

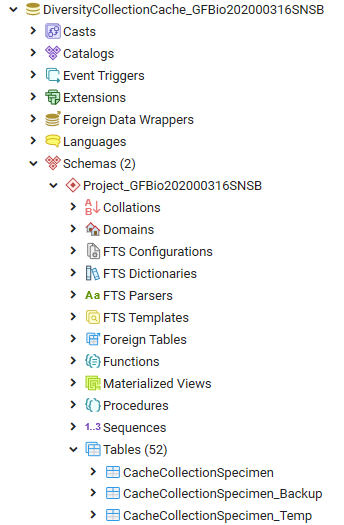

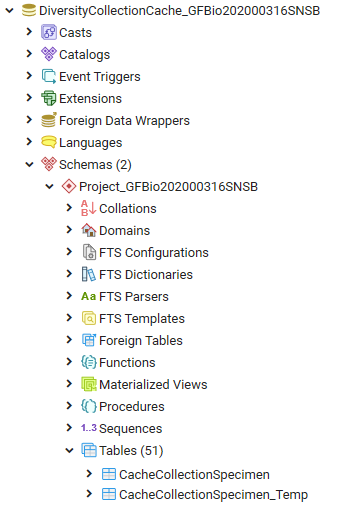

For the intermediate storage of the data, a temporary table (..._Temp) is created (see below and [ 4 ] in the overview image above). This table is the target of the bash import described below.

As Postgres accepts only UTF-8 without the Byte Order Mark (BOM), the exported csv file must be converted into UTF-8 without BOM. For this purpose a script is provided for every Windows SQL-Server instance (/database/exchange/bcpconv_INSTANCE). Each script takes the UTF-16LE file that should be converted as its argument; the script to call depends on the name of the instance, e.g. for INSTANCE 'devel':

/database/exchange/bcpconv_devel DiversityCollectionCache_Test/Project_GFBio202000316SNSB/CacheCollectionSpecimen.csv

The scripts are designed as shown below (for INSTANCE 'devel'):

#!/bin/bash

iconv -f UTF16LE -t UTF-8 /database/exchange/devel/\$1 \| sed \'1s/\^\\xEF\\xBB\\xBF//\' \| tr -d \'\\r\\000\'

As a first step, iconv converts the file from UTF-16LE to UTF-8. As the next step, sed removes the Byte Order Mark (BOM) from the first line. As the final step, tr removes carriage returns and NUL characters.
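The effect of the three steps can be checked on a small sample. The following is only a sketch: the function name convert_bcp_file and the sample content are made up for illustration, and it assumes iconv and GNU sed are available, as on a typical Linux Postgres server. Unlike the provided scripts, it takes the full path of the file instead of a path relative to the mount point.

```shell
#!/bin/bash
# Sketch only, not one of the provided bcpconv_INSTANCE scripts.
# Applies the same three steps to the file given as first argument.
convert_bcp_file() {
    # 1. UTF-16LE -> UTF-8, 2. strip the BOM on line 1, 3. drop CR and NUL
    iconv -f UTF-16LE -t UTF-8 "$1" | sed '1s/^\xEF\xBB\xBF//' | tr -d '\r\000'
}

# Build a tiny UTF-16LE sample: BOM, "a", TAB, "b", CR, LF
sample=$(mktemp)
printf '\377\376a\000\t\000b\000\r\000\n\000' > "$sample"

# Show the converted bytes; neither BOM nor CR should remain
convert_bcp_file "$sample" | od -c
rm -f "$sample"
```

Inspecting the result with od makes the invisible characters (BOM, carriage return, NUL) visible, which is useful when an import fails on the first or last column of a row.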

Import with COPY

The script above is used as the source for the import into Postgres using the Postgres COPY command as shown in the example below (for INSTANCE 'devel').

COPY \"Project_GFBio202000316SNSB\".\"CacheCollectionSpecimen_Temp\" FROM PROGRAM \'bash /database/exchange/bcpconv_devel DiversityCollectionCache_Test/Project_GFBio202000316SNSB/CacheCollectionSpecimen.csv\' with delimiter E\'\\t\' csv;

The options set the tab character as delimiter (with delimiter E\'\\t\') and csv as the format of the file (csv).
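How the parts of the statement depend on the instance, database, schema and table can be sketched as a small shell function. This is an illustration only: the function name build_copy_statement is hypothetical, the statement is merely printed and not executed, and the names are assumed to contain no quotation marks.

```shell
#!/bin/bash
# Sketch only: prints the COPY statement for one table.
# Arguments: INSTANCE, SQL-Server cache database, schema (project), table.
build_copy_statement() {
    local instance=$1 database=$2 schema=$3 table=$4
    # In an unquoted here-document \\ yields a single backslash, so E'\t' is printed
    cat <<EOF
COPY "${schema}"."${table}_Temp" FROM PROGRAM 'bash /database/exchange/bcpconv_${instance} ${database}/${schema}/${table}.csv' with delimiter E'\\t' csv;
EOF
}

build_copy_statement devel DiversityCollectionCache_Test Project_GFBio202000316SNSB CacheCollectionSpecimen
```

With the arguments shown, the function prints the statement from the example above.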

Within the csv file, empty fields are taken as NULL values and quoted empty strings ("") are taken as empty strings. All strings must be enclosed in quotation marks ("...") and quotation marks (") within the strings must be replaced by 2 quotation marks ("") - see example below. This conversion is performed in the view described above.

any\"string → \"any\"\"string\"
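The quoting rule can be tried out on the command line. The following is only a sketch: the function name quote_csv_field is made up for illustration, and in the actual transfer the quoting is done by the view on the SQL-Server, not by a shell script. NULL values, which the view passes through unquoted, are not covered here.

```shell
#!/bin/bash
# Sketch only: doubles embedded quotation marks and wraps the value
# in quotation marks, as required by the csv format of COPY.
quote_csv_field() {
    printf '"%s"\n' "$(printf '%s' "$1" | sed 's/"/""/g')"
}

quote_csv_field 'any"string'
```

An empty argument yields a quoted empty string (""), which COPY reads as an empty string rather than as NULL.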

Bash conversion and Import with COPY relate to [ 5 ] in the overview image above. The COPY command is followed by a test for the existence of the created file.

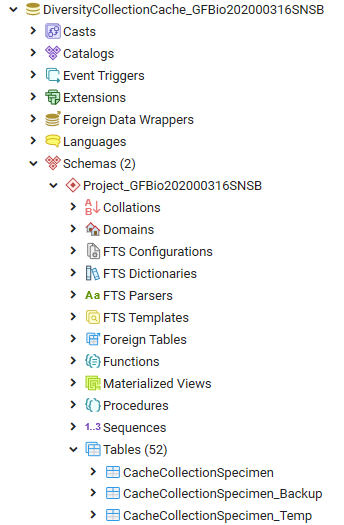

Backup of the data from the main table

Before the old data are removed from the main table, they are stored in a backup table (see below and [ 6 ] in the overview image above).

Removing data from the main table

After a backup has been created and the new data are ready for import, the main table is prepared. As a first step, the old data are removed (see [ 7 ] in the overview image above).

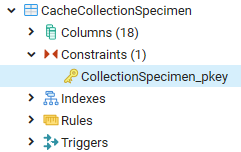

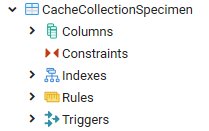

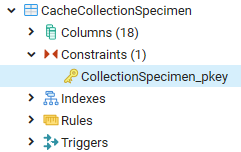

Getting the primary key of the main table

To enable a fast entry of the data, the primary key must be removed. Therefore the definition of this key must be stored for recreation after the import (see below and [ 8 ] in the overview image above).

Removing the primary key from the main table

After the definition of the primary key has been extracted from the table, the primary key is removed (see below and [ 9 ] in the overview image above).

Inserting data from the intermediate storage into the main table and clean up

After the data and the primary key have been removed, the new data are transferred from the temporary table into the main table (see [ 10 ] in the overview image above) and the number of transferred rows is compared to the number in the temporary table to ensure the correct transfer.

In case of a failure the old data will be restored from the backup table (see [ 11 ] in the overview image above).

After the data have been imported, the primary key is restored (see [ 12 ] in the overview image above). Finally, the intermediate table and the backup table (see [ 13 ] in the overview image above) and the csv file (see [ 14 ] in the overview image above) are removed.

Cache Database

Transfer Via BCP Setup

Setup for the transfer via bcp

There are several preconditions to the export via bcp.

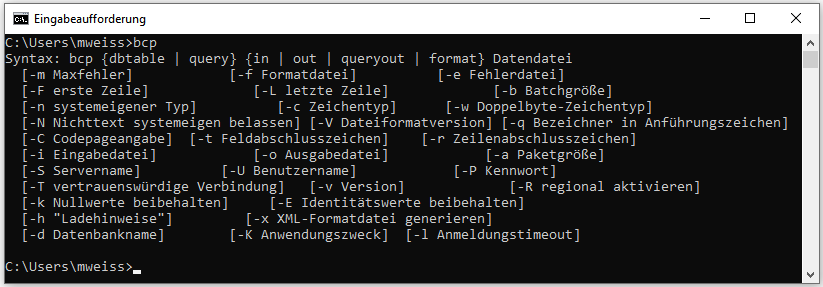

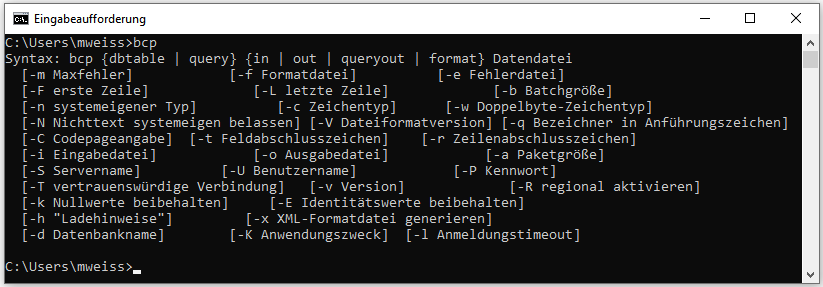

bcp

The export relies on the external bcp software that must be installed on the database server. To test if bcp is installed, start the command line and type bcp. If bcp is installed, you get a result like the one shown below.

SQL-Server

The transfer via bcp is not possible with SQL-Server Express editions. To use this option you need at least SQL-Server Standard edition.

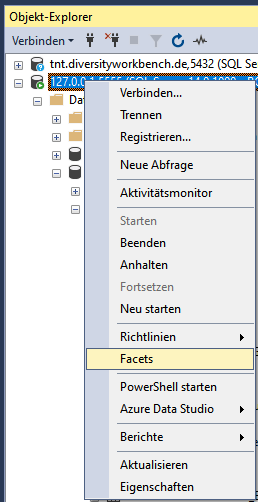

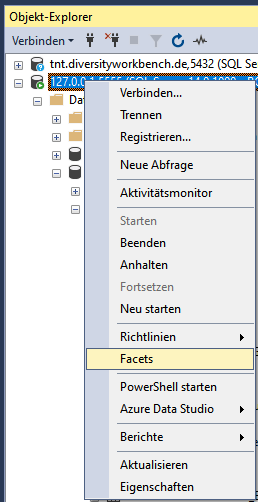

To enable the transfer, xp_cmdshell must be enabled on the SQL-Server. Choose Facets from the context menu as shown below.

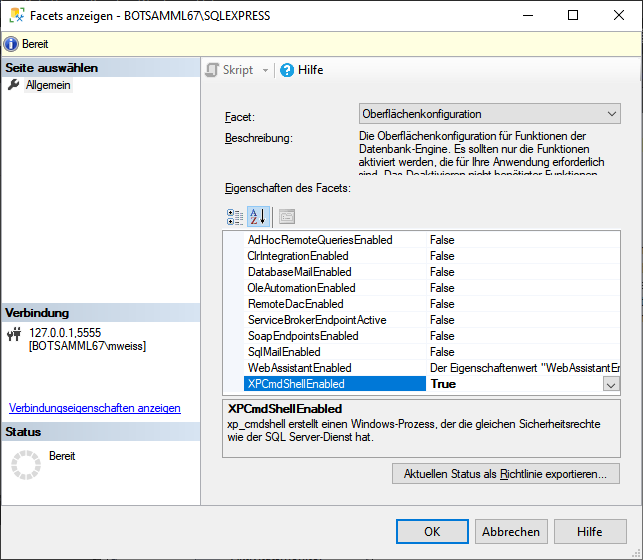

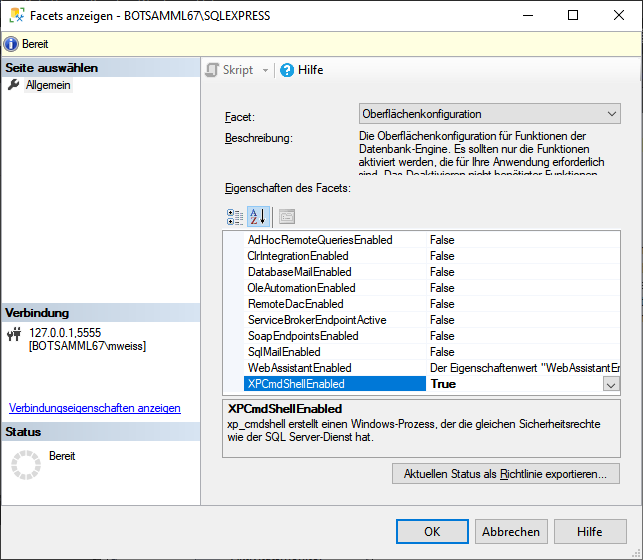

In the window that will open, change XPCmdShellEnabled to True as shown below.

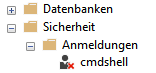

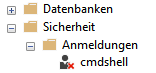

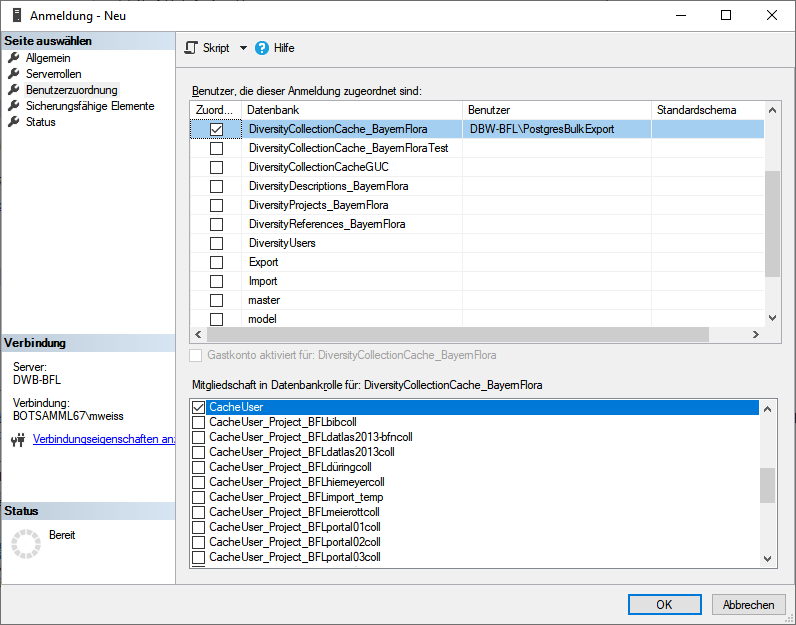

Login cmdshell

The update script for the cache database will create a login cmdshell used for the transfer of the data (see below). This login gets a random password and is denied permission to connect to the server. It is needed to execute the transfer procedures (see below) and should not be changed. The login is set to disabled as shown below.

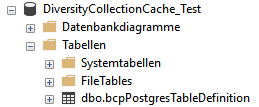

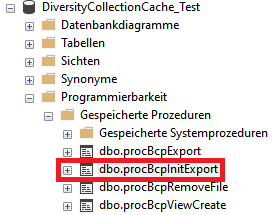

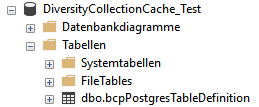

Stored procedures

Furthermore, 4 procedures and a table for the export of the data into a shared directory on the Postgres server are created (see below).

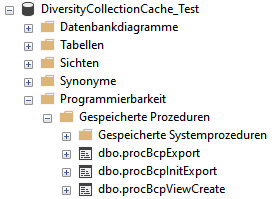

Login PostgresBulkExport

You have to create a Windows login (e.g. PostgresBulkExport) on the server with full access to the shared directory and set this account as the server proxy:

- Open Windows Administrative Tools - Computer Management - System Tools - Local Users and Groups

- click on the folder Users and select New User...

- in the window that will open, enter the details for the user (e.g. PostgresBulkExport) and click **OK** to create the user

- in the File Explorer select the shared folder where the files from the export will be stored

- from the context menu of the shared folder (e.g. u:\\postgres) select Properties

- in the tab Security click on the button Edit…

- in the window that will open click on the button Add…

- type or select the user (e.g. PostgresBulkExport) and click OK

- select the new user in the list and in Permissions check Full Control at Allow

- leave with the button OK

- close the window with the button OK

- go through the corresponding steps for the share (e.g. u:) to ensure access

As an alternative you may use SQL to create the login:

CREATE LOGIN \[DOMAIN\\LOGIN\] FROM WINDOWS;

where DOMAIN is the domain of the Windows server and LOGIN is the name of the Windows user.

exec sp_xp_cmdshell_proxy_account \'DOMAIN\\LOGIN\',\'[?password?]\';

where DOMAIN is the domain of the Windows server, LOGIN is the name of the Windows user and [?password?] is the password for the login.

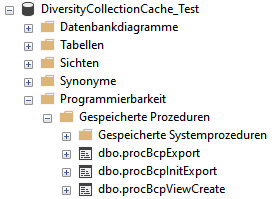

After the user is created and the permissions are set, set this user as cmdshell proxy (see below).

Create a new login:

- Open SQL Server Management Studio

- select Security

- select Logins

- in the context menu select New Login …

- in the window that will open, click on the Search... button

- type in the object name (e.g.: PostgresBulkExport)

- click on the button Check Names (the name gets expanded with the domain name)

- click the button OK twice to leave the window and create the new login

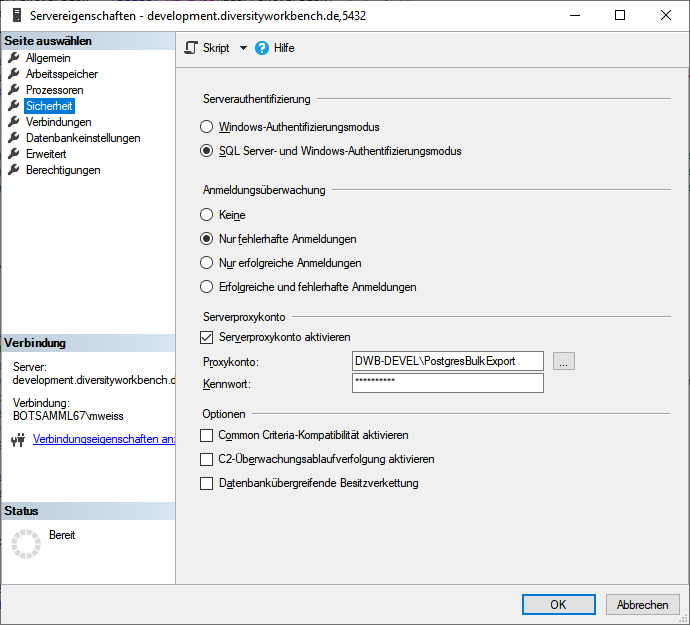

In the context menu select Properties and include the login in the users of the cache database (see below).

On the database server, add this user as a new login and add it to the cache database. This login must be in the database role CacheUser to be able to read the data that should be exported (see below).

Login PostgresTransfer

Another login (e.g. PostgresTransfer) on the Windows server is needed with read-only access to the shared directory to read the exported data and import them into the Postgres database. This login is used by the Postgres server to access the shared directory. It does not need to have interactive login permissions.

Shared directory on Windows-server

On the Windows side the program needs an accessible shared directory in which sub-directories will be created and into which the files will be exported. This directory is made accessible (shared folder) via CIFS/SMB with read-only access for the login used for the data transfer (e.g. PostgresTransfer). This shared directory is mounted on the Postgres server using CIFS (/database/exchange/INSTANCE). The INSTANCE is an identifier or server name for the Windows server. If several Windows servers deliver data to one Postgres server, these INSTANCE identifiers must be unique for each Windows server.

On the Windows server this directory is available as the share "postgres". The directory is mounted depending on the INSTANCE under the path /database/exchange/INSTANCE. The corresponding fstab entries for the INSTANCEs 'bfl' and 'devel' are:

/etc/fstab:

//bfl/postgres /database/exchange/bfl cifs ro,username=PostgresTransfer,password=[?password?]

//DeveloperDB/postgres /database/exchange/devel cifs ro,username=PostgresTransfer,password=[?password?]

| command | meaning |
| --- | --- |
| [?Instance?]/postgres | is the UNC path to the shared folder |
| /database/exchange/[?Instance?] | is the local path on the Postgres server where the shared folder is mounted |
| PostgresTransfer | is the Windows user name to access the shared folder |
| ?password? | is the password for the Windows user to access the shared folder |

These mounts are needed for every Windows server or SQL-Server instance. Instead of fstab you may use systemd for mounting.
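A systemd mount unit equivalent to an fstab entry might look like the output of the following sketch. Several points are assumptions and not taken from the setup described here: the function name print_mount_unit, the credentials file /etc/cifs-credentials-INSTANCE (a root-only file with username= and password= lines, used instead of a password in the options), and instance names that consist only of letters and digits so that the unit name needs no escaping. systemd requires the unit file to be named after the mount point, with the slashes replaced by dashes.

```shell
#!/bin/bash
# Sketch only: prints a systemd mount unit for one INSTANCE.
# Arguments: INSTANCE identifier, name of the Windows server.
print_mount_unit() {
    local instance=$1 server=$2
    cat <<EOF
# /etc/systemd/system/database-exchange-${instance}.mount
[Unit]
Description=Transfer directory of ${server}

[Mount]
What=//${server}/postgres
Where=/database/exchange/${instance}
Type=cifs
Options=ro,credentials=/etc/cifs-credentials-${instance}

[Install]
WantedBy=multi-user.target
EOF
}

print_mount_unit devel DeveloperDB
```

Keeping the password in a separate credentials file avoids storing it in a world-readable configuration file; whether this fits your environment should be decided together with the Postgres server administrator.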

Bug fixing and error handling

If you get the following error:

An error occurred during the execution of xp_cmdshell. A call to \'LogonUserW\' failed with error code: \'1326\'.

This normally means that the password is wrong, but it could also be a policy problem.

First recreate the proxy user with the correct password:

use \[Master\];

-- Erase Proxy user:

exec sp_xp_cmdshell_proxy_account Null;

go

-- create proxy user:

exec sp_xp_cmdshell_proxy_account \'[?domain?]\\PostgresBulkExport\',\'[?your password for PostgresBulkExport?]\';

go

If this does not work, change the policy by adding the proxy user to the group that is allowed to log on as a batch job:

- Open the Local Security Policy

- select Log on as a batch job

- click Add user or group ...

- type the user in the object name field (e.g. PostgresBulkExport)

- leave the Local Security Policy editor by clicking the button OK twice

For further information see

https://www.databasejournal.com/features/mssql/xpcmdshell-for-non-system-admin-individuals.html

NOT FOR PRODUCTION!

Setup of a small test routine. Remove the cmdshell account after your tests and recreate it with the CacheDB-update-scripts from DiversityCollection (replace [?marked values?] with the values of your environment):

USE \[master\];

CREATE LOGIN \[cmdshell\] WITH PASSWORD = \'[?your password for cmdshell?]\', CHECK_POLICY=OFF;

CREATE USER \[cmdshell\] FOR LOGIN \[cmdshell\];

GRANT EXEC ON xp_cmdshell TO \[cmdshell\];

-- setup proxy

CREATE LOGIN \[[?domain?]\\PostgresBulkExport\] FROM WINDOWS;

exec sp_xp_cmdshell_proxy_account \'[?domain?]\\PostgresBulkExport\',\'[?your password for PostgresBulkExport?]\';

USE \[[?DiversityCollection cache database?]\];

CREATE USER \[cmdshell\] FOR LOGIN \[cmdshell\];

-- recreate Proxy User:

exec sp_xp_cmdshell_proxy_account Null;

go

-- create proxy user:

exec sp_xp_cmdshell_proxy_account \'[?domain?]\\PostgresBulkExport\',\'[?your password for PostgresBulkExport?]\';

go

-- Make a test:

create PROCEDURE \[dbo\].\[procCmdShelltest\]

with execute as \'cmdshell\'

AS

SELECT user_name(); \-- should always return \'cmdshell\'

exec xp_cmdshell \'dir\';

go

-- allow execution to non privileged users/roles (the procedure must exist before execute can be granted)

grant execute on \[dbo\].\[procCmdShelltest\] to \[CacheUser\];

grant execute on \[dbo\].\[procCmdShelltest\] to \[[?domain?]\\AutoCacheTransfer\];

go

execute \[dbo\].\[procCmdShelltest\];

go

-- Should return two result sets

-- \'cmdshell\'

-- and the filelisting of the current folder

-- Check Proxy user:

-- The proxy credential will be called ##xp_cmdshell_proxy_account##.

select credential_identity from sys.credentials where name = \'##xp_cmdshell_proxy_account##\';

-- should be the assigned windows user, e.g. [?domain?]\\PostgresBulkExport

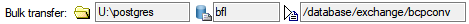

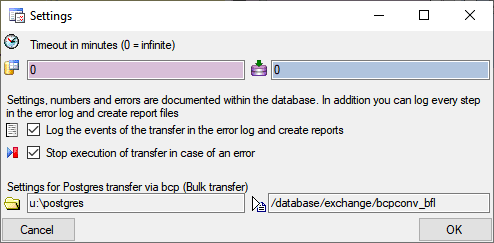

Settings for the bulk transfer

To enable the bulk transfer via bcp, enter the path of the directory on the Postgres server, the mount point of the Postgres server and the bash file as shown below.

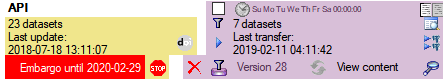

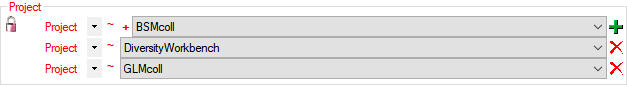

To use the batch transfer for a project, click on the checkbox as shown below. The image for the transfer will change. Now the data of every table within the project will be transferred via bcp. To return to the standard transfer, just deselect the checkbox.

Role pg_execute_server_program

The user executing the transfer must be in the role pg_execute_server_program.

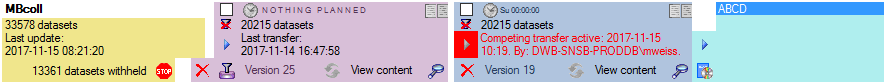

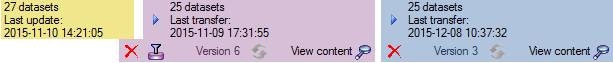

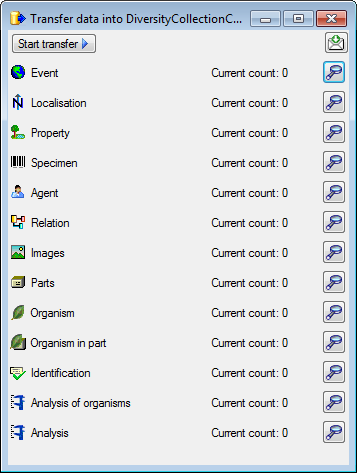

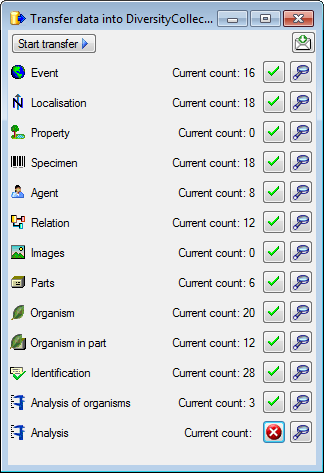

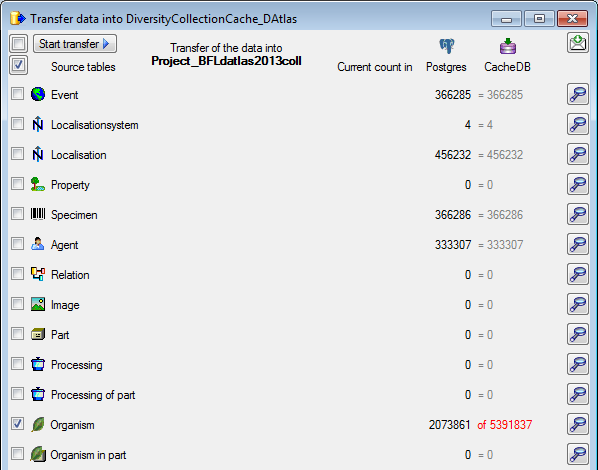

: Transfer data of a single project

: Transfer data of the data via bcp

: Transfer data of all projects and sources selected for the schedule based transfer

If you transferred only a part of the data, this will be indicated by a thin red border for the current session.

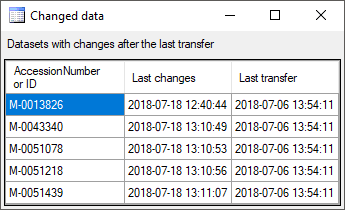

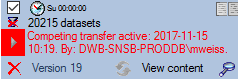

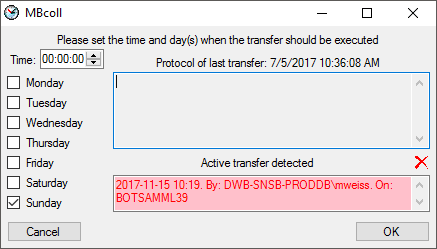

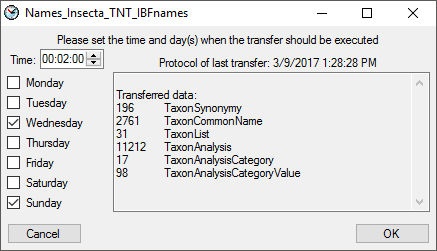

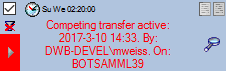

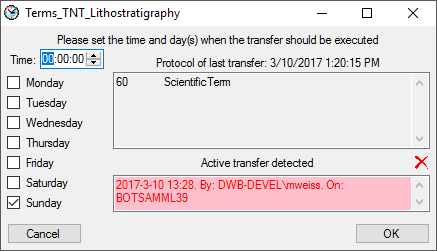

If a competing transfer is active for the same step, this will be indicated as shown below. While this transfer is active, any further transfer for this step will be blocked.

Click on the button to set the values. The window that will open offers a list with existing entries for the respective value. Please ask the Postgres server administrator for details.


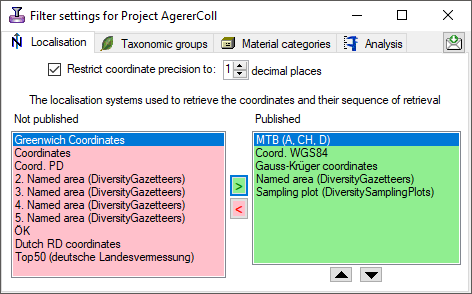


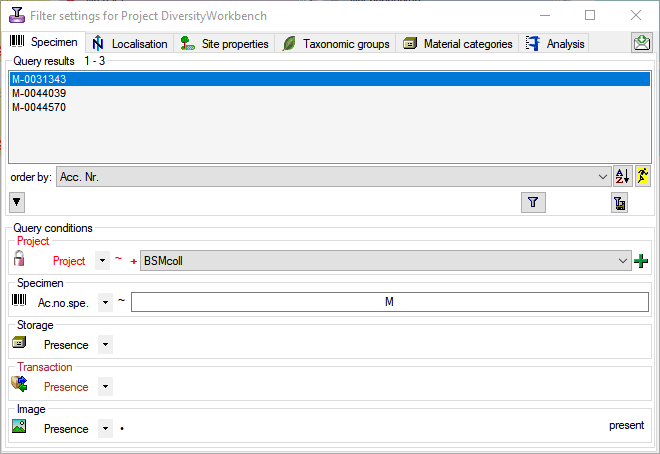


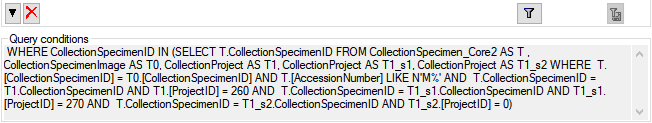

The restrictions will be converted into a SQL statement as shown below that will be applied in the filter for the transfer (see below).

Add projects to the list for the restriction as shown below:

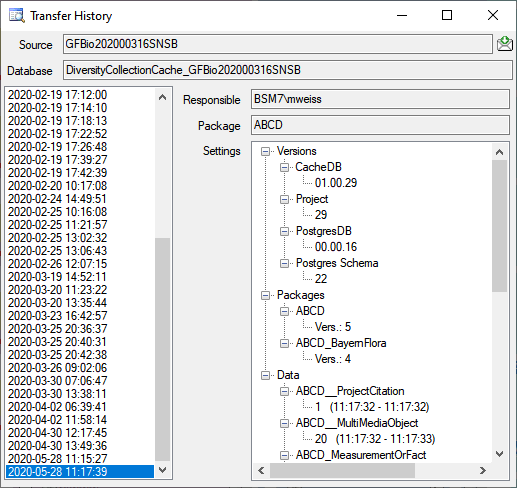

Transaction: If a transaction should be present

Image: If a specimen image should be present