Diversity Collection

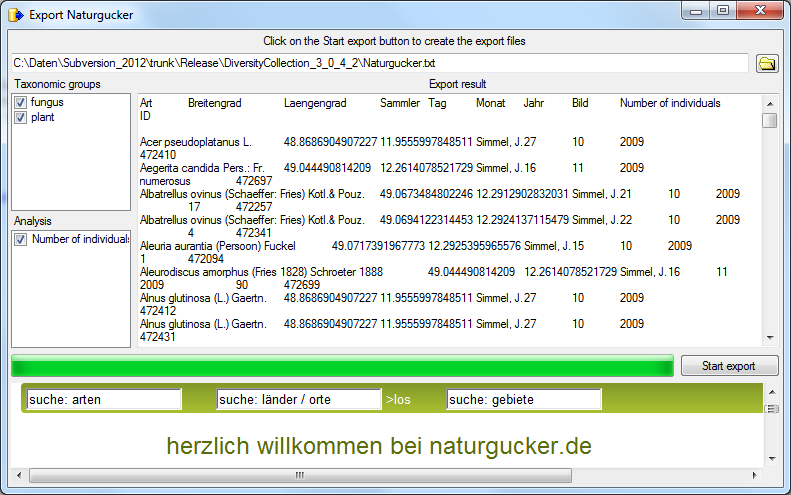

Export Wizard

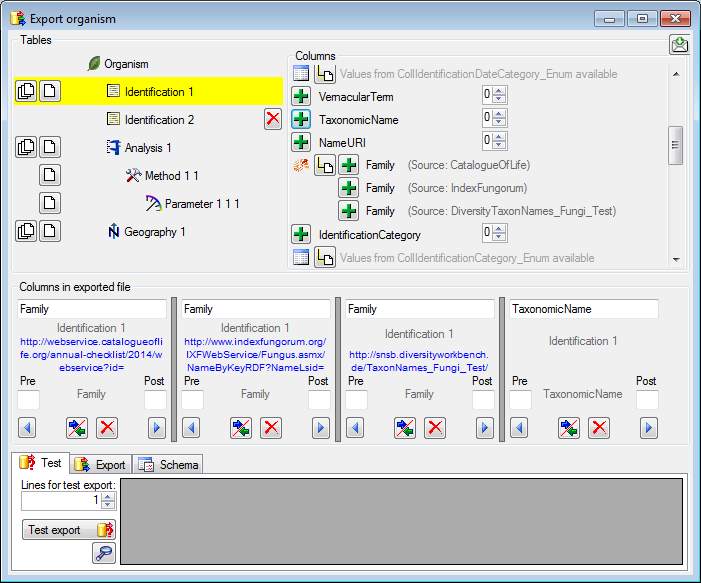

The export wizard provides a possibility to export the data selected in

the main form. The data are exported as tab separated text file. The

export may include transformations of the data as well as information

provided by linked modules and webservices. Choose Data - Export -

Export wizard

The export wizard provides a possibility to export the data selected in

the main form. The data are exported as tab separated text file. The

export may include transformations of the data as well as information

provided by linked modules and webservices. Choose Data - Export -

Export wizard  from the menu and then

select one of the export targets (Event, Specimen, ...). For a short

introduction see the tutorial.

from the menu and then

select one of the export targets (Event, Specimen, ...). For a short

introduction see the tutorial.

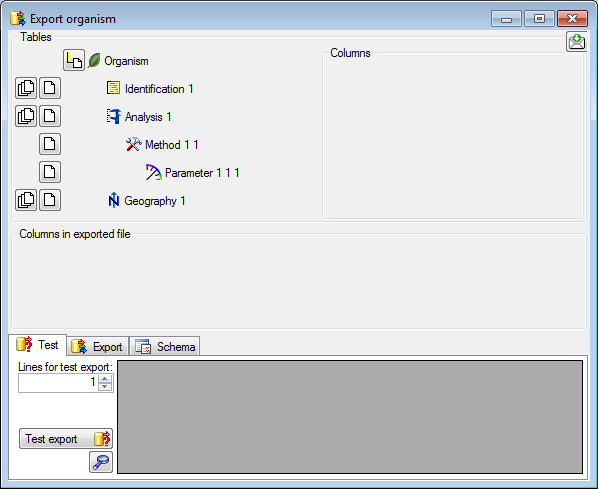

Adding tables

There are the following ways to add tables:

There are the following ways to add tables:

One parallel table

One parallel table Several parallel tables according to

selected data

Several parallel tables according to

selected data Dependent table

Dependent table

All options will include the depending tables as defined for the default

table. The  option for several tables will add

as many tables as there are found in the data.

option for several tables will add

as many tables as there are found in the data.

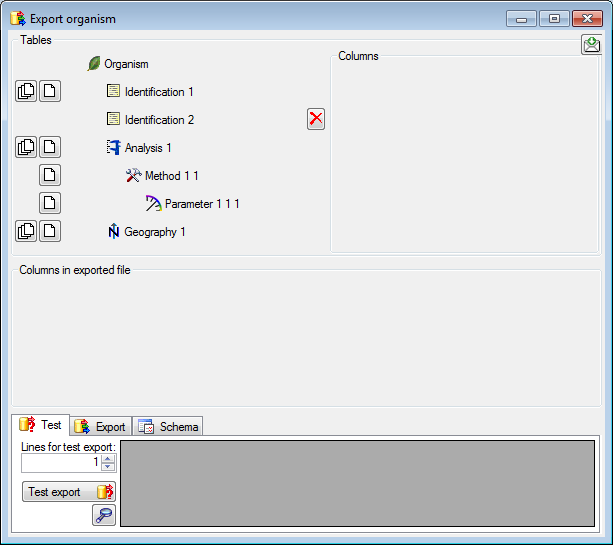

If you added parallel tables, you should set the sequence of the

datasets within these tables: For the columns that should be used for

sorting the data, set the ordering sequence to a value > 0 and choose

if the ordering sequence should be ascending  or

descending

or

descending  .

.

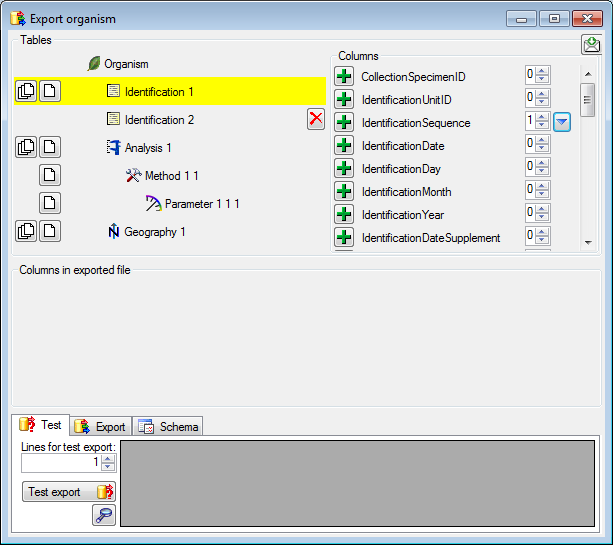

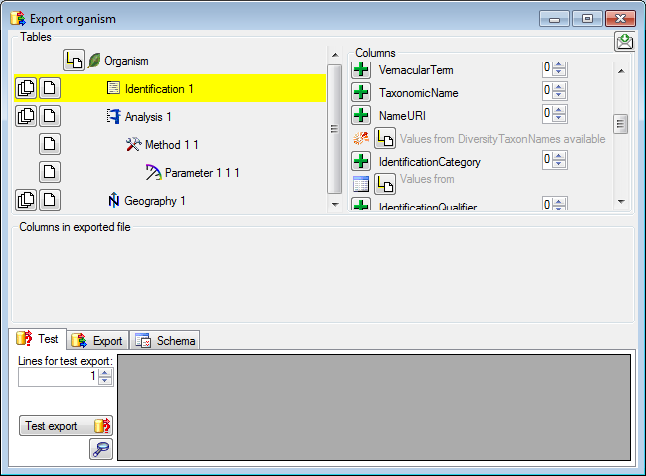

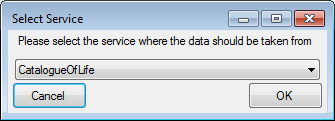

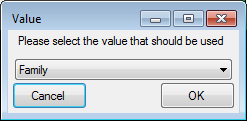

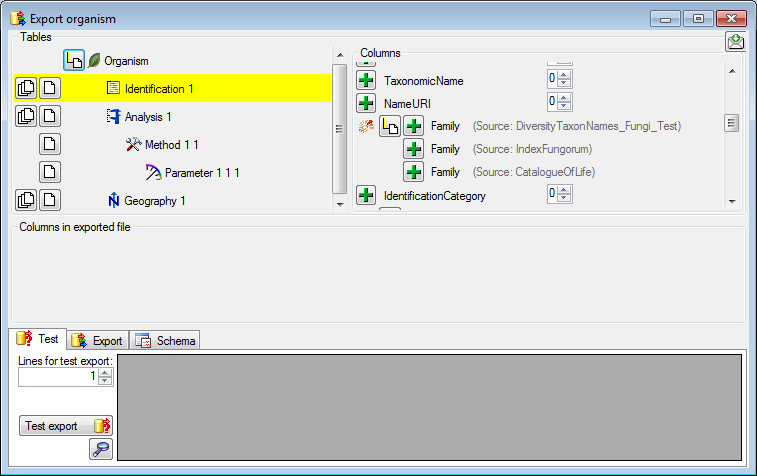

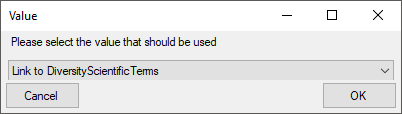

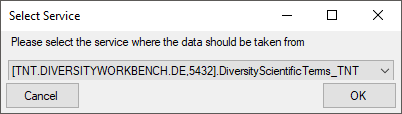

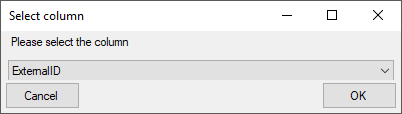

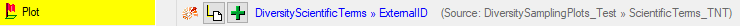

Certain columns in the database may provide information linked to

another table

Certain columns in the database may provide information linked to

another table  or a module resp. webservice

or a module resp. webservice

. Click on the

. Click on the  button

to add a linked value.

button

to add a linked value.

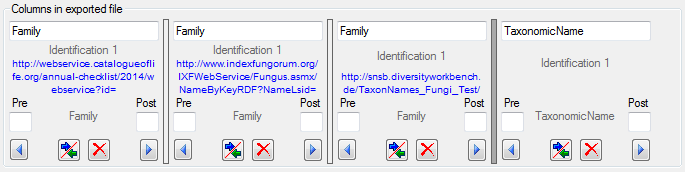

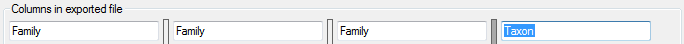

Adding and editing file columns

To add columns to the exported file, use the

To add columns to the exported file, use the  buttons. In the textbox at the top of the file column, you can change

the header for the column. To change the position of a file column use

the

buttons. In the textbox at the top of the file column, you can change

the header for the column. To change the position of a file column use

the  resp.

resp.  button. To fuse

a column with the previous column, click in the gray bar

button. To fuse

a column with the previous column, click in the gray bar

on the left side of the column that will change to

on the left side of the column that will change to  for fused columns. To remove a file column, use the

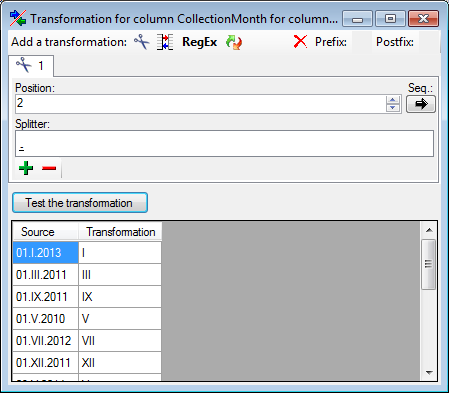

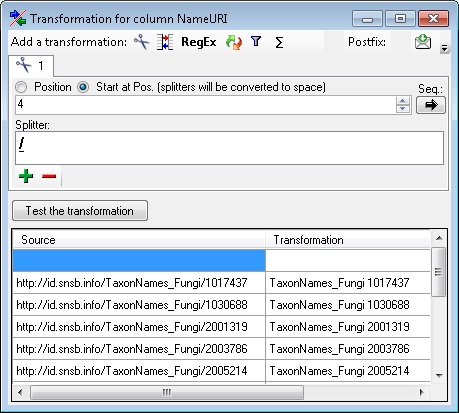

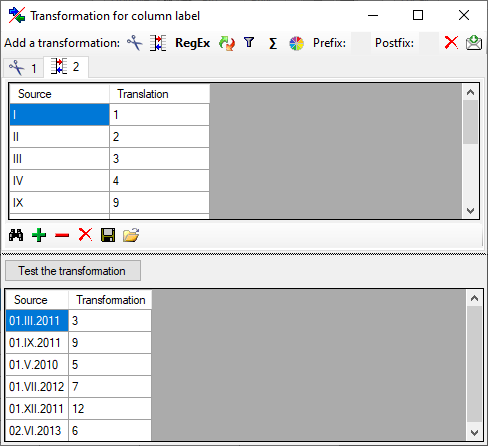

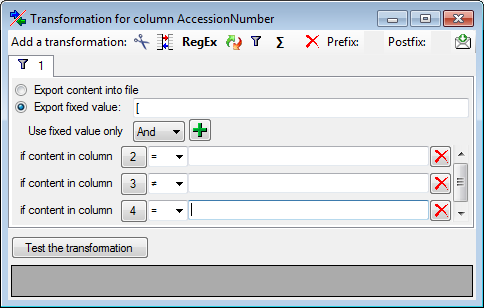

for fused columns. To remove a file column, use the  delete button. Pre- and postfixes for the columns can directly be entered in the corresponding fields. To apply transformations on the data click on the

delete button. Pre- and postfixes for the columns can directly be entered in the corresponding fields. To apply transformations on the data click on the  button.

button.

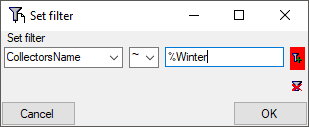

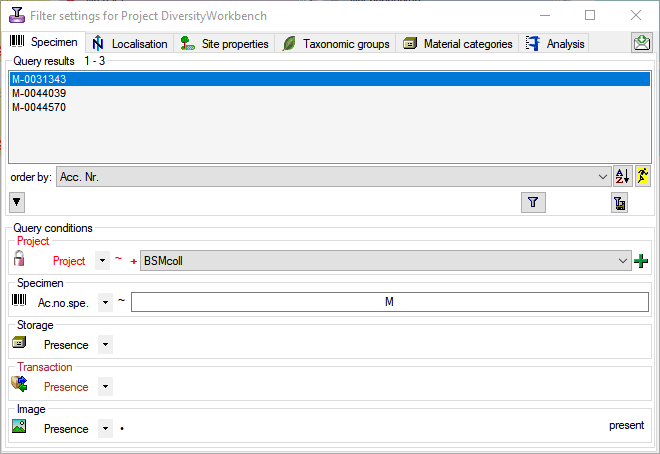

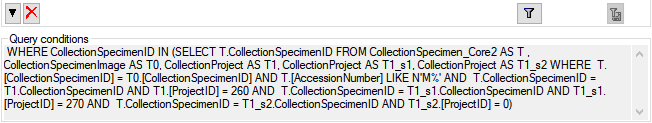

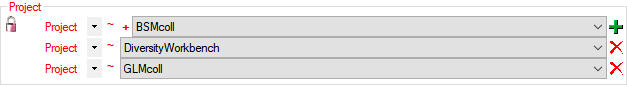

Filter

To filter the exported data, use the filter function. Click on the

To filter the exported data, use the filter function. Click on the

button and

enter the text for the filter. Only data matching the filter string will

be exported. If a filter is set, the

button and

enter the text for the filter. Only data matching the filter string will

be exported. If a filter is set, the

button will have a red background to remind you of the filter. The

filter may be set for any number of columns you need for the restriction

of the exported data.

button will have a red background to remind you of the filter. The

filter may be set for any number of columns you need for the restriction

of the exported data.

Rowfilter

This filter in contrast to the filter above strictly applies to the row

according to the sequence of the data. For an explanation see a short

tutorial

This filter in contrast to the filter above strictly applies to the row

according to the sequence of the data. For an explanation see a short

tutorial

.

.

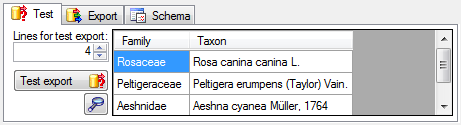

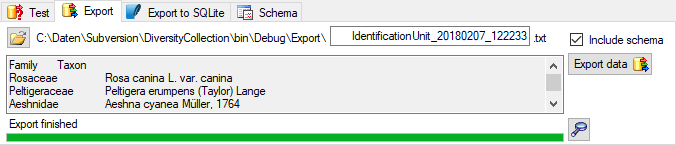

Test

To test the export choose the

To test the export choose the  Test tab,

set the number of lines that should be included in the test and click on

the Test export

Test tab,

set the number of lines that should be included in the test and click on

the Test export  button. To inspect the

result in a separate window, click on the

button. To inspect the

result in a separate window, click on the  button.

button.

SQL

If you want to inspect the SQL commands created during the test check this option. To see the generated SQL click on the SQL button after the Test export. A window containing all commands including their corresponding tables will be shown.

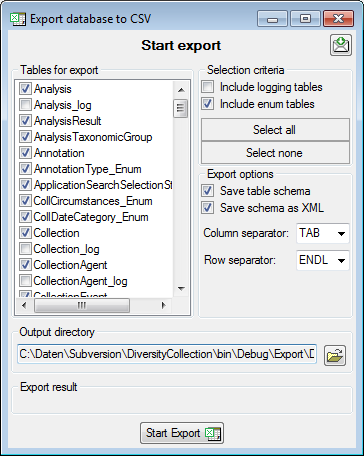

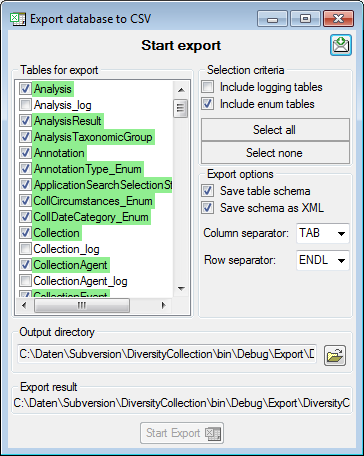

Export

To export your data to a file, choose the

To export your data to a file, choose the  Export tab. If you want to store the file in different place use the

Export tab. If you want to store the file in different place use the

button to choose the directory and edit the name of

the file if necessary. Check the

button to choose the directory and edit the name of

the file if necessary. Check the  include a schema option if you want to save a schema together with your

export. To start the export, click on the Export data

include a schema option if you want to save a schema together with your

export. To start the export, click on the Export data

button. To open the exported file, use the

button. To open the exported file, use the  button.

button.

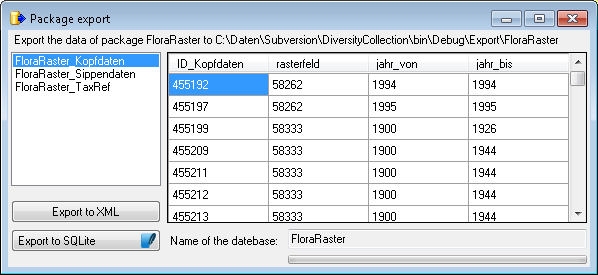

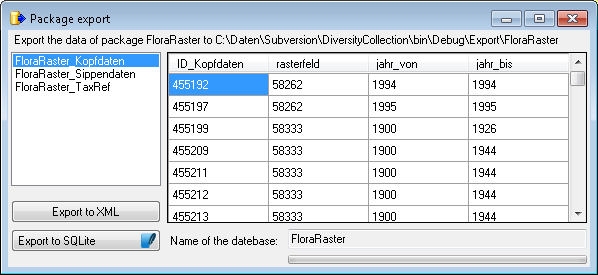

Export to SQLite

To export your data into a SQLite

database, choose the

To export your data into a SQLite

database, choose the  Export to SQLite tab. You may change the preset name of the database in order to keep previous exports. Otherwise you overwrite previous exports with the same filename. To start the export, click on the Export data

Export to SQLite tab. You may change the preset name of the database in order to keep previous exports. Otherwise you overwrite previous exports with the same filename. To start the export, click on the Export data

button. To view the exported data, use the

button. To view the exported data, use the

button.

button.

Schema

To handle the settings of your export, choose the

To handle the settings of your export, choose the

Schema tab. To load a predefined schema,

click on the

Schema tab. To load a predefined schema,

click on the  open button. To reset the settings to the

default, click on the

open button. To reset the settings to the

default, click on the  undo button. To save the current

schema click on the

undo button. To save the current

schema click on the  save button. With the

save button. With the

button you can inspect the schema in a separate

window.

button you can inspect the schema in a separate

window.

Diversity Collection

Label

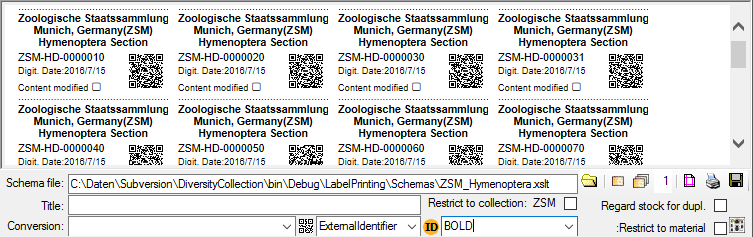

Select the printer  in the header menu to switch to

the printing mode. If your specimen contains more than one part, choose

the part of the specimen for which the label should be generated. The

sequence is shown in the image below and in a short tutorial

in the header menu to switch to

the printing mode. If your specimen contains more than one part, choose

the part of the specimen for which the label should be generated. The

sequence is shown in the image below and in a short tutorial

.

.

Additional information about a label is entered in the label section

(see image below). The title may contain a longer text that can be

displayed by switching from a combobox to a textbox using the

resp.

resp.  button to return to

the combobox. The data are stored in the table

CollectionSpecimen.

button to return to

the combobox. The data are stored in the table

CollectionSpecimen.

The organisms of a specimen are printed on a label according to the

display order.

Schema

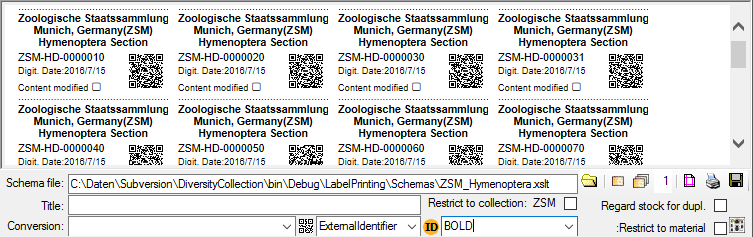

If you do not select a schema file, i.e. the textbox Schema file: is

empty, you will see the generated XML-file as shown in the image below.

The XML file is the base for all label types you wish to generate. To

create your own labels simply design your own XSLT-schema file

.

See e.g. http://www.w3.org/TR/xslt for further information about

schema files.

.

See e.g. http://www.w3.org/TR/xslt for further information about

schema files.

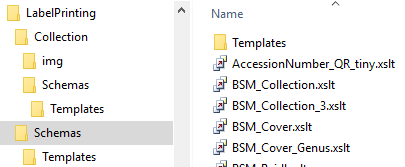

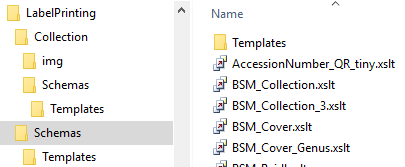

To print a label for a specimen you have to select a schema file. There

are default schema files available on github or in the folder

LabelPrinting/Schemas in your application directory (see below).

This is the default place to store schema files.

Click on the  open button to open the directory. You will

find several prepared schema files among which you can choose or change

them to your own needs or create new ones respectively. The schema file

/LabelPrinting/Schemas/Templates/LabelTemplates.xslt provides

templates for the other schema files. If this file is missing the

generation of the label may fail and you will get a warning. You may

give a title for the print in the field Title. From the Collection and

MaterialCategory available for the selected specimen choose one from the

list (Coll./Mat.). To generate the label for the current specimen

click on the

open button to open the directory. You will

find several prepared schema files among which you can choose or change

them to your own needs or create new ones respectively. The schema file

/LabelPrinting/Schemas/Templates/LabelTemplates.xslt provides

templates for the other schema files. If this file is missing the

generation of the label may fail and you will get a warning. You may

give a title for the print in the field Title. From the Collection and

MaterialCategory available for the selected specimen choose one from the

list (Coll./Mat.). To generate the label for the current specimen

click on the  button. To generate labels for all

specimens selected in your query click on the

button. To generate labels for all

specimens selected in your query click on the

button (you may use the

button (you may use the

button to select all specimens). If you need

duplicates of your labels change the number in the duplicates box

button to select all specimens). If you need

duplicates of your labels change the number in the duplicates box

to the desired value. You can print 1 - 99

duplicates of one label. If there are more than 20 specimens in the

list, you receive a warning whether you really wish to create all these

labels as this could be somewhat time consuming. The labels are

generated as XML files with XSLT-schema files, transformed to HTML-files

and depicted in a browser. To print the label click on

the

to the desired value. You can print 1 - 99

duplicates of one label. If there are more than 20 specimens in the

list, you receive a warning whether you really wish to create all these

labels as this could be somewhat time consuming. The labels are

generated as XML files with XSLT-schema files, transformed to HTML-files

and depicted in a browser. To print the label click on

the  button.

button.

Duplicates

If you wish to print labels for duplicates which are stored in a

different collection, the duplicate should be a child of the original

specimen as shown in the example below.

Depending on the schema you use, the label will be marked as duplicate

and contain a reference to the original specimen (see below).

Save

If you wish to save the generated files for later printing, click on

the  button to do so. Note that the program will by

default create a file Label.xml and in case a schema file is

specified a file Label.htm in the LabelPrinting directory which

will be overwritten every time you generate a new label. Thus, you need

to save the file under a different name or in a different directory to

prevent the program to erase this data.

button to do so. Note that the program will by

default create a file Label.xml and in case a schema file is

specified a file Label.htm in the LabelPrinting directory which

will be overwritten every time you generate a new label. Thus, you need

to save the file under a different name or in a different directory to

prevent the program to erase this data.

If you wish to print labels for all the specimens in the specimen list,

you can restrict these to the collection and the material category of

the current specimen part (see image above).

Accession number

If you wish to reformat the accession number, you may choose among the

options provided by the program, e.g. conversion of arabic to roman

numbers (BSPG-1988-007-00001 → BSPG-1988-VII-00001). Select the format

from the combobox Conversion shown above.

Codes

For a short tutorial about the inclusion of codes in the label see a

short tutorial

.

.

Code 39

If you use  Code 39 for your labels and wish to print the barcodes on

the labels, you need the font

Code 39 for your labels and wish to print the barcodes on

the labels, you need the font  , which is included in

the DiversityCollection package. Place this font in the folder where

your fonts are stored (e.g.: C:\WINNT\Fonts). If the font is not

available, the barcode will appear as the accession number between two

'*' signs. If this font does not do the job you may try other fonts,

e.g. code 39 fromlogitogo.

Download the font, copy it into your fonts directory and adapt the xslt

file according to the line below:

, which is included in

the DiversityCollection package. Place this font in the folder where

your fonts are stored (e.g.: C:\WINNT\Fonts). If the font is not

available, the barcode will appear as the accession number between two

'*' signs. If this font does not do the job you may try other fonts,

e.g. code 39 fromlogitogo.

Download the font, copy it into your fonts directory and adapt the xslt

file according to the line below:

<xsl:variable name=\"FontBarcode\"\> font-size: 10pt; font-family:[Code-39-Logitogo]\</xsl:variable\>

QR-code

The QR-codes are restricted to labels for specimen parts. Select a part in the lower tree to enable the QR-codes.

To include a  QR-Code in the label, activate the QR-Code generator

QR-Code in the label, activate the QR-Code generator

and select the source for the QR code. For certain sources you can specify the type of the

source (see below).

and select the source for the QR code. For certain sources you can specify the type of the

source (see below).

- AccessionNumber

- CollectorsEventNumber

- DepositorsAccessionNumber

- ExternalIdentifier

- PartAccessionNumber

- StableIdentier

- StorageLocation

Depending on your XSLT-schema the QR code will

appear in your label (see below). By Default the QR-Codes are generated via a

an Api provided by the SNSB IT-Center using a python library, which is only available if you have access to the Internet.

The created QR-Code images will be stored in the folder LabelPrint/img with the filenames corresponding to the ID of the specimen part.

This folder will be cleared for every printout. So if you want to keep

the label you need to copy the created file Lable.html together with the

folder img.

You can change the default size and the default service for generating the QR-code. Use the context menu of the  QR-code button to open a window where you can enter either the size or the template of your preferred service as in the example shown below where the parameter {0} corresponds to the text that should be coded e.g. the accession number (as selected in the interface) and parameter {1} the size in pixel (as integer) for the QR code.

QR-code button to open a window where you can enter either the size or the template of your preferred service as in the example shown below where the parameter {0} corresponds to the text that should be coded e.g. the accession number (as selected in the interface) and parameter {1} the size in pixel (as integer) for the QR code.

https://services.snsb.info/qrcode/?size={1}&text={0}

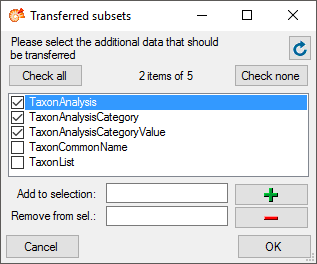

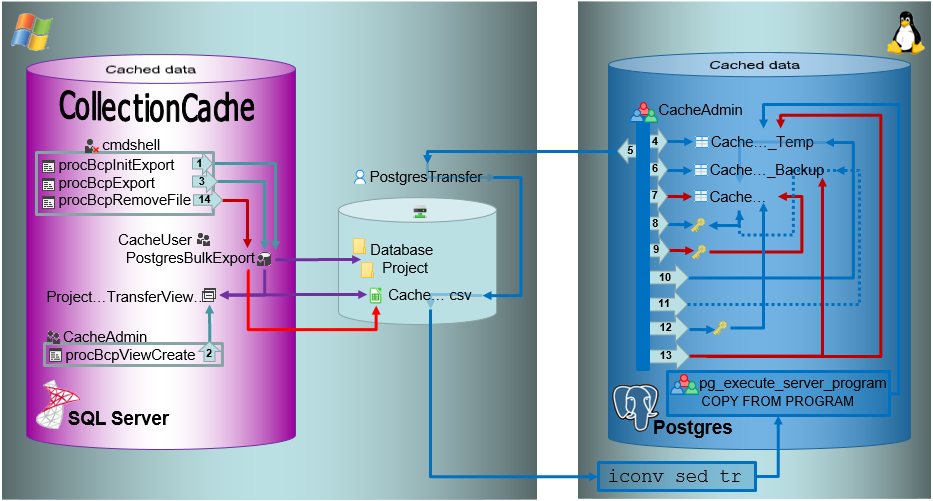

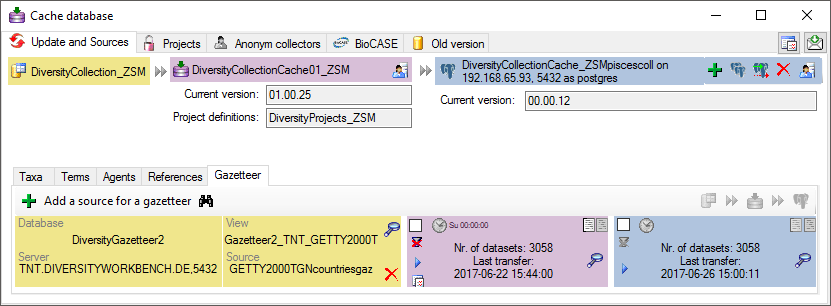

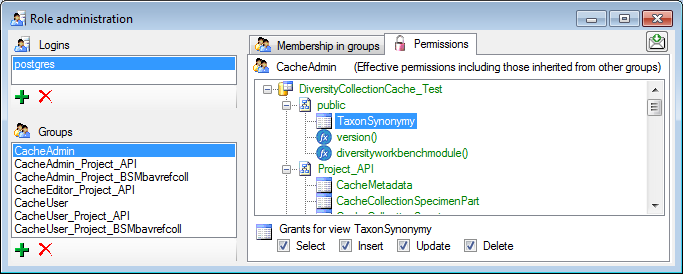

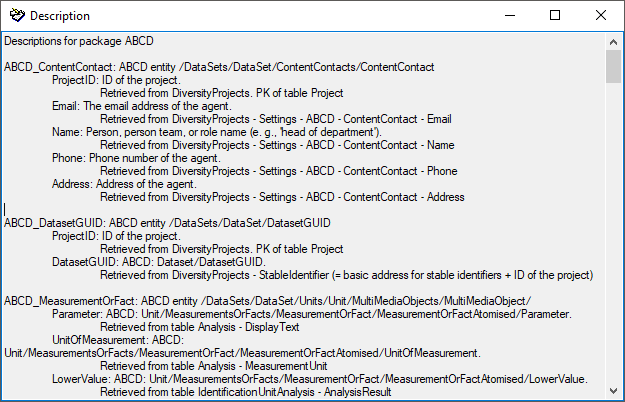

Subsections of Cache Database

Diversity Collection

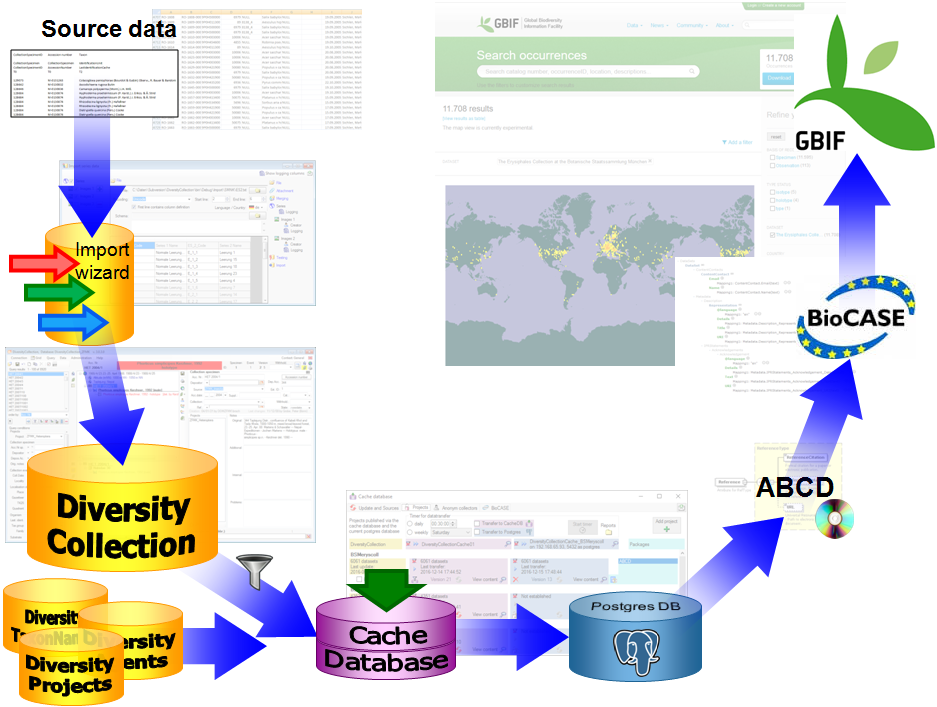

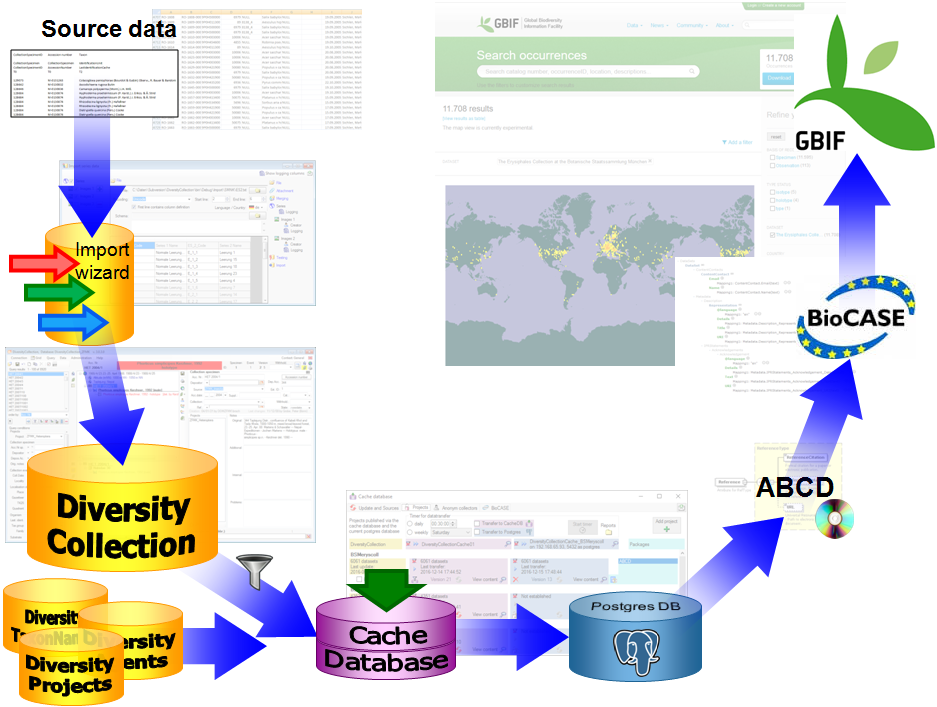

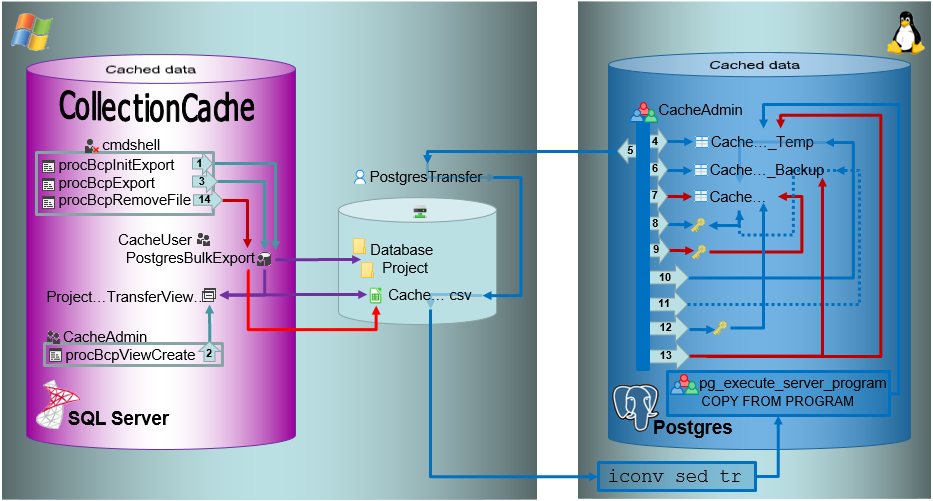

Data Flow

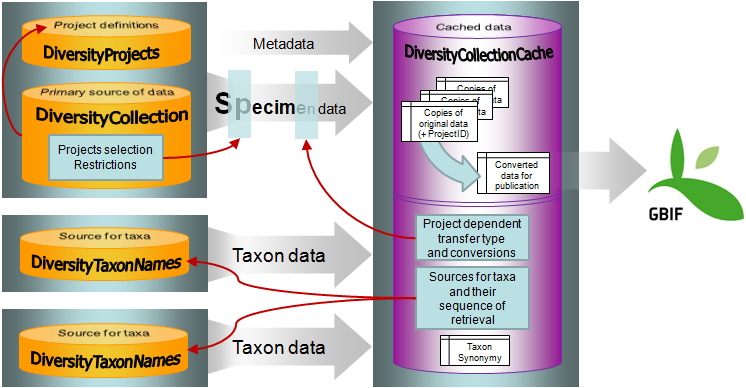

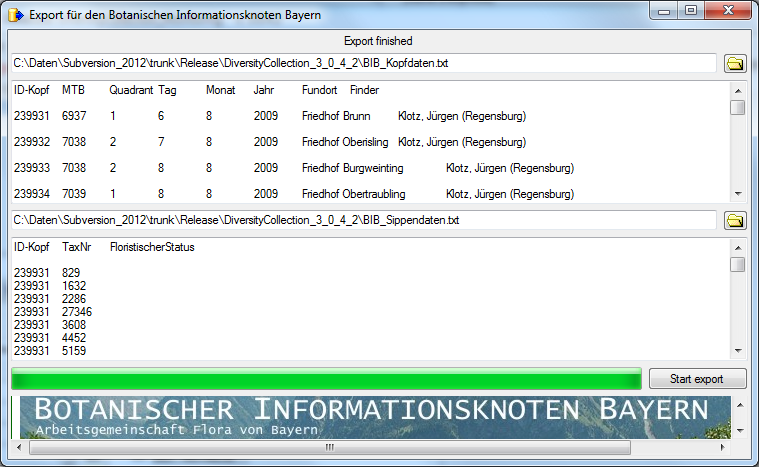

Import / Export - example for a data flow

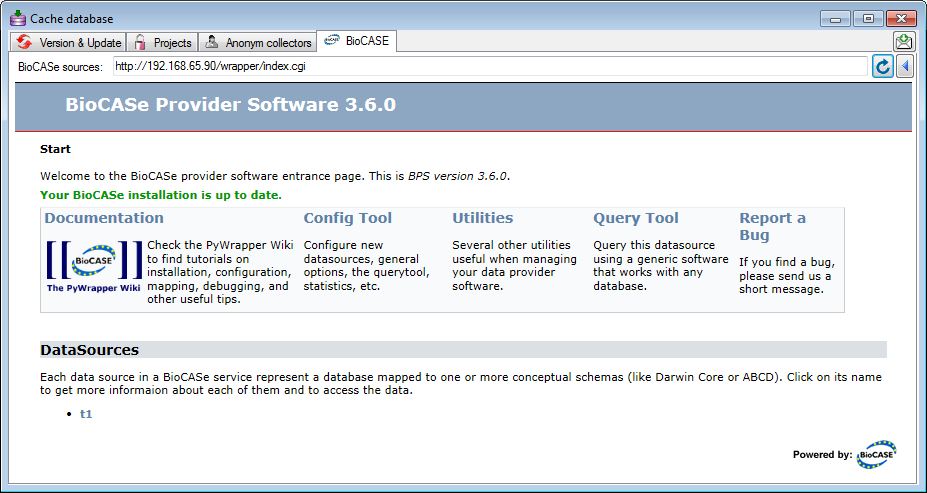

In the image below, an expample for a data flow from the original source

to the final GBIF-portal is shown. As a first step the data are imported

via the Import wizard  are imported into the

database. After the data are given free for

publication, they are transferred into the cachedatabase

are imported into the

database. After the data are given free for

publication, they are transferred into the cachedatabase  . From

there they are transferred into a Postgresdatabase

. From

there they are transferred into a Postgresdatabase  containing a package for conversion into

ABCD. Finally the

containing a package for conversion into

ABCD. Finally the  BioCASE tool for mapping the

data is used to provide the data for GBIF.

BioCASE tool for mapping the

data is used to provide the data for GBIF.

Cache Database

Tutorial

Basic steps for publication of data via the cache database

1 - Create the cache database

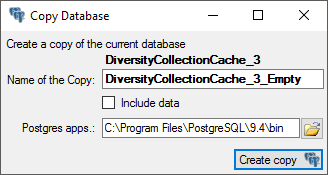

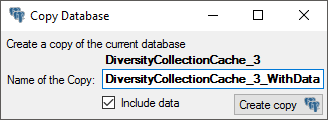

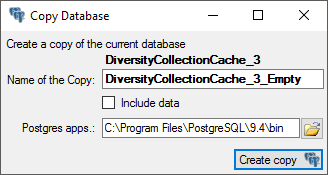

To create a cache database  as shown in a short tutorial

as shown in a short tutorial

and in the chapter Creation of the cachedatabase you need to be a system

administrator (s. Login administration).

After this step the cache database should be available and you can

create a Postgres database as final target of your data.

and in the chapter Creation of the cachedatabase you need to be a system

administrator (s. Login administration).

After this step the cache database should be available and you can

create a Postgres database as final target of your data.

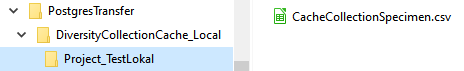

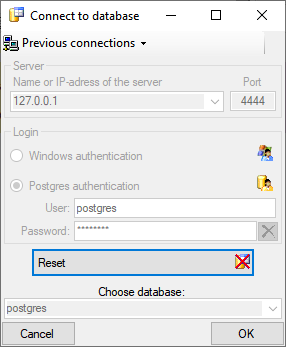

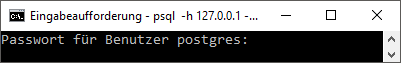

2 - Create a Postgres database

The final formatting of the data e.g. for publication via webservice are

performed in a  Postgres database. If no server providing Postgres is

available, you may install Postgres on your local machine (see

https://www.postgresql.org/ for further information). The creation and

administration of a Postgres database is described in a short tutorial

Postgres database. If no server providing Postgres is

available, you may install Postgres on your local machine (see

https://www.postgresql.org/ for further information). The creation and

administration of a Postgres database is described in a short tutorial

and in chapter Administration of the Postgres cachedatabases.

and in chapter Administration of the Postgres cachedatabases.

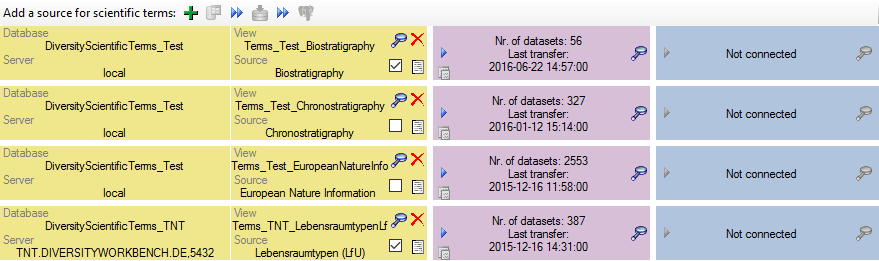

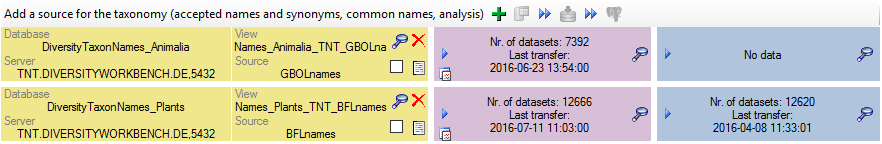

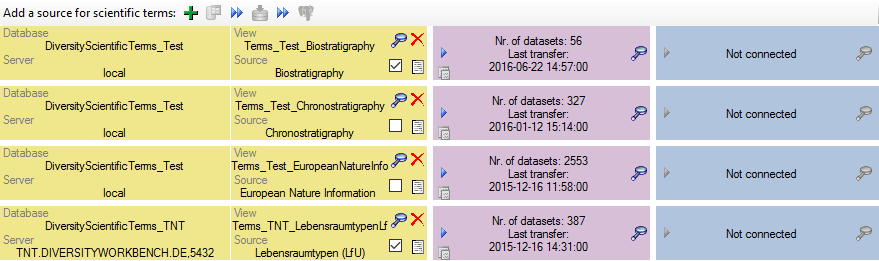

3 - Insert sources for taxonomic names, scientific terms, agents etc.

This step is optional and depends upon the availability of a source for

e.g. taxonomic names. You may either use sources from your local server

or the public available sources provided by tnt.diversityworkbench.de

(turn to http://www.snsb.info for further information). For a

introduction see a short tutorial

.

The needed settings are described in chapter Sources from othermodules.

.

The needed settings are described in chapter Sources from othermodules.

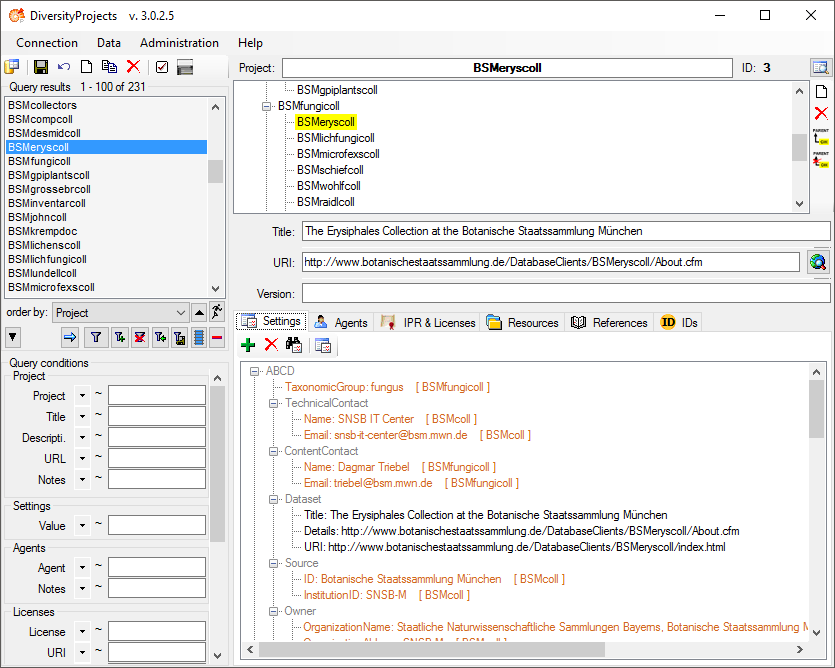

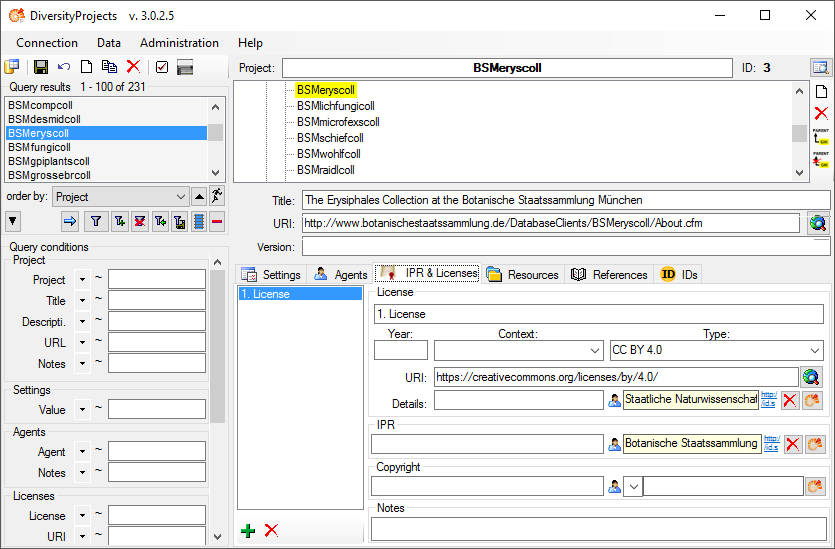

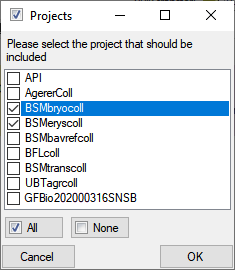

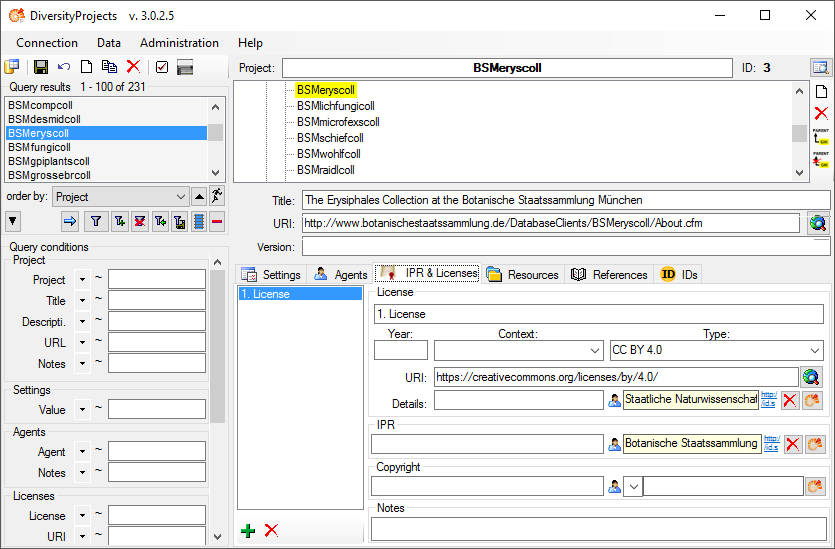

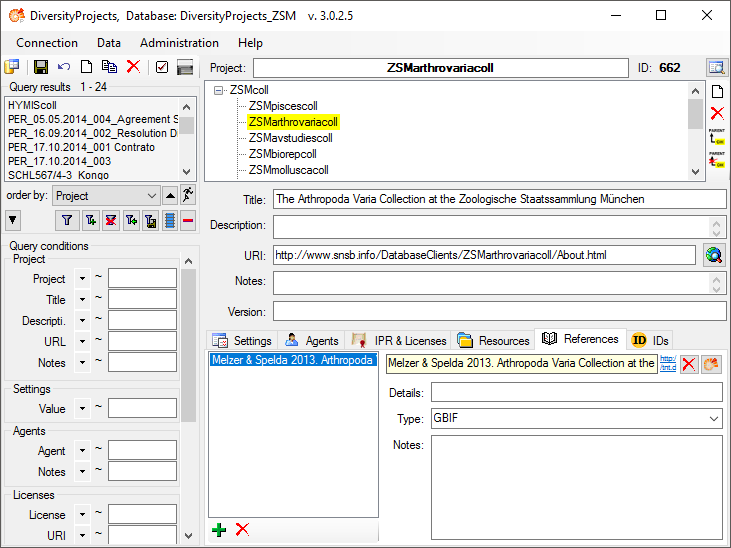

4 - Insert a project

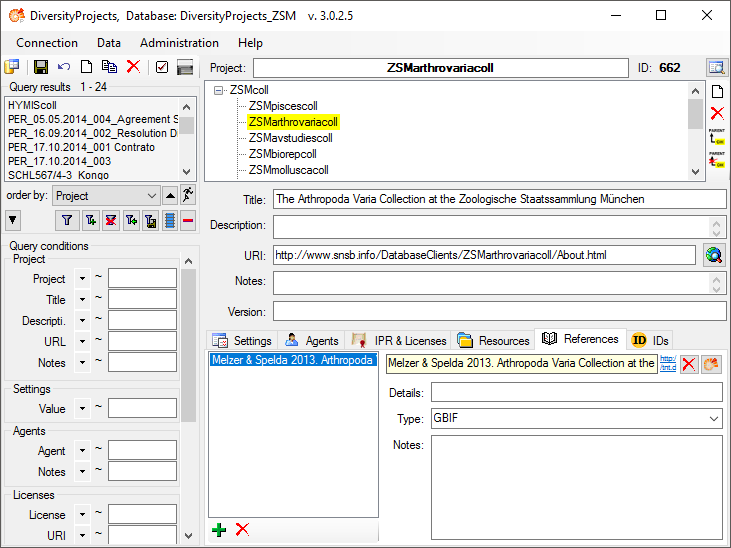

The data published in the cache database are organized according to the  projects. Add a project as shown in a short tutorial

projects. Add a project as shown in a short tutorial

and described in chapter Projects in the cachedatabase. In the source database, make sure

that the data within this project are not withheld from publication (see

chapter Availability of data sets for more

details) and that the ranges you want to publish are set properly (see

chapter Restrictions for the datatransfer into the cachedatabase).

and described in chapter Projects in the cachedatabase. In the source database, make sure

that the data within this project are not withheld from publication (see

chapter Availability of data sets for more

details) and that the ranges you want to publish are set properly (see

chapter Restrictions for the datatransfer into the cachedatabase).

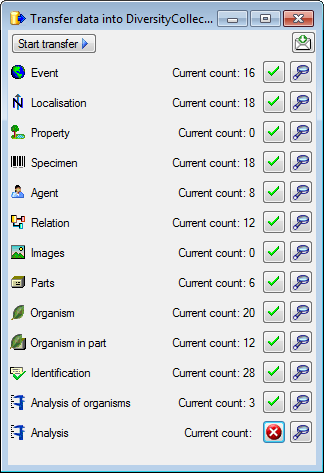

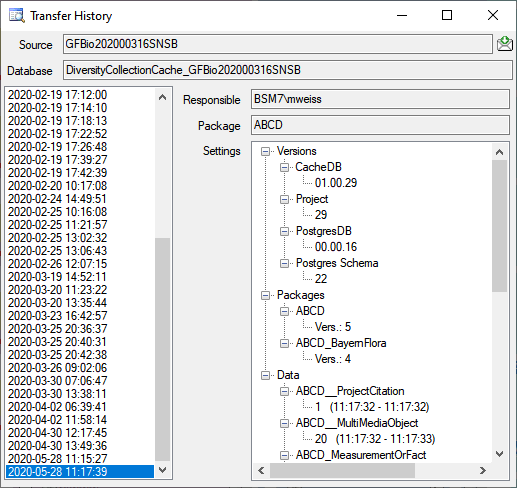

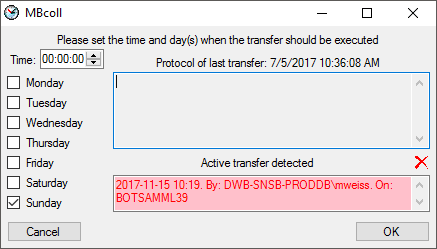

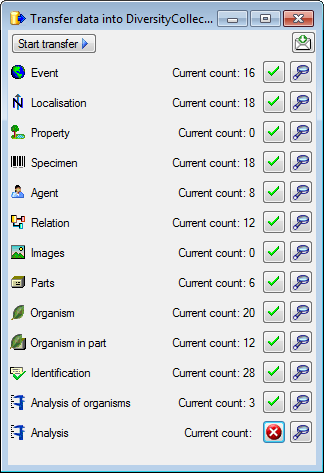

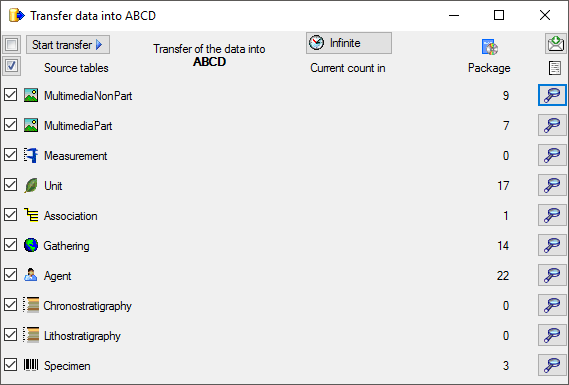

5 - Transfer the data

The final  transfer of the data is described in chapter Sources for

other modules and chapter Transfer of the

data.

transfer of the data is described in chapter Sources for

other modules and chapter Transfer of the

data.

6 - Publish

Publish  or export

or export  the data

the data

To export the data or prepare them for publication according to the

specifications of webservices etc. the data frequently need to be

formatted. This is done with packages as described in chapter

Administration of the Packages.

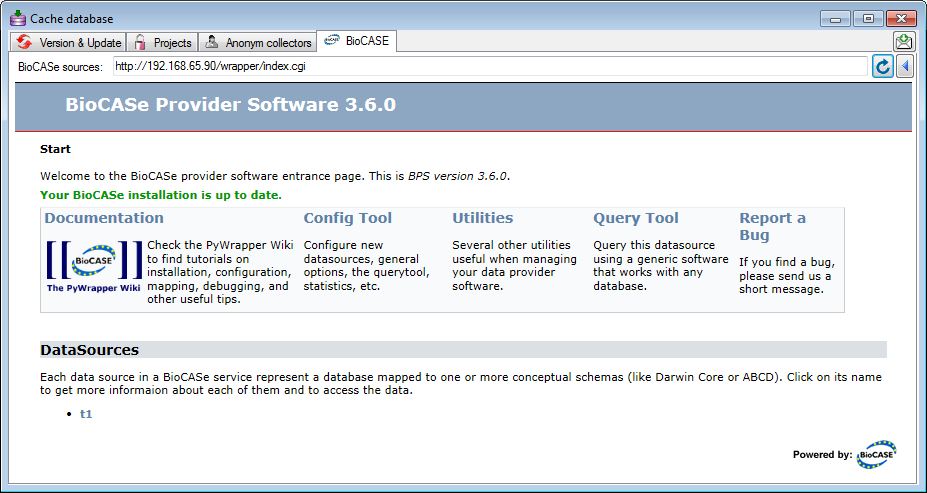

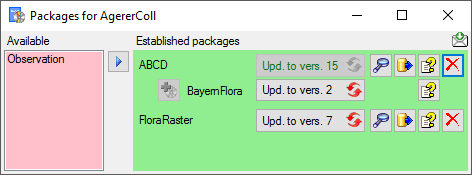

7 - BioCASe

Map data via  BioCASe (only for ABCD consuming publishers like GBIF)

BioCASe (only for ABCD consuming publishers like GBIF)

For publishers using ABCD like GBIF, use the  BioCASe provider software and mapping tool to

link the data formatted with the ABCDpackage.

BioCASe provider software and mapping tool to

link the data formatted with the ABCDpackage.

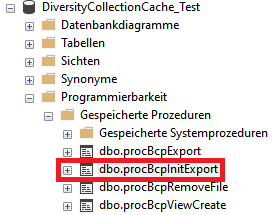

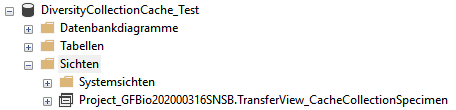

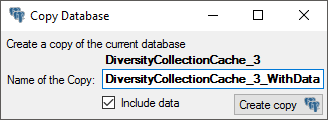

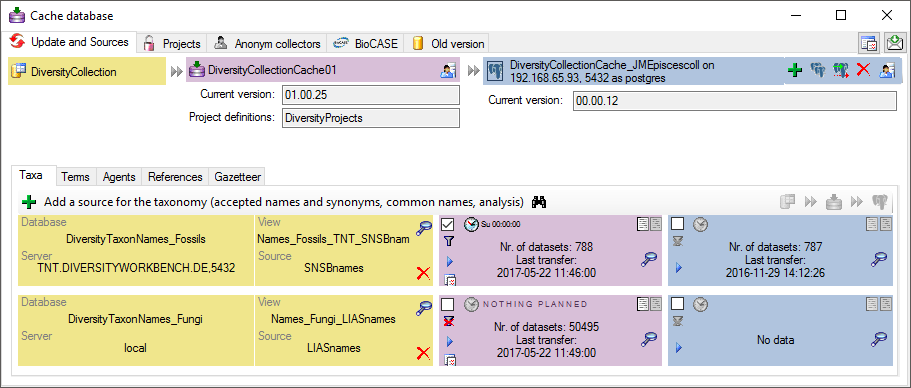

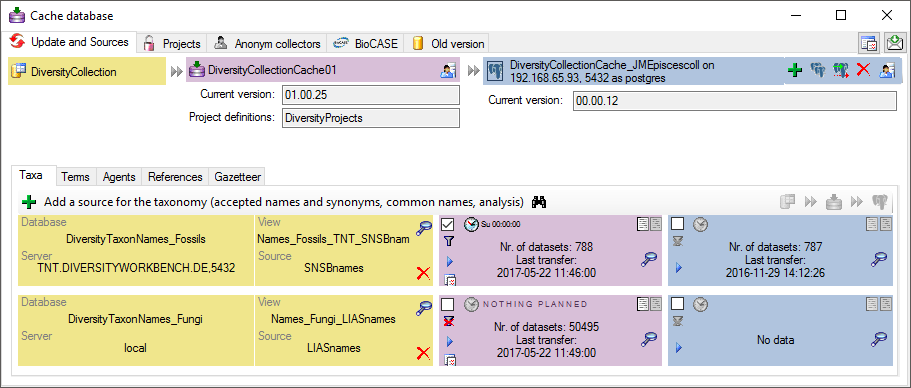

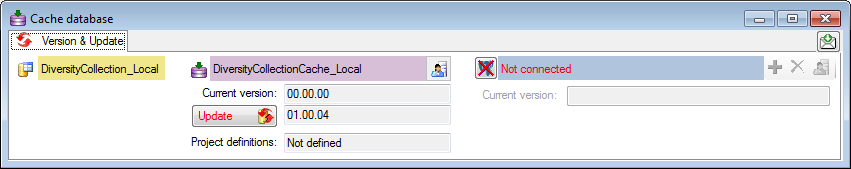

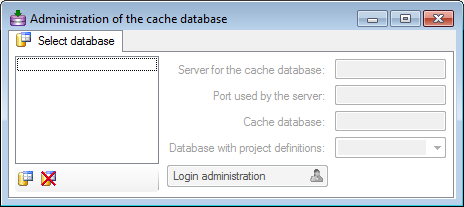

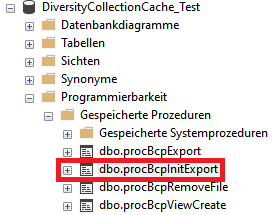

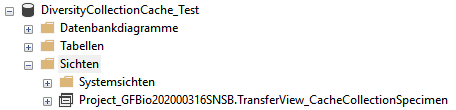

Create Cache Database

Creation of the cache database

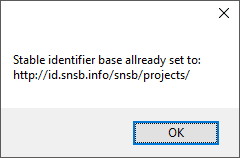

To create a cache database you need to be a system administrator (s.

Login administration). To create the cache

database, choose  Data - Cache database

... from the menu. If so far no cache database exists, you will be

asked if a new one should be generated. Next you have to select the

corresponding DiversityProjects database placed on the same server. If

the stable identifier has not been defined in this DiversityProjects

database, you get a message, that this has to be done first. Please see

the manual of DiversityProjects for details. Next you have to select the

corresponding DiversityAgents database placed on the same server.

Finally you are asked for the name of the Cachedatabase. We recommend to

accept the suggestion shown in the dialog. After the generation of the

cache database a window as shown below will open. For an introduction

see a short tutorial

Data - Cache database

... from the menu. If so far no cache database exists, you will be

asked if a new one should be generated. Next you have to select the

corresponding DiversityProjects database placed on the same server. If

the stable identifier has not been defined in this DiversityProjects

database, you get a message, that this has to be done first. Please see

the manual of DiversityProjects for details. Next you have to select the

corresponding DiversityAgents database placed on the same server.

Finally you are asked for the name of the Cachedatabase. We recommend to

accept the suggestion shown in the dialog. After the generation of the

cache database a window as shown below will open. For an introduction

see a short tutorial

.

.

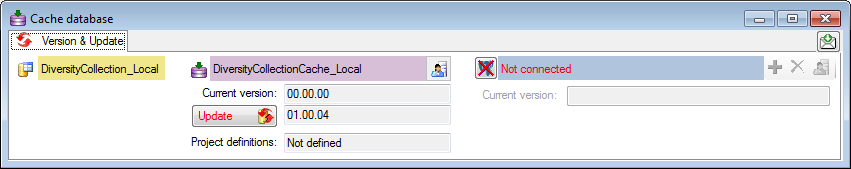

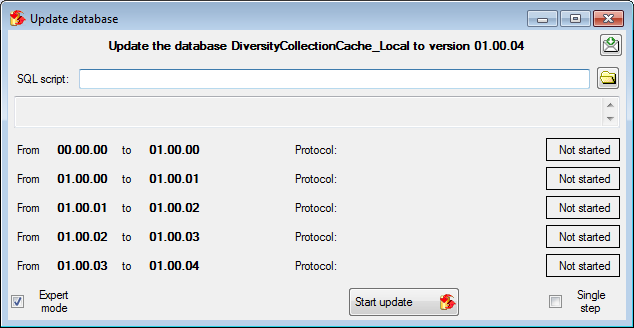

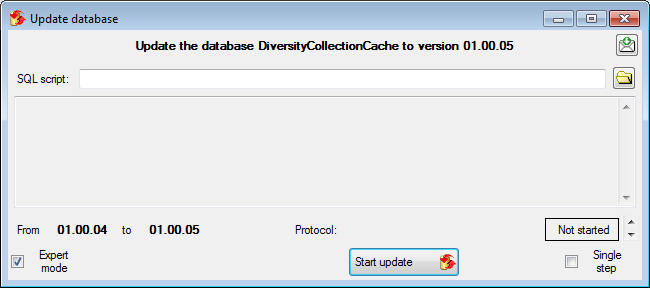

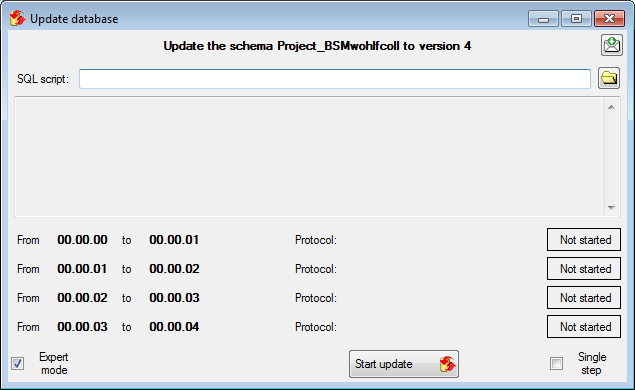

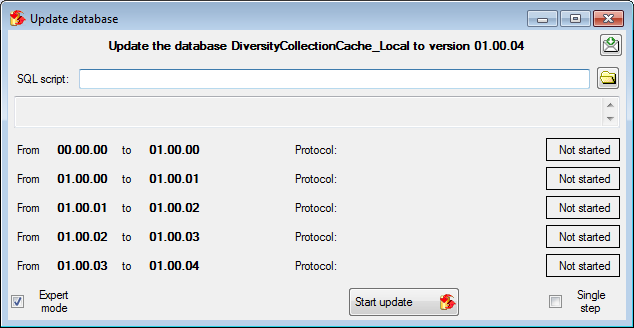

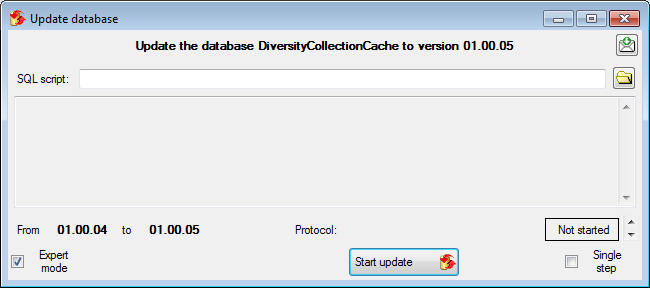

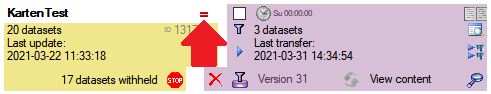

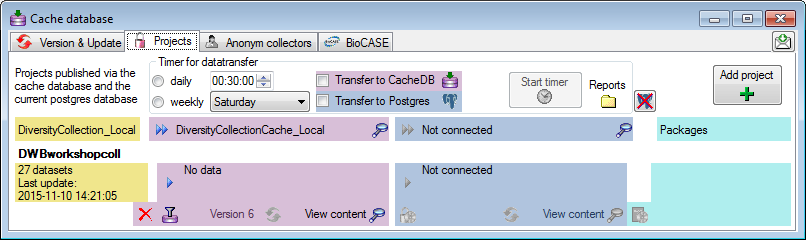

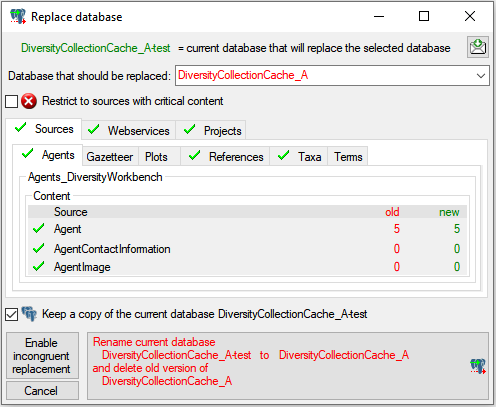

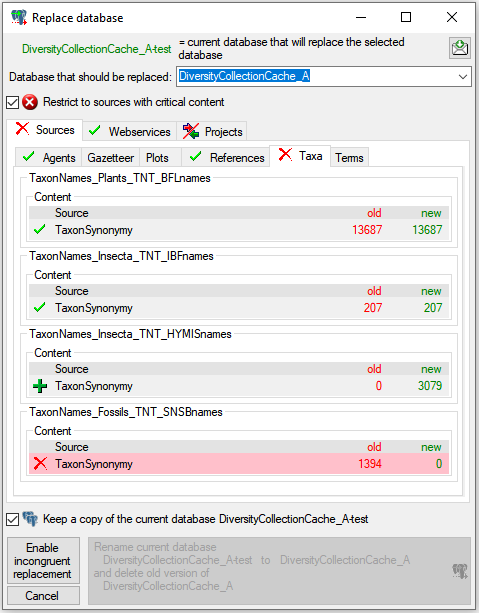

Click on the Update  button to update

the database to the latest version. A window as shown below will open.

Click on Start update

button to update

the database to the latest version. A window as shown below will open.

Click on Start update  to execute all

the scripts needed for the latest version of the database.

to execute all

the scripts needed for the latest version of the database.

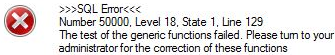

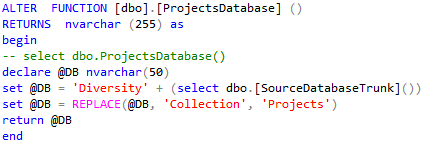

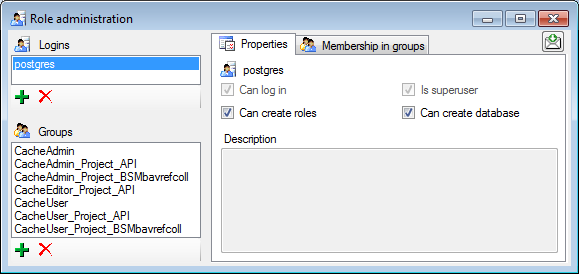

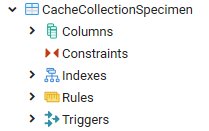

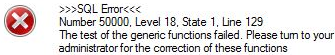

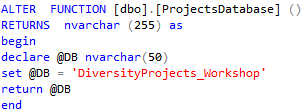

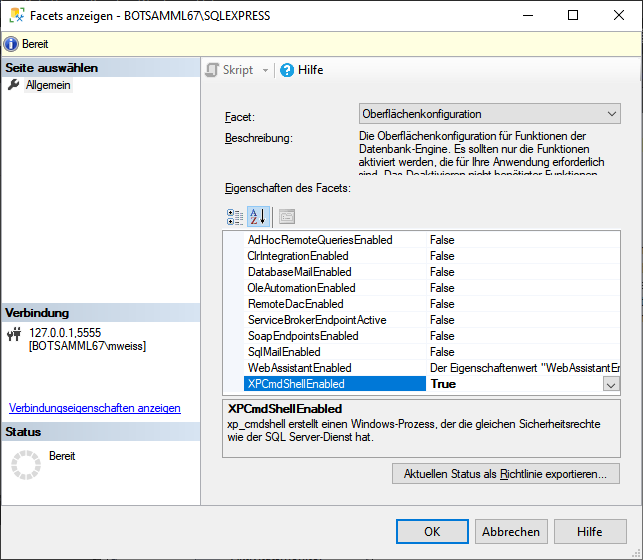

During the update you may encounter under certain circumstances the

message that the test of the generic functions failed (see below).

The most probable reason for this is that the name of your projects

database does not correspond to the specifications of the update-script.

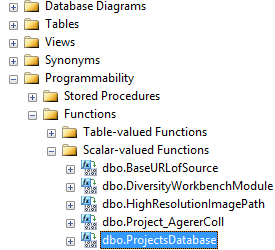

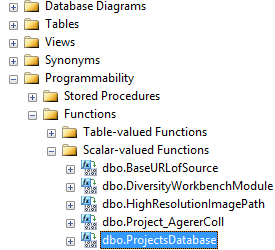

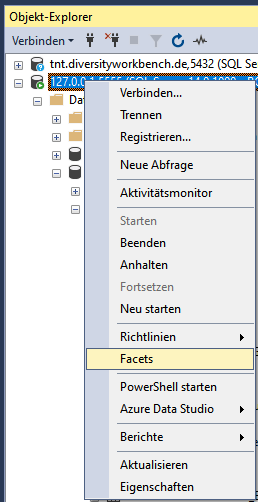

This error can easily be fixed. As an administrator use the Microsoft

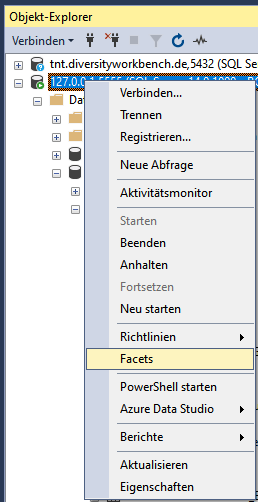

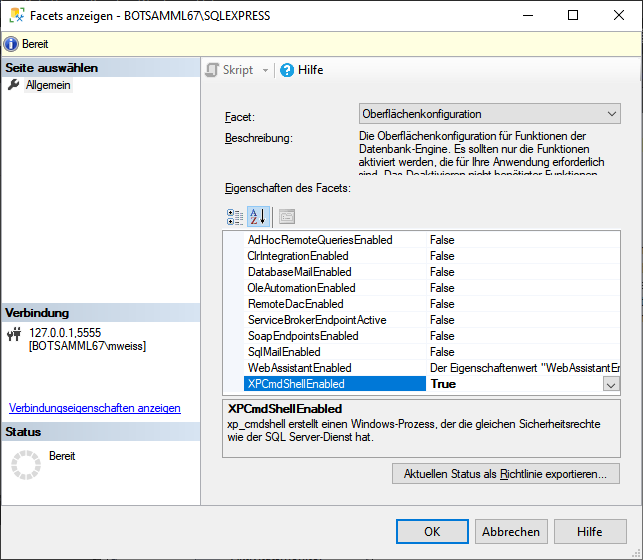

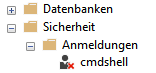

SQL Server Management Studio. In the directory of your cache database

select the Scalar-valued Function

dbo.ProjectsDatabase()

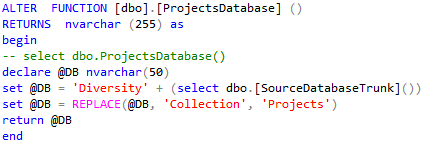

... and use Modify from the context menu. In the Code of the function

(see below) change the last set @DB = ... to the real name of your

projects database.

The result may look as shown below. After the change, press F5 to

execute the script.

After the function returns the correct name of the ProjectsDatabase, the

update script should proceed without further error messages.

Cache Database

Logins

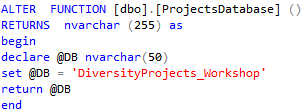

Cache database - User administration

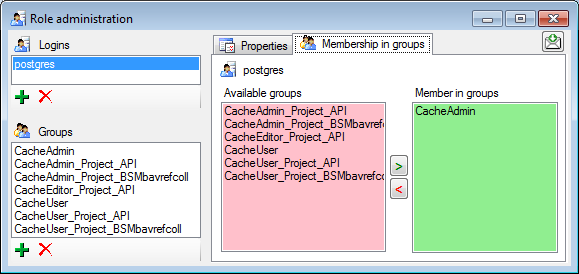

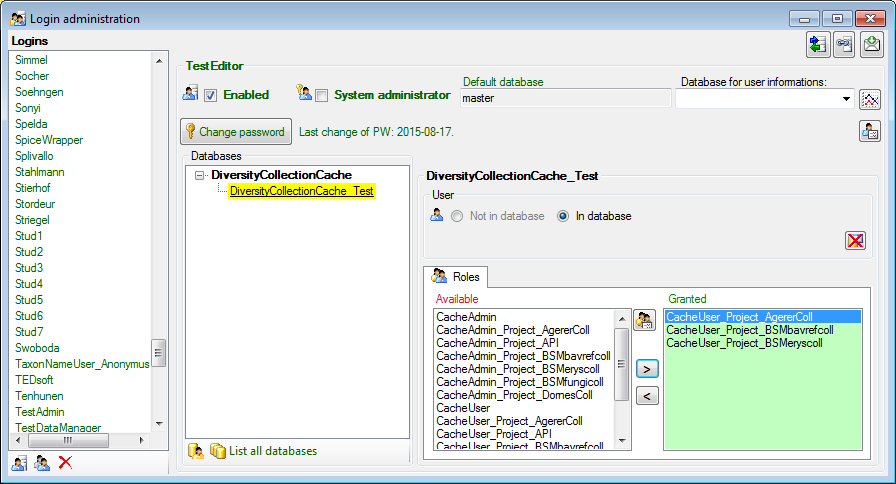

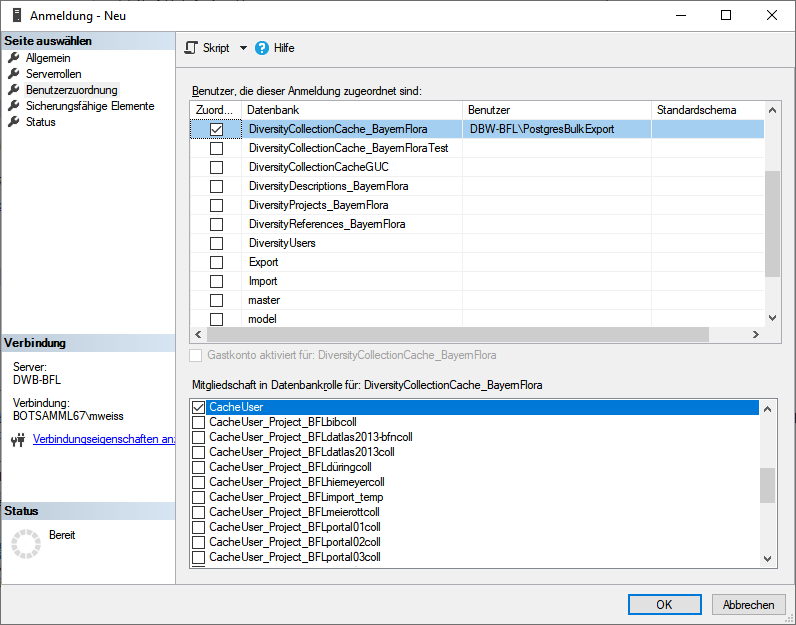

There are 2 roles in the cache database with a general access to the

data: CacheAdmin (for the administration and transfer of the data) and

CacheUser (with read only access).

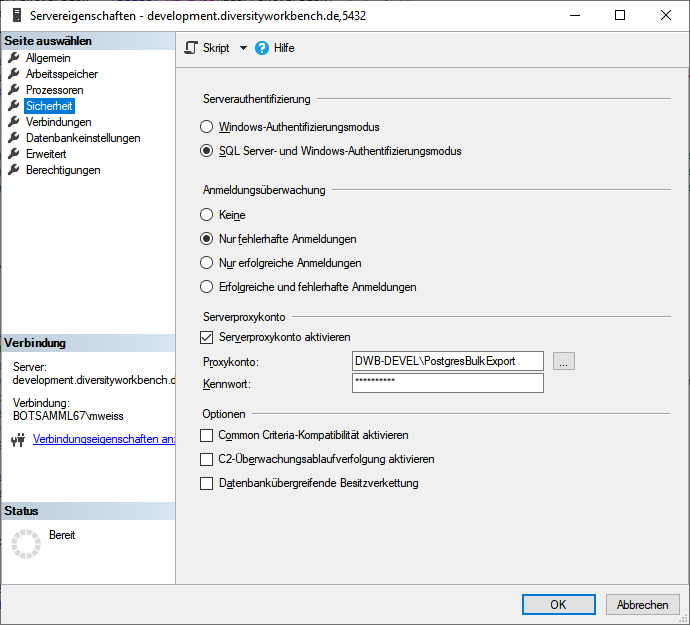

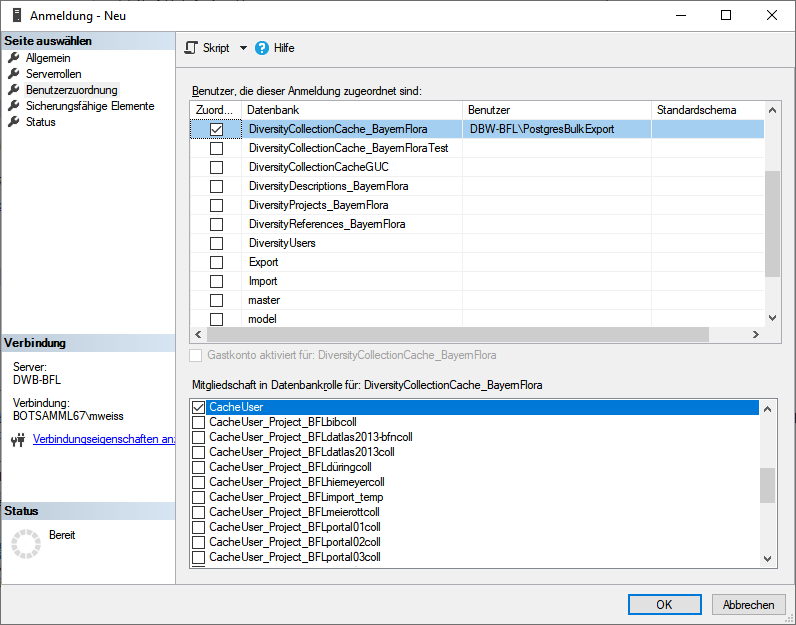

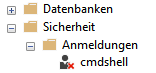

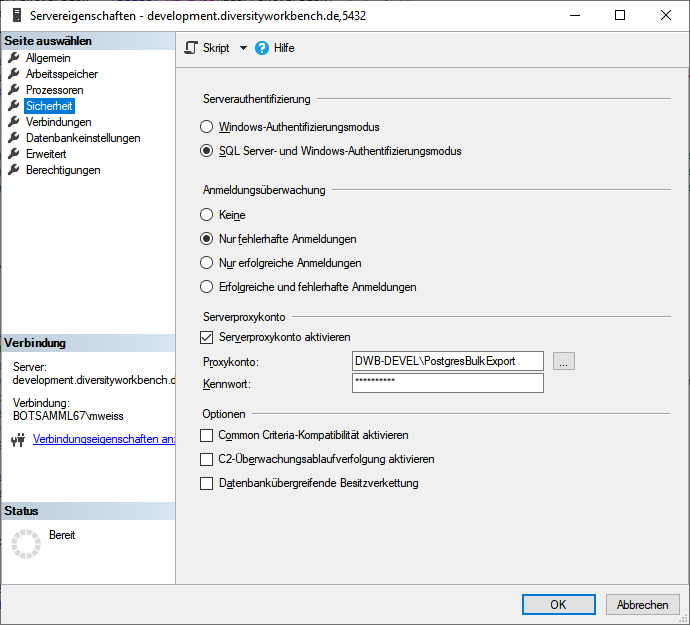

To administrate the logins in the  SQL-Server

database, click on the

SQL-Server

database, click on the  button to open a window

as shown below. To administrate the access for other logins, you have to

be a System administator. For further details please see the chapter

about the login administration for the main database.

button to open a window

as shown below. To administrate the access for other logins, you have to

be a System administator. For further details please see the chapter

about the login administration for the main database.

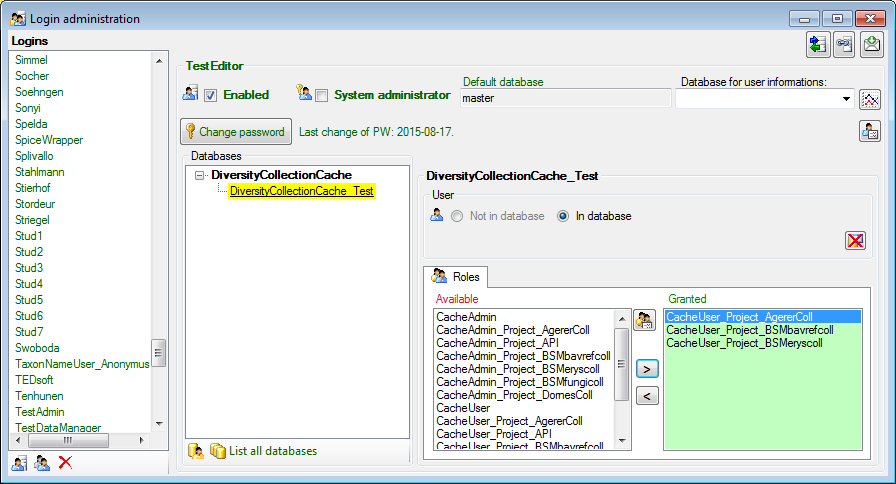

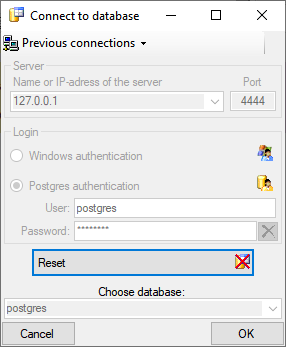

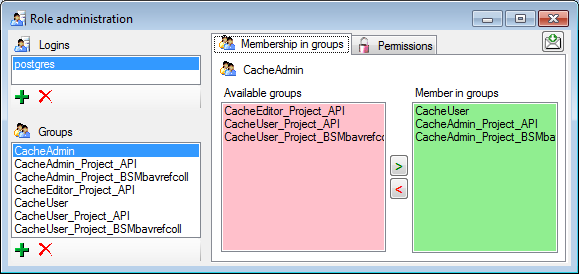

Postgres database

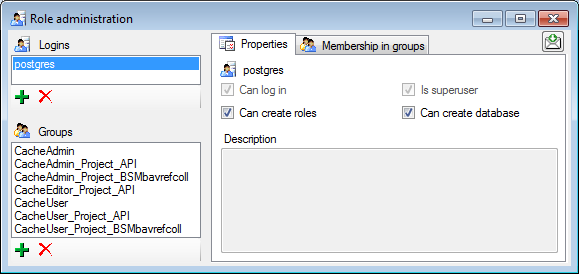

To handle the logins and user groups on the  Postgres database server,

click on the

Postgres database server,

click on the  button. A window as shown below

will open, where you can create and delete logins and groups. For the

logins you can change their membership in groups and their properties

(see below). On the left you find 2 lists, with the upper list

containing the logins and the list below with the groups resp. roles.

For the logins you can set the general properties as shown below. The

login postgres is created with the installation of the database and is

the login for the administration of the database including the updates

etc. For details about predefined properties like Is superuser, please turn to the Postgresdocumentation.

button. A window as shown below

will open, where you can create and delete logins and groups. For the

logins you can change their membership in groups and their properties

(see below). On the left you find 2 lists, with the upper list

containing the logins and the list below with the groups resp. roles.

For the logins you can set the general properties as shown below. The

login postgres is created with the installation of the database and is

the login for the administration of the database including the updates

etc. For details about predefined properties like Is superuser, please turn to the Postgresdocumentation.

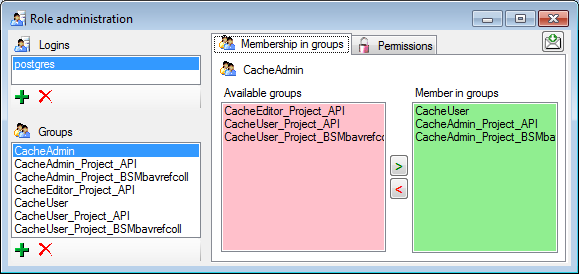

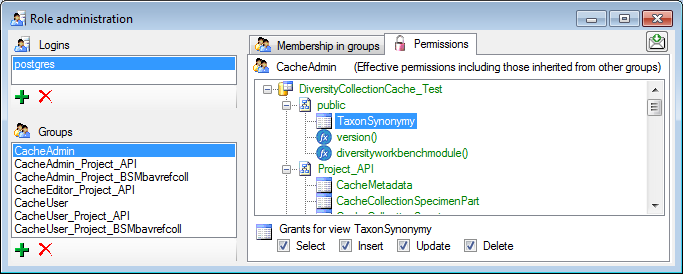

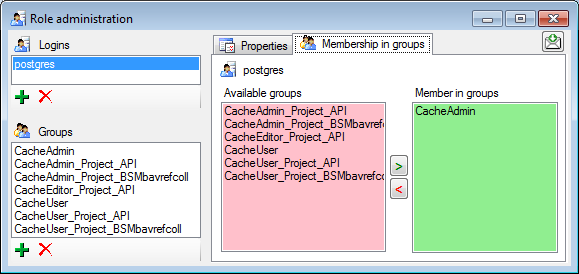

In the Membership in groups area you can define the groups in which

the login is a member (see below).

For the groups you can change their membership in other groups and their

permissions (see below).

Cache Database

Configuratation

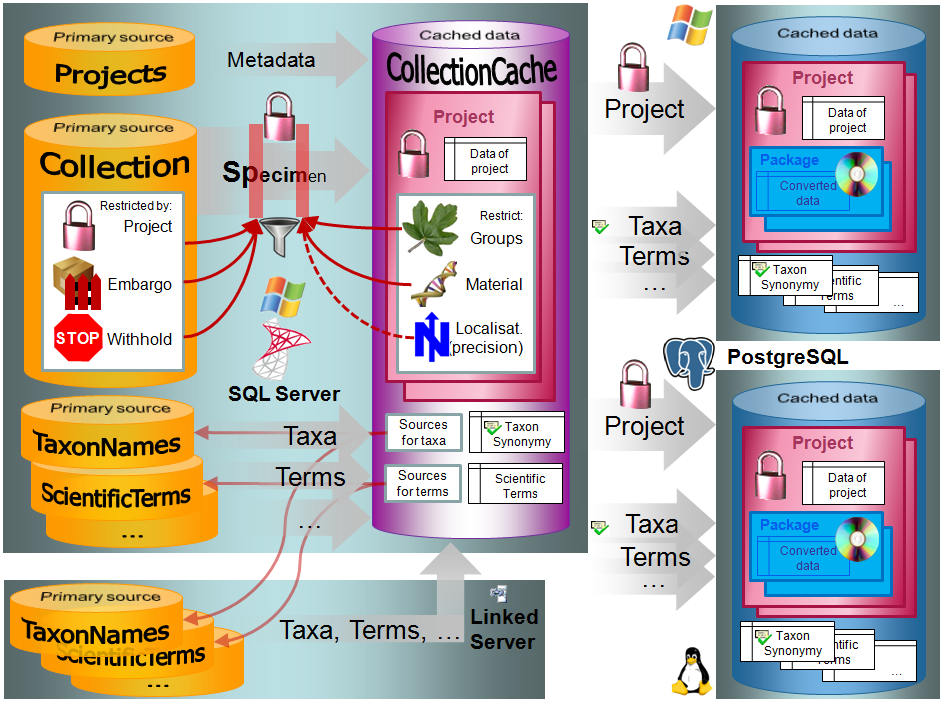

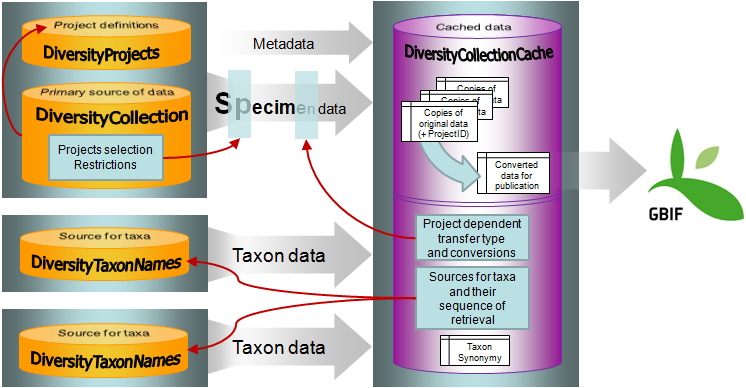

The cache databases for DiversityCollection are designed as sources for

preformated data for publication in e.g. public user portals like

GBIF. There may be several cache databases which

can be located on several servers. The restrictions of the published

data are defined in the main database via projects,

data withholding and

embargos. The publication of the data is

allways related to a project, defined in DiversityProjects, holding the

metadata that will be transfered into the cache database. Therefore

every dataset copied from the source into the cache database contains a

reference to the project (ProjectID). The publication of the data

includes several steps:

- Setting of restrictions within the original data with data withholding

- Selection of the project

- Transfer of the data into the cache database

- Conversion of the data into the format required by the portal

In Addition to the data transfered from DiversityCollection, the data

for the taxonomy has to be transfered from the relevant sources. The

links to these sources and the project dependent retrieval are stored in

the cache database.

The image below gives an overview for the process described above.

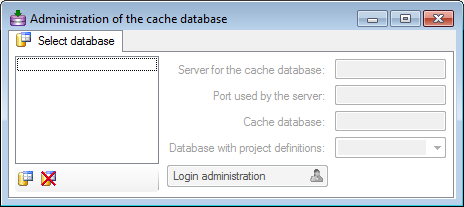

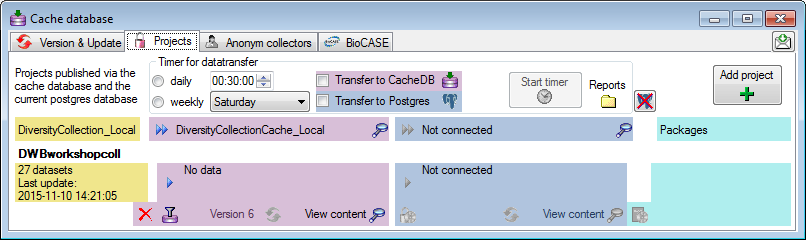

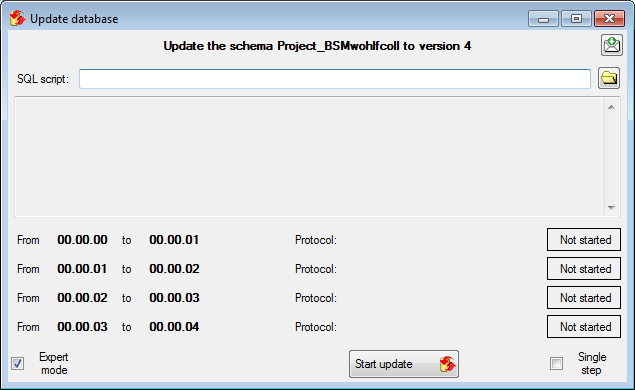

To configure your cache databases, choose Administration →

Cache database from the menu. A window will

open as shown below.

Cache database from the menu. A window will

open as shown below.

Creation of a cache database

If no cache database has been defined so far, use the

button to create a new cache database. You

have to be a System administrator to be able

to create a cache database. You will be asked for the server,

the port used by the server, the directory of the

database files, the name of the new cache database and

finally the name of the projects database where the metadata of the

projects transfered into the cache database are stored.

button to create a new cache database. You

have to be a System administrator to be able

to create a cache database. You will be asked for the server,

the port used by the server, the directory of the

database files, the name of the new cache database and

finally the name of the projects database where the metadata of the

projects transfered into the cache database are stored.

To delete a once created cache database, use the

button.

button.

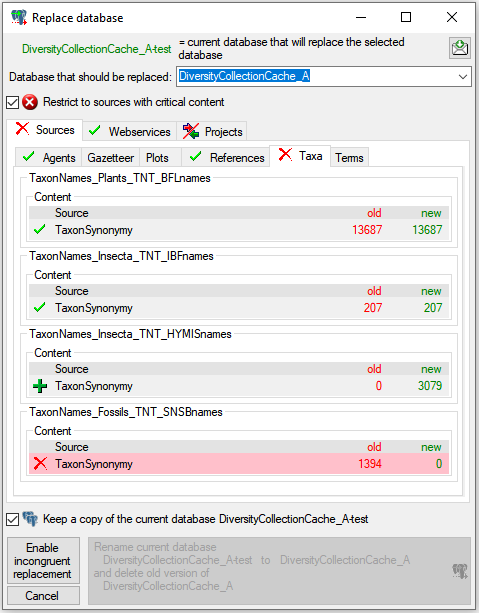

Updates of the cache database

After the new cache database has been created or if you select an

outdated cache database, a button Update database

will appear, instructing you to run updates

for the cache database. Click on the button to open a window as shown

below.

will appear, instructing you to run updates

for the cache database. Click on the button to open a window as shown

below.

All update scripts for the database will be listed. Click on the Start update  button to update the database to the

current version.

button to update the database to the

current version.

Login administration

To handle the data for the cache database a user needs access to the

data on the source database, the cache database,

the project database and the taxon databases. To

administrate the users that can transfer data into the cache database

use the button  Login administration. For

details see the chapter Loginadministration.

Login administration. For

details see the chapter Loginadministration.

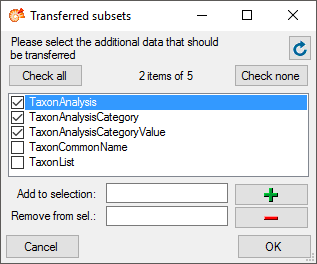

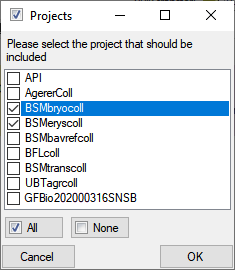

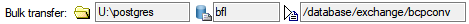

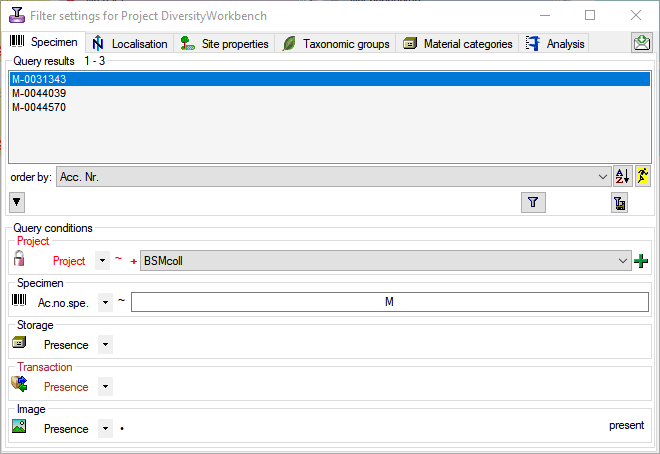

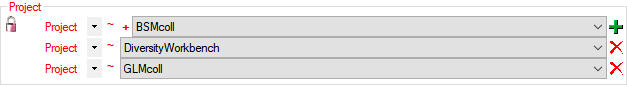

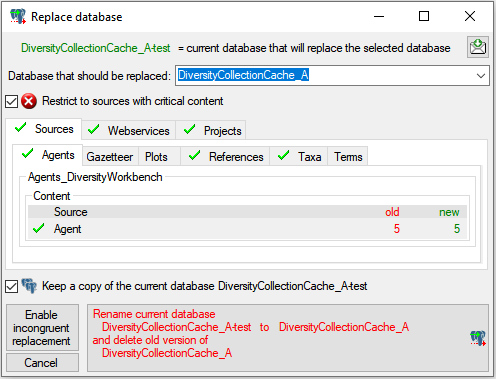

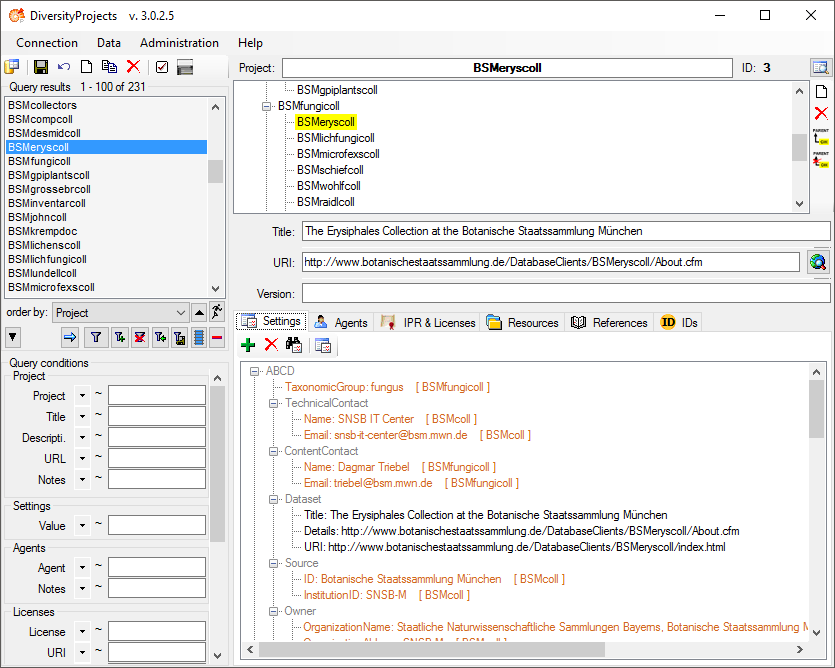

Configuration, Projects

Data transfer to the cache database is linked to projects

. To add a project of which the data should

be transfered into the cache database click on the

. To add a project of which the data should

be transfered into the cache database click on the  button. For every project that should be transferred you have several

options for configuration:

button. For every project that should be transferred you have several

options for configuration:

- Restriction of transfered taxonomic groups

- Restriction of transfered material categories

- Restriction of transfered localisations

- Restriction of the precision of the coordinates

- Restriction of transfered images

Data types handle the data for the cache database. A user needs access

to the data in the [source] database, the [cache]

database, the [project] database and the [taxon]

databases. To administrate the users who can transfer data into the

cache database, use the button  Login administration. For details see the chapter Login

administration.

Login administration. For details see the chapter Login

administration.

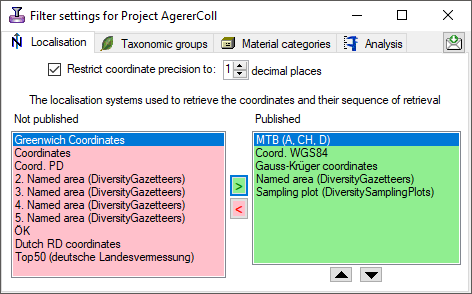

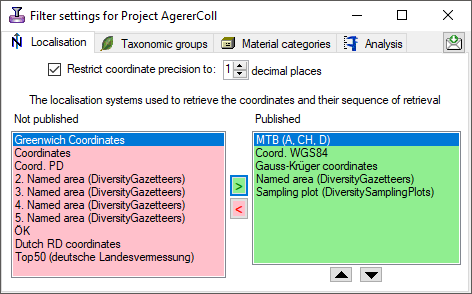

Restriction

- Taxonomic groups

- Material categories

- Localisation systems

- Images

To restrict the Taxonomic groups, Material catagories, Localisation

systems or Images that are transferred to the cache database choose the

corresponding options and select those that should be transferred into

the cache database in the tab pages that are added.

Coordinate precision

To reduce the precision of the coordinates of the localisation systems

transferred to the cache database you can check the corresponding option

and determine the number of digits after the decimal point.

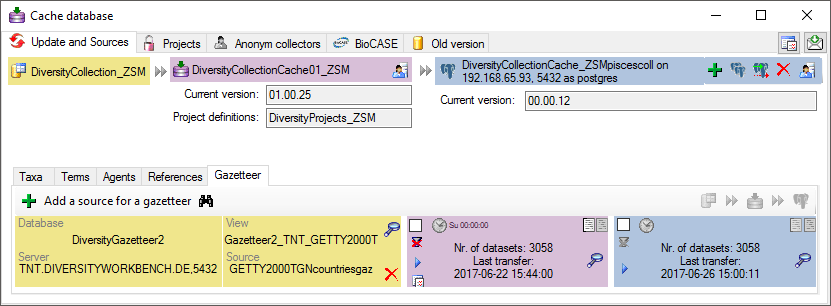

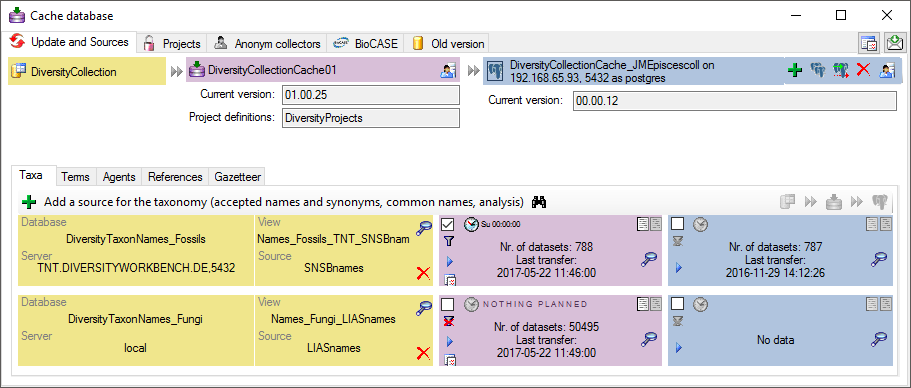

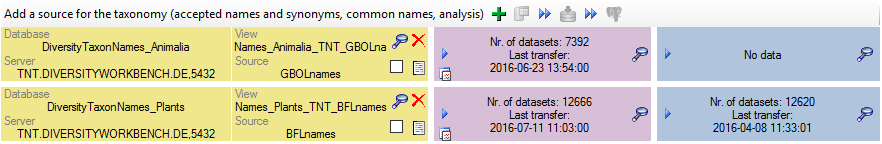

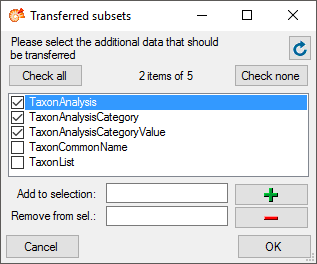

Taxonomy

The collection data may be linked to sources holding taxonomic

information ( DiversityTaxonNames). To provide this information add all

sources used in your collection data and transfer the corresponding data

into the cache database. The data in the taxonomic sources are organized

by projects, thus, you need to provide the sequence of the projects that

should be imported into the cache database for every source. A name will

be imported only once. This means that the name with synonymy to the

first imported project will be imported, all following data with this

name will be ignored.

DiversityTaxonNames). To provide this information add all

sources used in your collection data and transfer the corresponding data

into the cache database. The data in the taxonomic sources are organized

by projects, thus, you need to provide the sequence of the projects that

should be imported into the cache database for every source. A name will

be imported only once. This means that the name with synonymy to the

first imported project will be imported, all following data with this

name will be ignored.

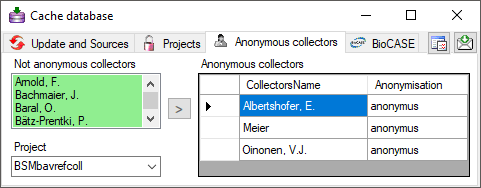

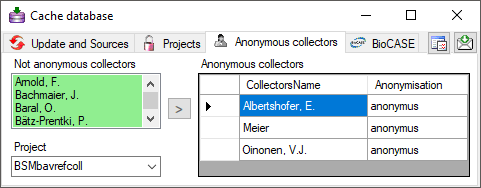

Anonym Colletors

Anonymous collectors

If collectors should be published as an anonym string, edit the

Anonymous collectors list. Use the

> button to move a collector from the selected project into the

list of anonymous collectors. To remove a collector from this list, just

delete it from the table (see below).

Anonymous collectors list. Use the

> button to move a collector from the selected project into the

list of anonymous collectors. To remove a collector from this list, just

delete it from the table (see below).

In the cache database these collectors will be translated into the

selected Anonymisation and a number, e.g. Anonymus 1. So the

data of one collector can still be recognized without revealing the name

of the collector.

Cache Database

Linked Server

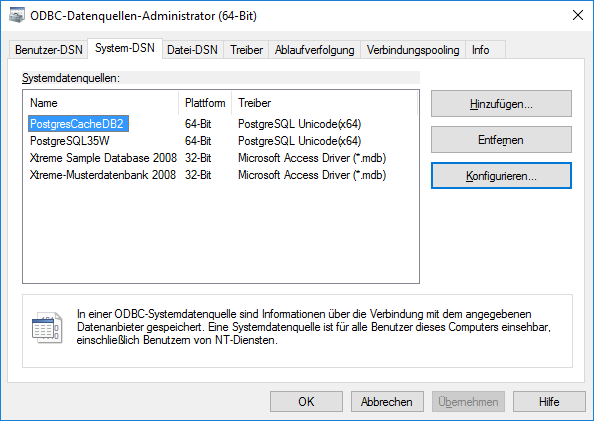

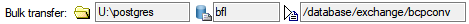

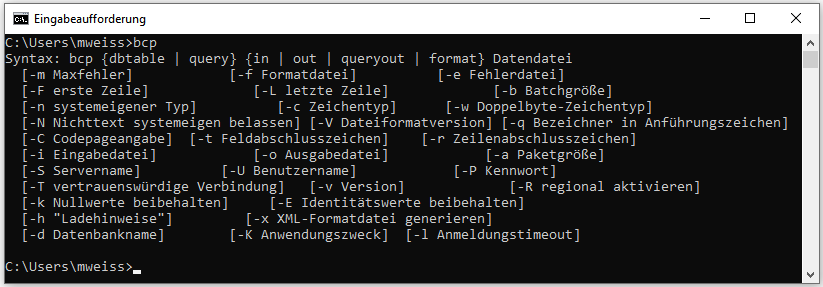

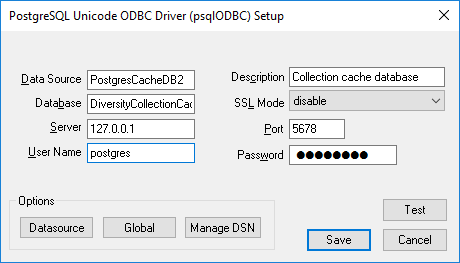

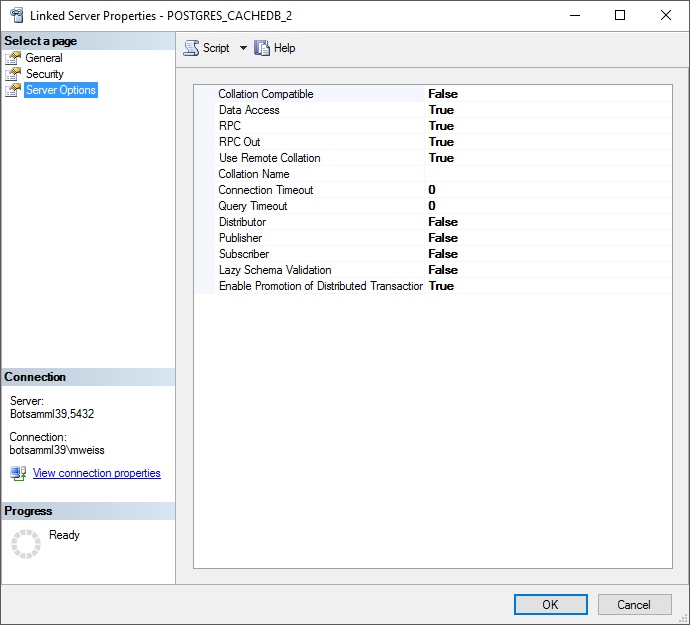

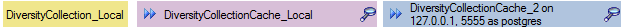

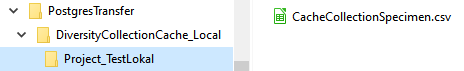

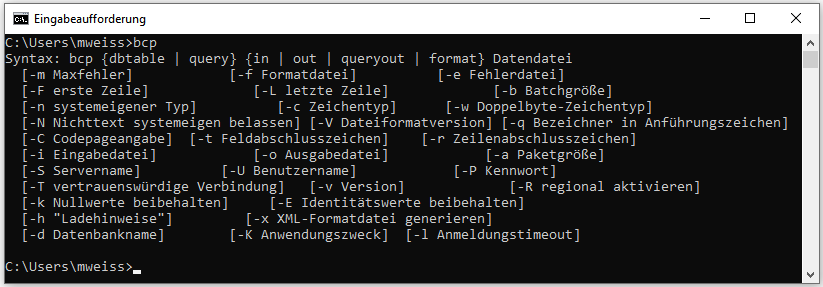

Transfer of data to Postgres via linked server

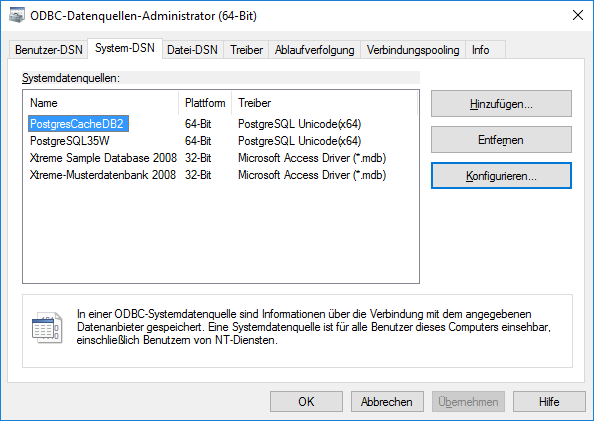

For projects with great amounts of data the preferred way to transfer

data is a linked server. To use a linked server you have too install the

ODBC driver software for Postgres on your SQL-Server, e.g. provided

here: postgresql. Download and

install the software, e.g.:

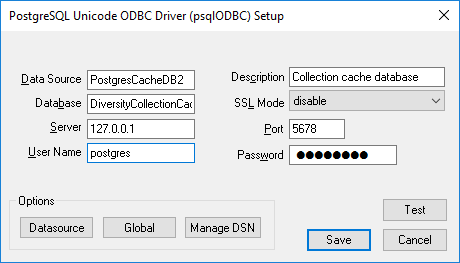

After the software has been installed, add a ODBC datasource.

Configured to access your Postgres cache database.

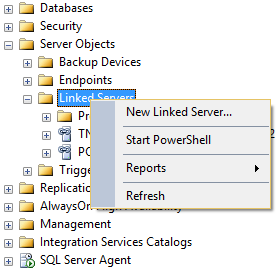

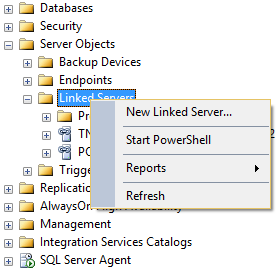

Now you can add a linked server in the SQL-Server Management Studio

(see below).

(see below).

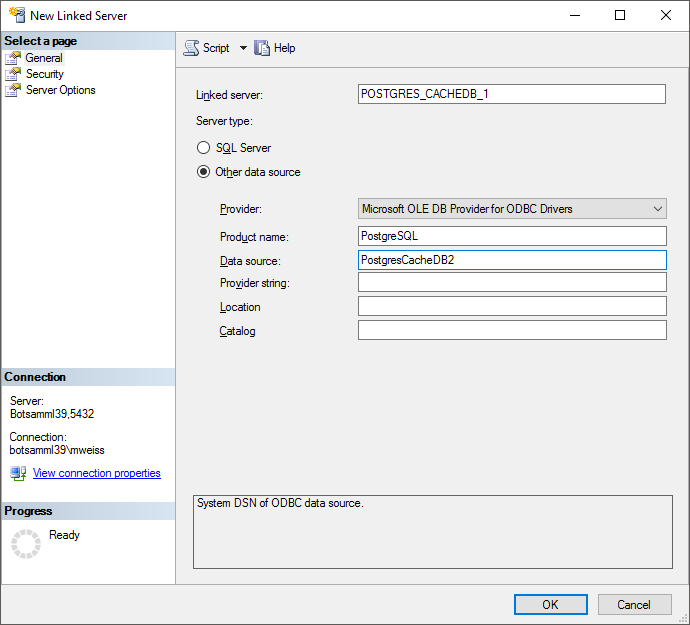

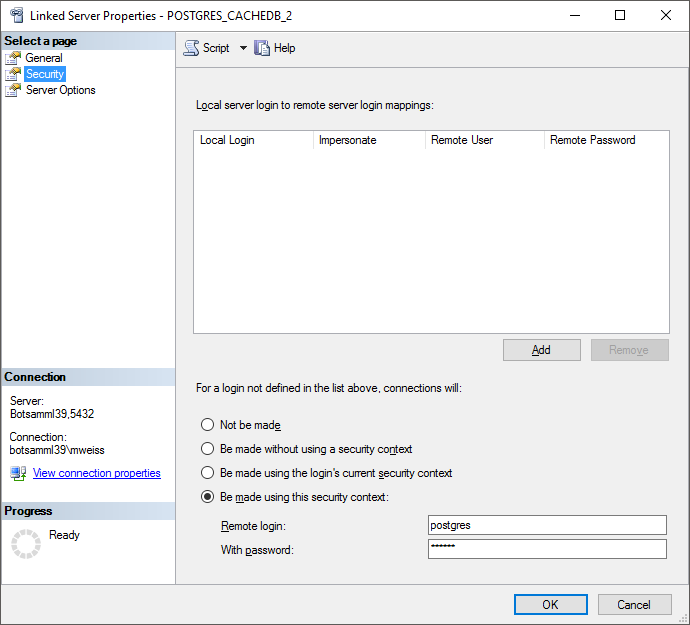

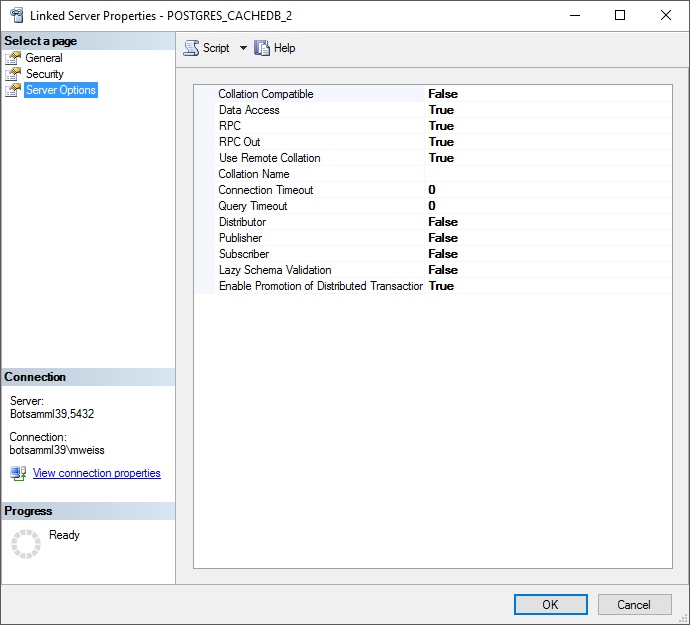

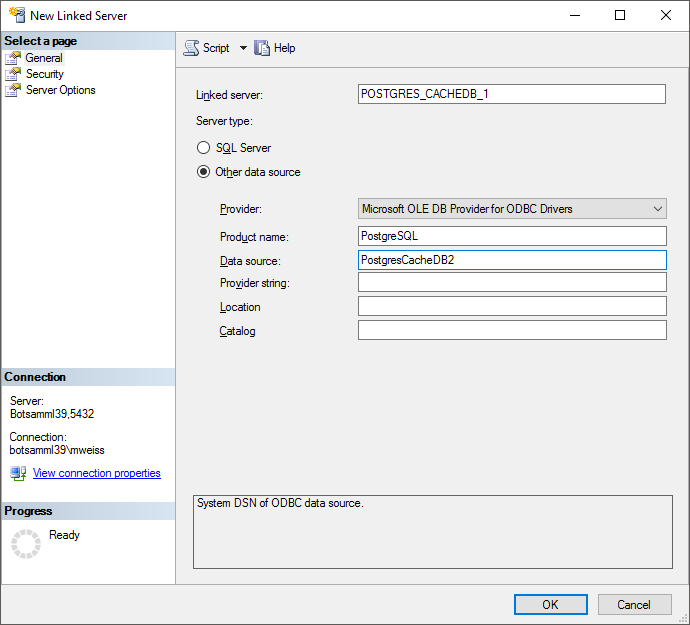

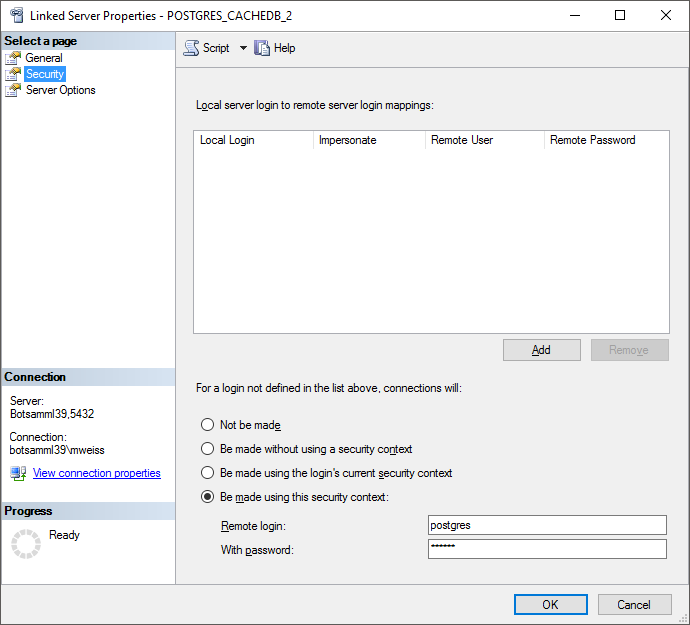

Configure the linked server using Microsoft OLE DB provider for ODBC

Drivers and the new created ODBC source as Data source (see below).

Now you are prepared to transfer your data on the fast route to the

postgres database.

Configure the linked server using Microsoft OLE DB provider for ODBC

Drivers and the new created ODBC source as Data source (see below).ow

you can add a linked server in the SQL-Server Management Studio

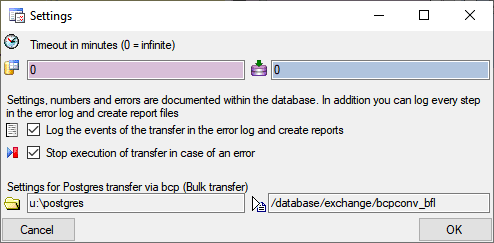

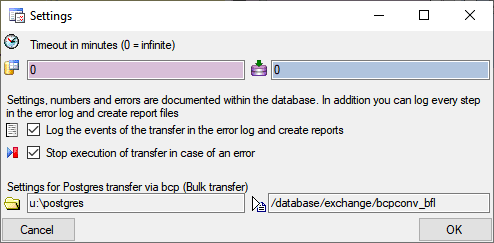

(see below). edit the

general settings for the transfer, click on the button in the main

form. A window as shown below will open. Here you can set the

(see below). edit the

general settings for the transfer, click on the button in the main

form. A window as shown below will open. Here you can set the  timeout for the transfer in minutes. The value

0 means that no time limit is set and the program should try inifinite

to transfer the data. Furthermore you can set the parameters for the

transfer of the data in chunks. If the amount of data is above a certain

threshold, it is faster to devide the data into smaller chunks. The

threshold for transfer into the

timeout for the transfer in minutes. The value

0 means that no time limit is set and the program should try inifinite

to transfer the data. Furthermore you can set the parameters for the

transfer of the data in chunks. If the amount of data is above a certain

threshold, it is faster to devide the data into smaller chunks. The

threshold for transfer into the  cache

database and into the

cache

database and into the  Postgres database can

be set as shown below, together with the maximal size of the chunks.

Postgres database can

be set as shown below, together with the maximal size of the chunks.

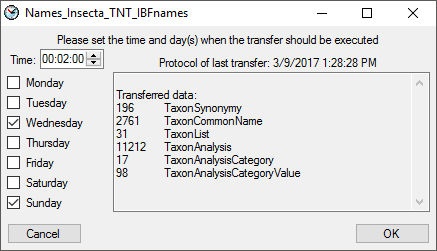

The scheduled transfer is meant to be lanched on a server on a regular

basis, e.g. once a week, once a day, every hour etc. . The transfer of

the data via the scheduled transfer will take place according to the

settings. This means the program will check if the next planned time for

a data transfer is passed and only than start to transfer the data. To

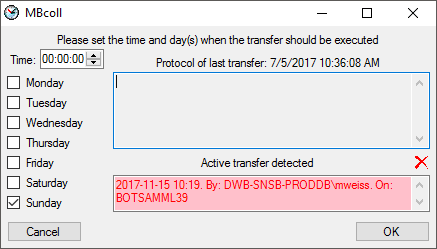

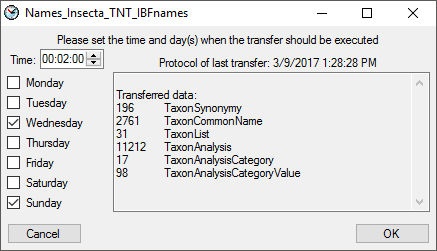

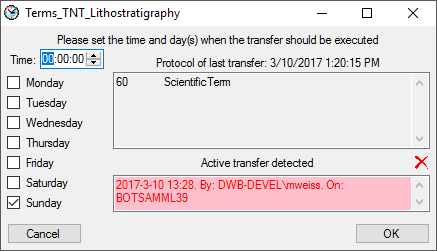

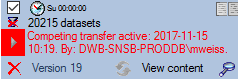

include a source in the schedule, check the  selector for the scheduler. To set the time and days scheduled for a

transfer, click on the

selector for the scheduler. To set the time and days scheduled for a

transfer, click on the  button. A window as

shown below will open where you can select the time and the day(s) of

the week when the transfer should be executed.

button. A window as

shown below will open where you can select the time and the day(s) of

the week when the transfer should be executed.

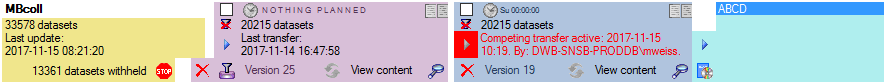

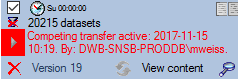

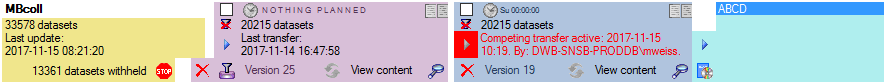

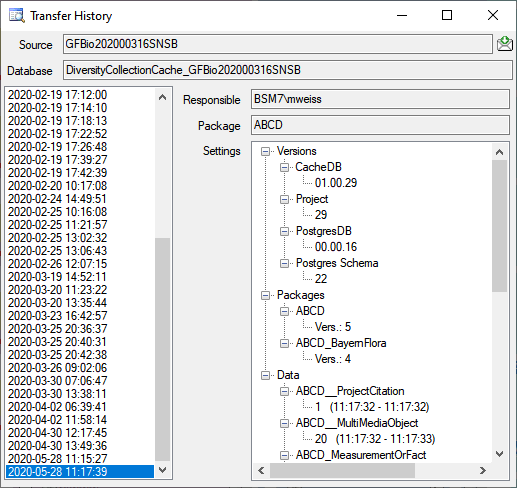

The planned points in time a shown in the form as shown below.

The protocol of the last transfer can seen as in the window above or if

you click on the  button. If an error occurred

this can be inspected with a click no the

button. If an error occurred

this can be inspected with a click no the  button.

button.

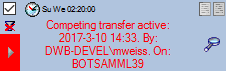

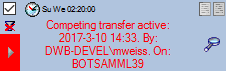

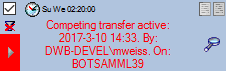

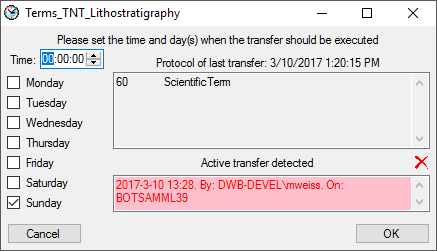

If another transfer on the same source has been started, no further

transfer will be started. In the program this competing transfer is

shown as below.

You can remove this block with a click on the  button. In opening window (see below) click on the

button. In opening window (see below) click on the  delete button. This will as well remove error

messages from previous transfers.

delete button. This will as well remove error

messages from previous transfers.

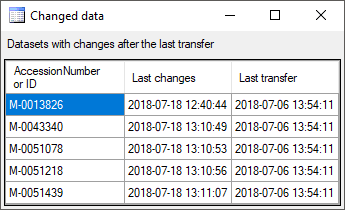

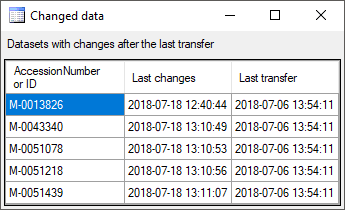

A further option for restriction of the transfers is the comparision of

the date when the last transfer has been executed. Click on the  button to change it to

button to change it to

. In this state the program will compare the

dates of the transfers and execute the transfer only if new data are

available.

. In this state the program will compare the

dates of the transfers and execute the transfer only if new data are

available.

Cache Database

Projects

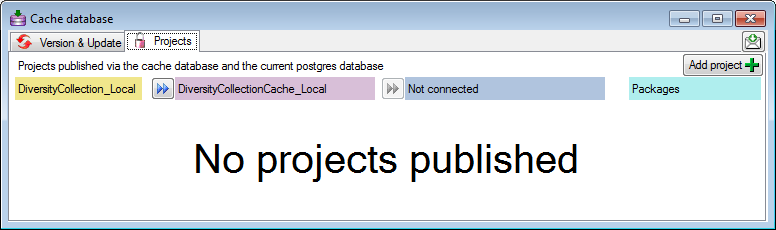

Adding Projects

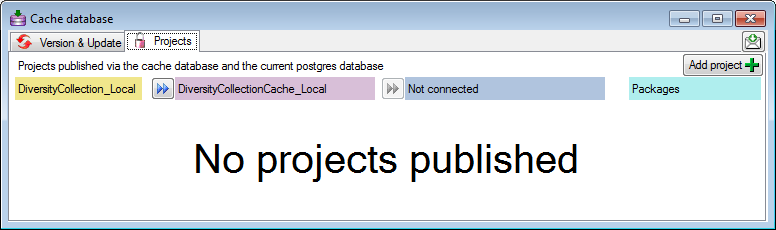

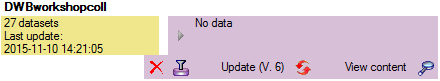

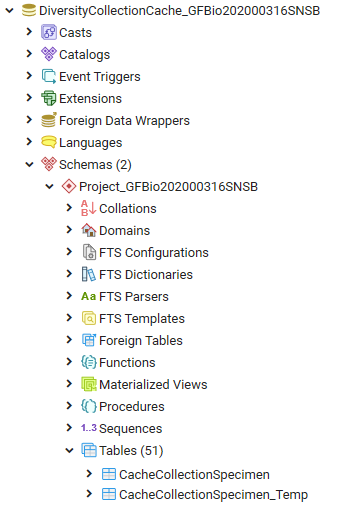

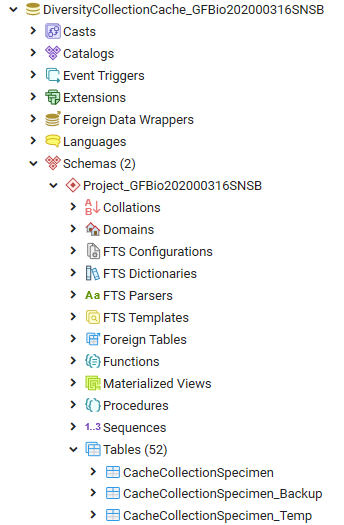

The data transferred into the [cache

database] are always transferred

according to a project they belong to. If no projects were added so far

the window will appear like shown below. For an introduction see a short

tutorial

.

.

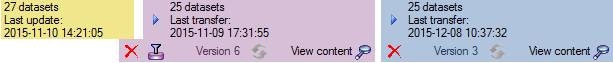

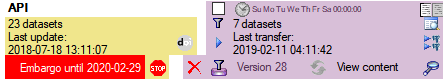

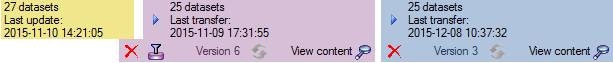

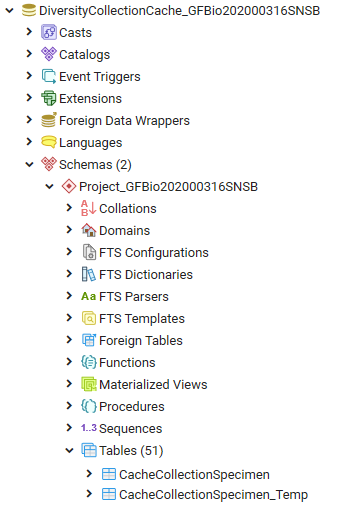

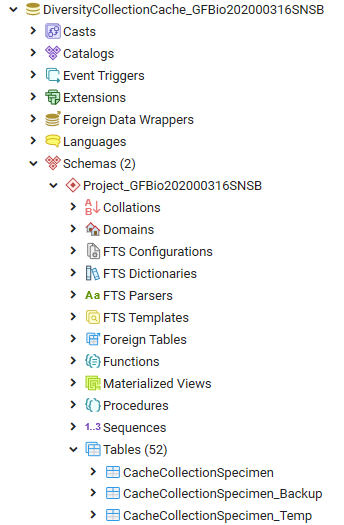

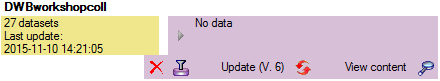

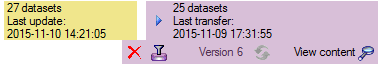

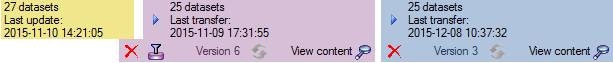

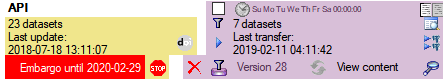

To add a new project for the transfer into the cache database, click on

the Add project  button. In the area below a

new entry as shown below will appear. The area on the right shows the

number of datasets in the project in the [source

database] together with the date of

the last update. To ensure the separation of the data between the

projects, DiversityCollection creates a separate schema for every

project named Project_[name of the project] together with needed

roles, tables etc..

button. In the area below a

new entry as shown below will appear. The area on the right shows the

number of datasets in the project in the [source

database] together with the date of

the last update. To ensure the separation of the data between the

projects, DiversityCollection creates a separate schema for every

project named Project_[name of the project] together with needed

roles, tables etc..

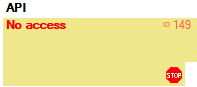

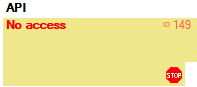

In case there are projects where you do not have access to, this will be

indicated as shown below.

In case a project has been renamed in the main database, a

button will appear as shown below. The

displayed name corresponds to the name in the main database. To see the

original name, click on the

button will appear as shown below. The

displayed name corresponds to the name in the main database. To see the

original name, click on the  button.

button.

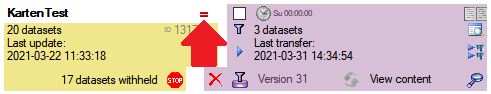

Update

Before transferring data you have to update the project schema to the

latest version, indicated by the appearance of an update button

. Click on the button to open a window as

shown below. Click on the Start update

. Click on the button to open a window as

shown below. Click on the Start update  button to update the schema to the latest version.

button to update the schema to the latest version.

After the update the database is ready to transfer data into.

Restrictions

Besides the restrictions in the source database, you can set further

restrictions for this transfer. Click on

the  button to edit the datawithholding reasons

for the data of the project. Click on the

button to edit the datawithholding reasons

for the data of the project. Click on the

button and choose the ranges of the

data that should be transferred (see below).

button and choose the ranges of the

data that should be transferred (see below).

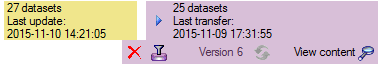

Transfer

To transfer the data you have 3 options as described in the

Transfer chapter.

Afterwards the number and date of the transferred data are visible as

shown below.

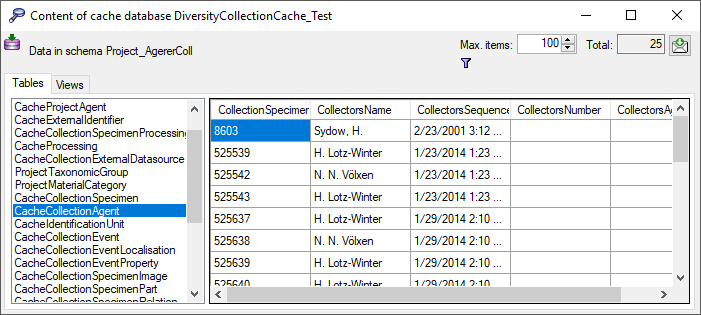

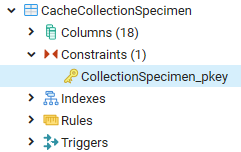

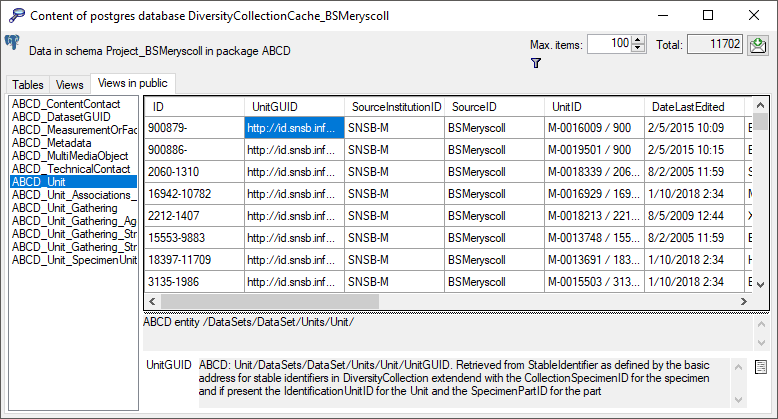

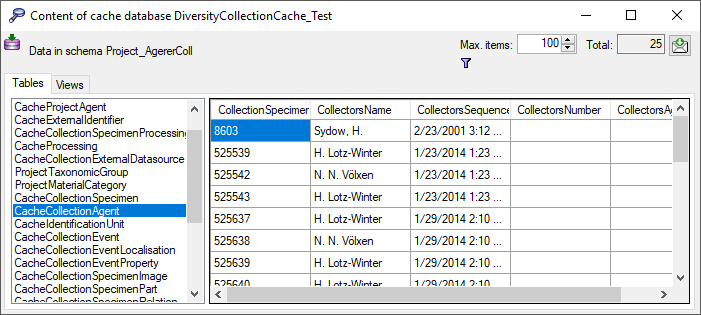

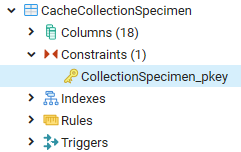

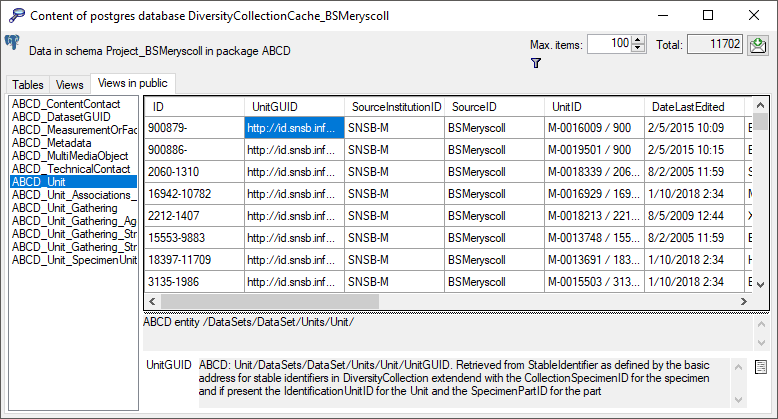

Content

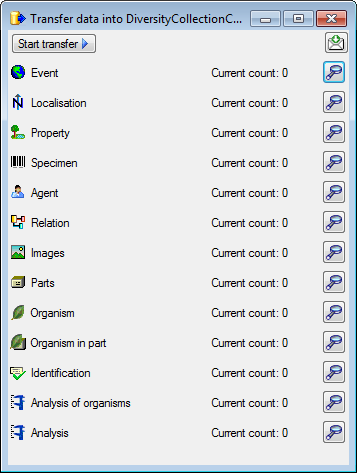

To inspect the transferred data use the View content

button. A window as shown below will open where

all tables containing the data of the project are listed.

button. A window as shown below will open where

all tables containing the data of the project are listed.

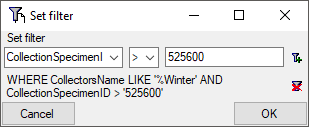

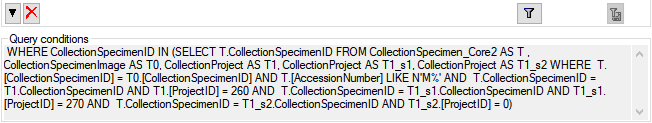

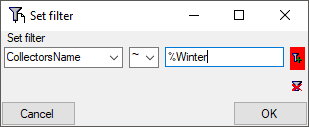

Filter

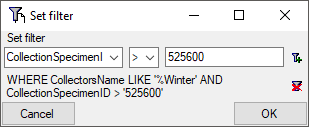

Click on the  button to filter the content. A

window as shown below will open. Choose the column for the filter, the

operator (e.g. = ) and the filter value (see below).

button to filter the content. A

window as shown below will open. Choose the column for the filter, the

operator (e.g. = ) and the filter value (see below).

Now click on the  button to add the filter

criteria to the table filter. You may add as many criteria as needed

(see below). With the

button to add the filter

criteria to the table filter. You may add as many criteria as needed

(see below). With the  button you can

clear the filter..

button you can

clear the filter..

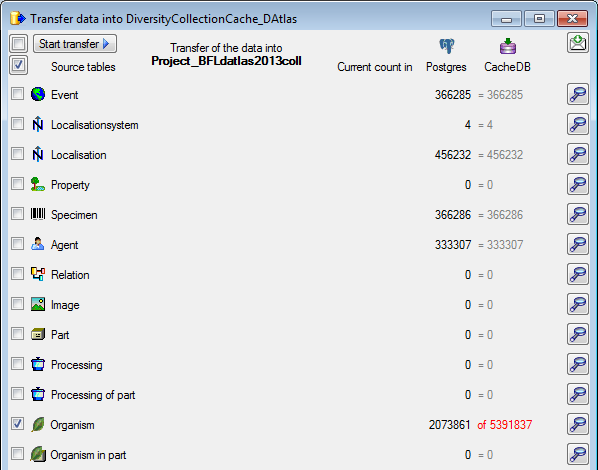

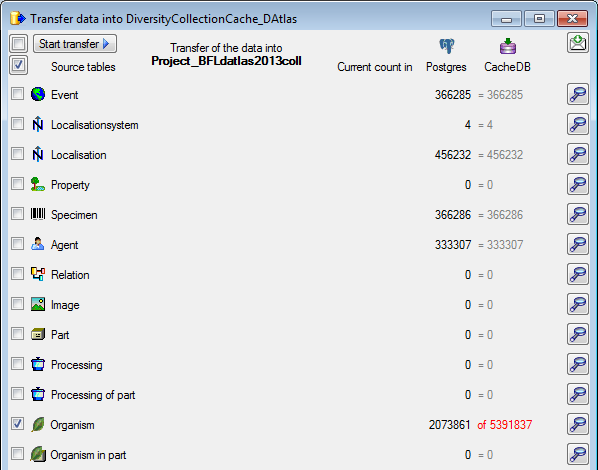

Postgres

Before you can transfer the data into the Postgresdatabase, you have to connect

to the Postgres database and click on the

to the Postgres database and click on the  button to establish

the project and run necessary updates

button to establish

the project and run necessary updates  . After

the project is established and up to date, use the

. After

the project is established and up to date, use the

button to transfer the data in the Postgres

area (see below).

button to transfer the data in the Postgres

area (see below).

If a project is exported into another  Postgres database on the same

server, these databases will be listed underneath the Postgres block

(see image below). For an overview of all target Postgres databases

click on the

Postgres database on the same

server, these databases will be listed underneath the Postgres block

(see image below). For an overview of all target Postgres databases

click on the  button.

button.

If the target is placed on the current server, the text will appear in

black (see image below). Packages will be listed for the other targets

as well.

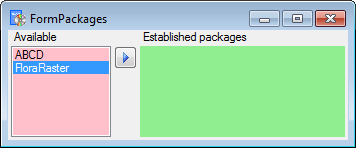

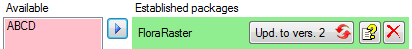

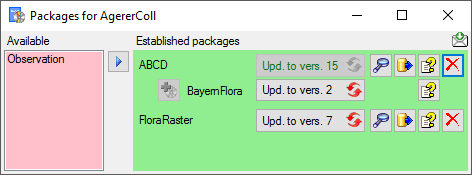

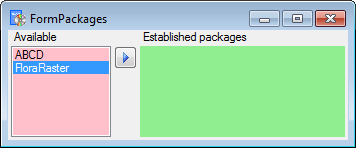

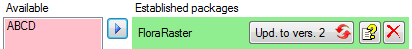

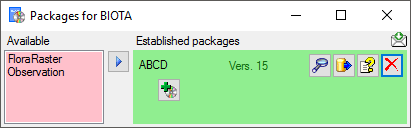

In the  Postgres

database you can install

packages

Postgres

database you can install

packages

to adapt the data to any needed

format.

to adapt the data to any needed

format.

Cache Database

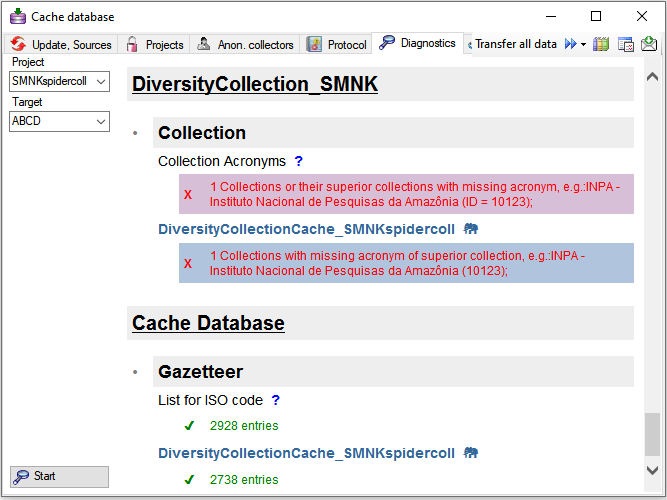

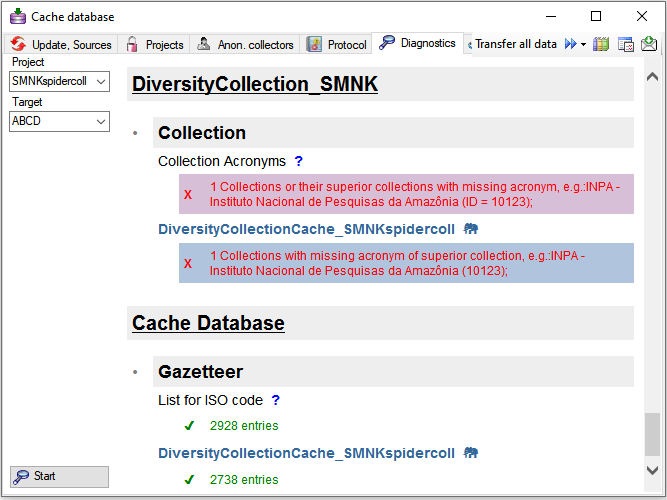

Diagnostics

To test data completeness of data targets you can use the diagnostics

. Choose the project and the target

you want to test and start the diagnostics. If the data in a postgres

database should be included in the test, please connect to this database

before starting the test. The result of the diagnose as shown below

marks missing information.

. Choose the project and the target

you want to test and start the diagnostics. If the data in a postgres

database should be included in the test, please connect to this database

before starting the test. The result of the diagnose as shown below

marks missing information.

Diversity Collection

Cache database

Infrastructure

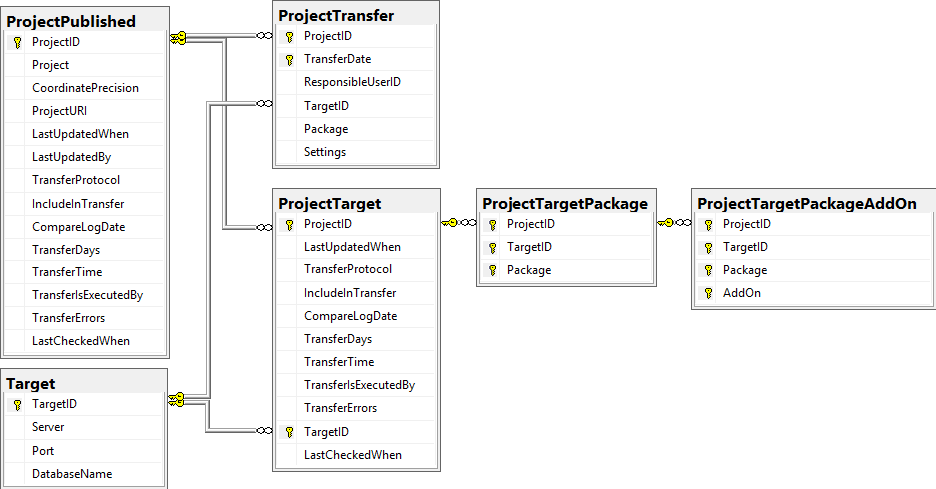

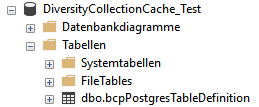

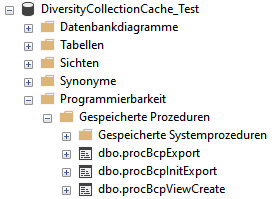

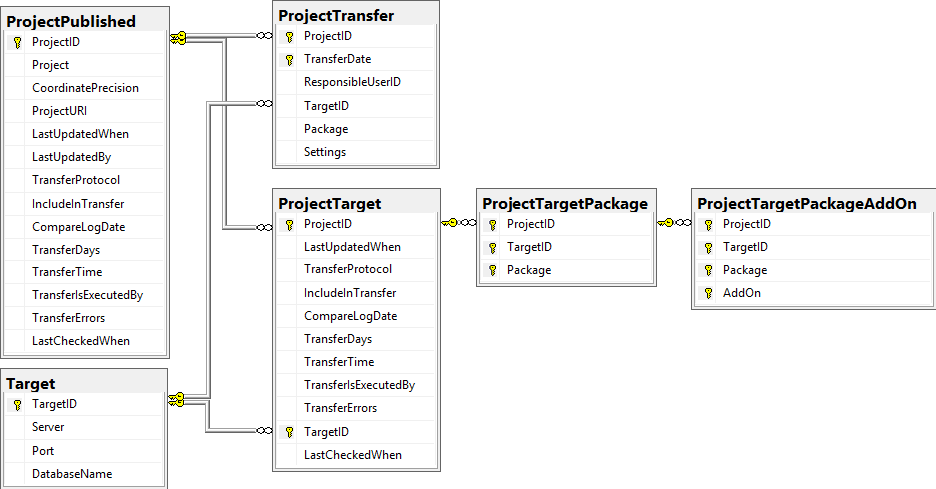

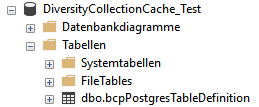

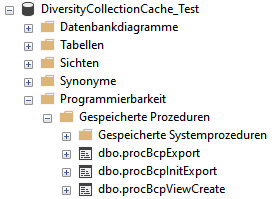

For the administration of the data that are published via the cache

database, certain tables as shown below are used. These are either

placed in the schema dbo or a schema named according to the published

project, e.g. Project_Test for a project with the name Test.

Central tables

There are a number of tables placed in the schema dbo that are

accessible by all projects.

Project tables

The central project tables contain the information about the projects

that are published together with the target (Postgres) databases and the

packages including optional add-ons into which they had been

transferred. This information is used to ensure a recovery in case of a

loss of the targets.

Source tables

To access sources from other modules (e.g. DiversityAgents) there are

tables for the storage of the principal access to the modules and a

number of tables containing the data (depending on the module).

Access tables

These tables contain the principal access like the name of the view

defined to access the data. The example below lists the tables defined

for the module DiversityAgents, but there are corresponding tables for

every module accessed by the cache database.

Data tables

These tables contain the data provided by the module and therefore

depend on the module. The example below lists the tables defined for the

module DiversityAgents, but there are corresponding tables for every

module accessed by the cache database.

To access the data in the module there are views generated by the

client. The name of these views are composed according to the name of

the database, the server and the project to ensure a unique name. These

are stored in the table AgentSource and are used by the client for a

transfer of the data from the module database into the tables in the

cache database. The example below lists the views for the module

DiversityAgents.

Project tables

These tables contain the data of the projects with every project having

its own schema. These tables correspond to the tables in the main

database of the module with certain limitations (no logging columns,

internal notes etc.)

Project procedures for the data transfer into the project tables

For every project table there is a procedure that transfers the data

from the main database into the cache table. The names of these

procedures are procPublish + the name of the table in the main database

e.g. procPublishAnalysis for the transfer from the table

Analysis into the table CacheAnalysis.

Subsections of Infrastructure

Diversity Collection

Cache database

TABLES

The following objects are not included:

- Logging tables

- Enumeration tables

- System objects

- Objects marked as obsolete

- Previous versions of objects

Table Agent

The main table with the data of the agent

| Column |

Data type |

Description |

Nullable |

Relation |

| BaseURL |

varchar (500) |

The basic URL as defined in the module database |

NO |

- |

| AgentID |

int |

Unique ID for the Agent (= Primary key) |

NO |

- |

| AgentURI |

varchar (255) |

The link to the dataset, i. e. the BaseURL + the AgentID |

YES |

- |

| AgentParentID |

int |

The AgentID of the superior agent if agents are organized within a hierarchy |

YES |

- |

| AgentName |

nvarchar (200) |

The whole name of the agent as shown e.g. for selection in an user interface. For persons this entry will be generated as follows: LastName, FirstNames, AgentTitle |

NO |

- |

| AgentTitle |

nvarchar (50) |

The title of the agent, e.g. Dr., Prof. |

YES |

- |

| GivenName |

nvarchar (255) |

The first names of the agent (if a person) or the name of e.g. an institution |

YES |

- |

| GivenNamePostfix |

nvarchar (50) |

Variable part of name, correctly placed at end of given names |

YES |

- |

| InheritedNamePrefix |

nvarchar (50) |

Variable part of name, correctly placed at the beginning of the inherited names |

YES |

- |

| InheritedName |

nvarchar (255) |

The last names of the agent (if a person) |

YES |

- |

| InheritedNamePostfix |

nvarchar (50) |

Additions after inherited name, like generation (Jr., III.) or names of religious orders |

YES |

- |

| Abbreviation |

nvarchar (50) |

Abbreviation of the agent |

YES |

- |

| AgentType |

nvarchar (50) |

The type of the agent, e.g. person, company |

YES |

- |

| AgentRole |

nvarchar (255) |

The role of an agent esp. a person within an organization. e.g. “Database Administrator” or “Curator” |

YES |

- |

| AgentGender |

nvarchar (50) |

The gender resp. sex of the agent |

YES |

- |

| Description |

nvarchar (1000) |

A description of the agent |

YES |

- |

| OriginalSpelling |

nvarchar (200) |

Name as originally written in e.g. chinese or cyrillic letters |

YES |

- |

| Notes |

nvarchar (MAX) |

Notes about the agent |

YES |

- |

| ValidFromDate |

datetime |

The date of the begin of the exsistence of the agent, e.g. the birthday of a person or the founding of an institution, calculated from ValidFromDay, - Month and -Year |

YES |

- |

| ValidUntilDate |

datetime |

The date of the end of the exsistence of the agent, e.g. death of a person or closing of an institute, calculated from ValidUntilDay, - Month and -Year |

YES |

- |

| SynonymToAgentID |

int |

The AgentID of the agent which was selected as a replacement for the current agent, e.g. if to equal datasets were imported from different sources |

YES |

- |

| ProjectID |

int |

The ID of the project in the module database containing the data |

NO |

- |

| LogInsertedWhen |

smalldatetime |

Date and time when record was first entered (typed or imported) into this system.Default value: getdate() |

YES |

- |

| SourceView |

nvarchar (200) |

The name of the source view of the data |

NO |

- |

The contact information resp. addresses of the agents

| Column |

Data type |

Description |

Nullable |

Relation |

| AgentID |

int |

Refers to the ID of Agent (= Foreign key and part of primary key) |

NO |

- |

| DisplayOrder |

tinyint |

Display order of records in user interface. DisplayOrder 1 corresponds to the preferred address (= part of primary key) |

NO |

- |

| AddressType |

nvarchar (50) |

Type of the adress, e.g. private |

YES |

- |

| Country |

nvarchar (255) |

Country of the address |

YES |

- |

| City |

nvarchar (255) |

City of the address |

YES |

- |

| PostalCode |

nvarchar (50) |

ZIP or postcode of the address (usually output before or after the city) |

YES |

- |

| Streetaddress |

nvarchar (255) |

Usually street name and number, but may also contain post office box |

YES |

- |

| Address |

nvarchar (255) |

Free text postal address of the agent |

YES |

- |

| Telephone |

nvarchar (50) |

Phone number, including area code |

YES |

- |

| CellularPhone |

nvarchar (50) |

The number of a mobile telephone device of the agent |

YES |

- |

| Telefax |

nvarchar (50) |

Fax number, including area code |

YES |

- |

| Email |

nvarchar (255) |

E-mail address of the agent |

YES |

- |

| URI |

nvarchar (255) |

URI pointing to a homepage containing further information |

YES |

- |

| Notes |

nvarchar (MAX) |

Notes about this address |

YES |

- |

| ValidFrom |

datetime |

The date when this address became valid as date according to ISO 8601 |

YES |

- |

| ValidUntil |

datetime |

The date of the expiration of the validity of this address as date according to ISO 8601 |

YES |

- |

| SourceView |

nvarchar (200) |

The name of the source view of the data |

NO |

- |

| BaseURL |

varchar (500) |

- |

NO |

- |

Table AgentIdentifier

| Column |

Data type |

Description |

Nullable |

Relation |

| AgentID |

int |

- |

NO |

- |

| Identifier |

nvarchar (190) |

- |

NO |

- |

| IdentifierURI |

varchar (500) |

- |

YES |

- |

| Type |

nvarchar (50) |

- |

YES |

- |

| Notes |

nvarchar (MAX) |

- |

YES |

- |

| SourceView |

varchar (128) |

- |

NO |

- |

| BaseURL |

varchar (500) |

- |

NO |

- |

Table AgentImage

| Column |

Data type |

Description |

Nullable |

Relation |

| AgentID |

int |

- |

NO |

- |

| URI |

varchar (255) |

- |

NO |

- |

| Description |

nvarchar (MAX) |

- |

YES |

- |

| Type |

nvarchar (50) |

- |

YES |

- |

| Sequence |

int |

- |

YES |

- |

| SourceView |

varchar (128) |

- |

NO |

- |

| BaseURL |

varchar (500) |

- |

NO |

- |

Table AgentSource

The sources for the data from a module database accessed via a view defined in the cache database

| Column |

Data type |

Description |

Nullable |

Relation |

| SourceView |

nvarchar (200) |

the name of the view retrieving the data from the database |

NO |

- |

| Source |

nvarchar (500) |

The name of the source, e.g. the name of the project as defined in the source module |

YES |

- |

| SourceID |

int |

The ID of the source, e.g. the ID of the project as defined in the source module |

YES |

- |

| LinkedServerName |

nvarchar (500) |

If the source is located on a linked server, the name of the linked server |

YES |

- |

| DatabaseName |

nvarchar (50) |

The name of the database where the data are taken from |

YES |

- |

| Subsets |

nvarchar (500) |

Subsets of a source: The names of the tables included in the transfer separted by " |

" |

YES |

| TransferProtocol |

nvarchar (MAX) |

The protocol created during the transfer of the data |

YES |

- |

| IncludeInTransfer |

bit |

If the source should be included in a schedule based data transfer |

YES |

- |

| LastUpdatedWhen |

datetime |

The date of the last update of the data |

YES |

- |

| CompareLogDate |

bit |

If the log dates of the transferred data should be compared to decide if data are transferredDefault value: (0) |

YES |

- |

| TransferDays |

varchar (7) |

The days the transfer should be done, coded as integer values with Sunday = 0 up to Saturday = 6Default value: ‘0’ |

YES |

- |

| TransferTime |

time |

The time when the transfer should be executedDefault value: ‘00:00:00.00’ |

YES |

- |

| TransferIsExecutedBy |

nvarchar (500) |

If any transfer of the data is active |

YES |

- |

| TransferErrors |

nvarchar (MAX) |

Errors that occurred during the data transfers |

YES |

- |

| LastCheckedWhen |

datetime |

The date and time when the last check for the need of an update of the content occurred |

YES |

- |

| Version |

int |

-Default value: (0) |

YES |

- |

Table AgentSourceTarget

The targets of the projects, i.e. the Postgres databases

| Column |

Data type |

Description |

Nullable |

Relation |

| SourceView |

nvarchar (200) |

SourceView as defined in table AgentSource |

NO |

Refers to table AgentSource |

| Target |

nvarchar (200) |

The targets of the projects, i.e. the Postgres databases where the data should be transferred to |

NO |

- |

| LastUpdatedWhen |

datetime |

The date of the last update of the project data |

YES |

- |

| TransferProtocol |

nvarchar (MAX) |

The protocol created during the transfer of the data |

YES |

- |

| IncludeInTransfer |

bit |

If the project should be included in a schedule based data transferDefault value: (1) |

YES |

- |

| CompareLogDate |

bit |

If the log dates of the transferred data should be compared to decide if data are transferredDefault value: (0) |

YES |

- |

| TransferDays |

varchar (7) |

The days the transfer should be done, coded as integer values with Sunday = 0 up to Saturday = 6Default value: (0) |

YES |

- |

| TransferTime |

time |

The time when the transfer should be executedDefault value: ‘00:00:00.00’ |

YES |

- |

| TransferIsExecutedBy |

nvarchar (500) |

If any transfer of the data is active |

YES |

- |

| TransferErrors |

nvarchar (MAX) |

Errors that occurred during the data transfers |

YES |

- |

| LastCheckedWhen |

datetime |

The date and time when the last check for the need of an update of the content occurred |

YES |

- |

Table AgentSourceView

| Column |

Data type |

Description |

Nullable |

Relation |

| BaseURL |

varchar (500) |

- |

NO |

- |

| AgentID |

int |

- |

NO |

- |

| SourceView |

nvarchar (128) |

The name of the source view of the data |

NO |

- |

| LogInsertedWhen |

smalldatetime |

Date and time when record was first entered (typed or imported) into this system.Default value: getdate() |

YES |

- |

Table bcpPostgresTableDefinition

| Column |

Data type |

Description |

Nullable |

Relation |

| SchemaName |

varchar (200) |

- |

NO |

- |

| TableName |

varchar (200) |

- |

NO |

- |

| ColumnName |

varchar (200) |

- |

NO |

- |

| DataType |

varchar (50) |

- |

YES |

- |

| OrdinalPositon |

int |

- |

YES |

- |

Table Gazetteer

| Column |

Data type |

Description |

Nullable |

Relation |

| BaseURL |

varchar (255) |

- |

NO |

- |

| NameID |

int |

- |

NO |

- |

| Name |

nvarchar (400) |

- |

NO |

- |

| LanguageCode |

nvarchar (50) |

- |

YES |

- |

| PlaceID |

int |

- |

NO |

- |

| PlaceType |

nvarchar (50) |

- |

YES |

- |

| PreferredName |

nvarchar (400) |

- |

NO |

- |

| PreferredNameID |

int |

- |

NO |

- |

| PreferredNameLanguageCode |

nvarchar (50) |

- |

YES |

- |

| ProjectID |

int |

- |

NO |

- |

| LogInsertedWhen |

smalldatetime |

Date and time when record was first entered (typed or imported) into this system.Default value: getdate() |

YES |

- |

| SourceView |

nvarchar (200) |

The name of the source view of the data |

NO |

- |

| NameURI |

varchar (255) |

- |

YES |

- |

| ExternalNameID |

nvarchar (50) |

- |

YES |

- |

| ExternalDatabaseID |

int |

- |

YES |

- |

Table GazetteerExternalDatabase

| Column |

Data type |

Description |

Nullable |

Relation |

| ExternalDatabaseID |

int |

- |

NO |

- |

| ExternalDatabaseName |

nvarchar (60) |

- |

NO |

- |

| ExternalDatabaseVersion |

nvarchar (255) |

- |

NO |

- |

| ExternalAttribute_NameID |

nvarchar (255) |

- |

YES |

- |

| ExternalAttribute_PlaceID |

nvarchar (255) |

- |

YES |

- |

| ExternalCoordinatePrecision |

nvarchar (255) |

- |

YES |

- |

| SourceView |

nvarchar (200) |

- |

NO |

- |

| BaseURL |

varchar (500) |

- |

NO |

- |

Table GazetteerSource

| Column |

Data type |

Description |

Nullable |

Relation |

| SourceView |

nvarchar (200) |

the name of the view retrieving the data from the database |

NO |

- |

| Source |

nvarchar (500) |

- |

YES |

- |

| SourceID |

int |

- |

YES |

- |

| LinkedServerName |

nvarchar (500) |

If the source is located on a linked server, the name of the linked server |

YES |

- |

| DatabaseName |

nvarchar (50) |

The name of the database where the data are taken from |

YES |

- |

| Subsets |

nvarchar (500) |

Subsets of a source: The names of the tables included in the transfer separted by " |

" |

YES |

| TransferProtocol |

nvarchar (MAX) |

The protocol created during the transfer of the data |

YES |

- |

| IncludeInTransfer |

bit |

If the source should be included in a schedule based data transfer |

YES |

- |

| LastUpdatedWhen |

datetime |

The date of the last update of the data |

YES |

- |

| CompareLogDate |

bit |

If the log dates of the transferred data should be compared to decide if data are transferredDefault value: (0) |

YES |

- |

| TransferDays |

varchar (7) |

The days the transfer should be done, coded as integer values with Sunday = 0 up to Saturday = 6Default value: ‘0’ |

YES |

- |

| TransferTime |

time |

The time when the transfer should be executedDefault value: ‘00:00:00.00’ |

YES |

- |

| TransferIsExecutedBy |

nvarchar (500) |

If any transfer of the data is active |

YES |

- |

| TransferErrors |

nvarchar (MAX) |

Errors that occurred during the data transfers |

YES |

- |

| LastCheckedWhen |

datetime |

The date and time when the last check for the need of an update of the content occurred |

YES |

- |

| Version |

int |

-Default value: (0) |

YES |

- |

Table GazetteerSourceTarget

The targets of the projects, i.e. the Postgres databases

| Column |

Data type |

Description |

Nullable |

Relation |

| SourceView |

nvarchar (200) |

SourceView as defined in table GazetteerSource |

NO |

Refers to table GazetteerSource |

| Target |

nvarchar (200) |

The targets of the projects, i.e. the Postgres databases where the data should be transferred to |

NO |

- |

| LastUpdatedWhen |

datetime |

The date of the last update of the project data |

YES |

- |

| TransferProtocol |

nvarchar (MAX) |

The protocol created during the transfer of the data |

YES |

- |

| IncludeInTransfer |

bit |

If the project should be included in a schedule based data transferDefault value: (1) |

YES |

- |

| CompareLogDate |

bit |

If the log dates of the transferred data should be compared to decide if data are transferredDefault value: (0) |

YES |

- |

| TransferDays |

varchar (7) |

The days the transfer should be done, coded as integer values with Sunday = 0 up to Saturday = 6Default value: (0) |

YES |

- |

| TransferTime |

time |

The time when the transfer should be executedDefault value: ‘00:00:00.00’ |

YES |

- |

| TransferIsExecutedBy |

nvarchar (500) |

If any transfer of the data is active |

YES |

- |

| TransferErrors |

nvarchar (MAX) |

Errors that occurred during the data transfers |

YES |

- |

| LastCheckedWhen |

datetime |

The date and time when the last check for the need of an update of the content occurred |

YES |

- |

Table GazetteerSourceView

| Column |

Data type |

Description |

Nullable |

Relation |

| BaseURL |

varchar (500) |

- |

NO |

- |

| NameID |

int |

- |

NO |

- |

| SourceView |

nvarchar (128) |

The name of the source view of the data |

NO |

- |

| LogInsertedWhen |

smalldatetime |

Date and time when record was first entered (typed or imported) into this system.Default value: getdate() |

YES |

- |

Table ProjectPublished

The projects published via the cache database (Details about the projects are defined in DiversityProjects)

| Column |

Data type |

Description |

Nullable |

Relation |

| ProjectID |

int |

ID of the project to which the specimen belongs (Projects are defined in DiversityProjects) |

NO |

- |

| Project |

nvarchar (50) |

The name or title of the project as shown in a user interface (Projects are defined in DiversityProjects) |

YES |

- |

| CoordinatePrecision |

tinyint |

Optional reduction of the precision of the coordinates within the project |

YES |

- |

| ProjectURI |

varchar (255) |

The URI of the project, e.g. as provided by the module DiversityProjects. |

YES |

- |

| LastUpdatedWhen |

datetime |

The date of the last update of the project data |

YES |

- |

| LastUpdatedBy |

nvarchar (50) |

The user reponsible for the last update. |

YES |

- |

| TransferProtocol |

nvarchar (MAX) |

The protocol created during the transfer of the data |

YES |

- |

| IncludeInTransfer |

bit |

If the project should be included in a schedule based data transfer |

YES |

- |

| CompareLogDate |

bit |

If the log dates of the transferred data should be compared to decide if data are transferredDefault value: (0) |

YES |

- |

| TransferDays |

varchar (7) |

The days the transfer should be done, coded as integer values with Sunday = 0 up to Saturday = 6Default value: ‘0’ |

YES |

- |

| TransferTime |

time |

The time when the transfer should be executedDefault value: ‘00:00:00.00’ |

YES |

- |

| TransferIsExecutedBy |

nvarchar (500) |

If any transfer of the data is active |

YES |

- |

| TransferErrors |

nvarchar (MAX) |

Errors that occurred during the data transfers |

YES |

- |

| LastCheckedWhen |

datetime |

The date and time when the last check for the need of an update of the content occurred |

YES |

- |

| Restriction |

nvarchar (MAX) |

An additional restriction of the content of the published data |

YES |

- |

Table ProjectTarget

The targets of the projects, i.e. the Postgres databases

| Column |

Data type |

Description |

Nullable |

Relation |

| ProjectID |

int |

ID of the project to which the specimen belongs (Projects are defined in DiversityProjects) |

NO |

Refers to table ProjectPublished |

| LastUpdatedWhen |

datetime |

The date of the last update of the project data |

YES |

- |

| TransferProtocol |

nvarchar (MAX) |

The protocol created during the transfer of the data |

YES |

- |

| IncludeInTransfer |

bit |

If the project should be included in a schedule based data transferDefault value: (1) |

YES |

- |

| CompareLogDate |

bit |

If the log dates of the transferred data should be compared to decide if data are transferredDefault value: (0) |

YES |

- |

| TransferDays |

varchar (7) |

The days the transfer should be done, coded as integer values with Sunday = 0 up to Saturday = 6Default value: (0) |

YES |

- |

| TransferTime |

time |

The time when the transfer should be executedDefault value: ‘00:00:00.00’ |

YES |

- |

| TransferIsExecutedBy |

nvarchar (500) |

If any transfer of the data is active |

YES |

- |

| TransferErrors |

nvarchar (MAX) |

Errors that occurred during the data transfers |

YES |

- |

| TargetID |

int |

The ID of the server, relates to table Target |

NO |

Refers to table Target |

| LastCheckedWhen |

datetime |

The date and time when the last check for the need of an update of the content occurred |

YES |

- |

| UseBulkTransfer |

bit |

If the bulk transfer should be used for the transfer of data |

YES |

- |

Table ProjectTargetPackage

Packages for projects as documented in the table Package in the Postgres database

| Column |

Data type |

Description |

Nullable |

Relation |

| ProjectID |

int |

Refers to ProjectID in table ProjectTarget |

NO |

Refers to table ProjectTarget |

| TargetID |

int |

Referes to TargetID in table ProjectTarget |

NO |

Refers to table ProjectTarget |

| Package |

nvarchar (50) |

Package installed for this project target |

NO |

- |

Table ProjectTargetPackageAddOn

The installed add-ons for packages

| Column |

Data type |

Description |

Nullable |

Relation |

| ProjectID |

int |

Refers to ProjectID in table ProjectTarget |

NO |

Refers to table ProjectTargetPackage |

| TargetID |

int |

Referes to TargetID in table ProjectTarget |

NO |

Refers to table ProjectTargetPackage |

| Package |

nvarchar (50) |

Package installed for this project target |

NO |

Refers to table ProjectTargetPackage |

| AddOn |

nvarchar (50) |

Add-on installed for this package |

NO |

- |

Table ProjectTransfer

The transfers of data of a project

| Column |

Data type |

Description |

Nullable |

Relation |

| ProjectID |

int |

ID of the project, part of PK |

NO |

Refers to table ProjectPublished |

| TransferDate |

datetime |

Date of the transfer. Part of PKDefault value: getdate() |

NO |

- |

| ResponsibleUserID |

int |

The ID of the user as stored in table UserProxy of the source database, responsible for the transferDefault value: (-1) |

YES |

- |

| TargetID |

int |

If the transfer regards a postgres database, the ID of the target (= Postgres database) as stored in table Target |

YES |

Refers to table Target |

| Package |

nvarchar (50) |

If the transfer regards a package, the name of the package, otherwise empty |

YES |

- |

| Settings |

nvarchar (MAX) |

The versions, number of transfered data etc. of the objects concerned by the transfer [format: JSON] |

YES |

- |

Table ReferenceRelator

| Column |

Data type |

Description |

Nullable |

Relation |

| RefID |

int |

- |

NO |

- |

| Role |

nvarchar (3) |

- |

NO |

- |

| Sequence |

int |

- |

NO |

- |

| Name |

nvarchar (255) |

- |

NO |

- |

| AgentURI |

varchar (255) |

- |

YES |

- |

| SortLabel |

nvarchar (255) |

- |

YES |

- |

| Address |

nvarchar (1000) |

- |

YES |

- |

| SourceView |

nvarchar (200) |

- |

NO |

- |

| BaseURL |

varchar (500) |

- |

NO |

- |

Table ReferenceTitle

| Column |

Data type |

Description |

Nullable |

Relation |

| BaseURL |

varchar (500) |

- |

NO |

- |

| RefType |

nvarchar (10) |

- |

NO |

- |

| RefID |

int |

- |

NO |

- |

| RefDescription_Cache |

nvarchar (255) |

- |

NO |

- |

| Title |

nvarchar (4000) |

- |

NO |

- |

| DateYear |

smallint |

- |

YES |

- |

| DateMonth |

smallint |

- |

YES |

- |

| DateDay |

smallint |

- |

YES |

- |

| DateSuppl |

nvarchar (255) |

- |

NO |

- |

| SourceTitle |

nvarchar (4000) |

- |

NO |

- |

| SeriesTitle |

nvarchar (255) |

- |

NO |

- |

| Periodical |

nvarchar (255) |

- |

NO |

- |

| Volume |

nvarchar (255) |

- |

NO |

- |

| Issue |

nvarchar (255) |

- |

NO |

- |

| Pages |

nvarchar (255) |

- |

NO |

- |

| Publisher |

nvarchar (255) |

- |

NO |

- |

| PublPlace |

nvarchar (255) |

- |

NO |

- |

| Edition |

smallint |

- |

YES |

- |

| DateYear2 |

smallint |

- |

YES |

- |

| DateMonth2 |

smallint |

- |

YES |

- |

| DateDay2 |

smallint |

- |

YES |

- |

| DateSuppl2 |

nvarchar (255) |

- |

NO |

- |

| ISSN_ISBN |

nvarchar (18) |

- |

NO |

- |

| Miscellaneous1 |